
As businesses grapple with how to harness the impressive power of generative artificial intelligence, decision-makers are asking a logical question: How can I use generative AI right now?

Generative AI can help you increase productivity, enhance client and employee experiences, and accelerate business priorities over time. We discuss how to use the current capabilities of Large Language Models (LLMs) as well as how to address material business risks such as hallucinations from generative AI.

Brainstorm ideas. Knowledge workers in nearly every area of your firm can use ChatGPT as a brainstorming partner. It can provide catalysts to kick off group discussions or go deeper exploring the ideas employees generate themselves. Although AI can sometimes make up—or “hallucinate”—information, using it as a brainstorming partner to spark ideas is low risk.

Generate first drafts. The newest and most sophisticated LLMs excel at generating text that is both coherent and relevant, closely resembling human output. This can help you clear the hurdle of a blank page. Consider using AI to develop first drafts of internal presentations, meeting summaries, emails, and policy proposals.

Process documents. To free up employees’ time for specialized, high-value work, financial firms can pass filings, research, transcripts, and news stories to ChatGPT, which can summarize and extract key takeaways. New incoming documents can be automatically classified to determine which staff should take a closer look. Foreign-language documents and customer communications can be automatically translated. Employees can provide a table of data to ChatGPT and have it draft narrative prose.

Repurpose content for different audiences. Employees can pass in their own drafts and use ChatGPT as an editor to modify the tone, expand key points, or tighten up certain sections. For example, you could repurpose a complex or lengthy presentation into a streamlined set of talking points for an all-employee meeting. Be sure to set company standards for what can be passed into public generative AI systems (like OpenAI’s ChatGPT). It’s critical to ensure that proprietary or confidential company content is not entered in public models, which may use your data for training, potentially resulting in a data leak. Instead, consider building a private internal instance as we’ve done for FactSet employees to ensure your data will not leave the firm. To learn how we did this, read our Insight article, “We Gave Our Employees Access to ChatGPT. Here’s What Happened.”
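One way a company standard like this might be partly enforced is with an automated screen that runs before text is sent to a public model. The following is a minimal sketch, not a description of FactSet's approach: the pattern list, the labels, and the function names `policy_violations` and `safe_to_send` are all hypothetical, and a simple pattern check like this is only a coarse first filter that would sit alongside employee training and policy review.

```python
import re

# Hypothetical patterns for illustration only; each firm would define its own
# based on its data-classification policy.
BLOCKED_PATTERNS = {
    "confidentiality marker": re.compile(
        r"\b(confidential|internal only|proprietary)\b", re.IGNORECASE
    ),
    "email address": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "long account-like number": re.compile(r"\b\d{9,}\b"),
}


def policy_violations(text):
    """Return the labels of every blocked pattern found in the text."""
    return [
        label
        for label, pattern in BLOCKED_PATTERNS.items()
        if pattern.search(text)
    ]


def safe_to_send(text):
    """True when no blocked pattern matches, so the text passes this screen."""
    return not policy_violations(text)


if __name__ == "__main__":
    draft = "CONFIDENTIAL: Q3 plan, contact jane.doe@example.com"
    print(policy_violations(draft))
    print(safe_to_send("Please tighten the tone of this public press release."))
```

A draft that trips any pattern could be blocked outright or redirected to the firm's private instance instead of a public system.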

Explore and learn. Generative AI is great at providing cohesive explanations that accelerate your learning. For example, you might ask: “What does a standard portfolio risk analysis involve?” The response will provide a high-level definition and might list specific elements such as asset allocation analysis and stress testing. If an answer is too complex, you can ask the system to simplify it. You can also prompt the system with specific questions to go deeper into the topic. However, especially for topics outside of general knowledge, it is important to validate the model’s answers with a trusted source. You cannot rely on today’s LLMs to natively provide accurate facts, figures, or references.

Guide business processes. ChatGPT can provide valuable guidance for questions like “How do I set up a working group?” or “Can you create an onboarding and training workshop for new employees?” Users can ask it to provide more detail to flesh out any of its recommended steps, or to brainstorm alternative methods.

Accelerate coding. Developers at your firm may already be using ChatGPT or another AI coding assistant to help debug software, write code comments and documentation, understand what a section of code is trying to accomplish, or even write new sections of code. As with any use of an LLM, a human expert must review the output for accuracy. Coding LLMs have proven to be great time-savers for many software engineers, but they can also produce non-working, buggy, or insecure code that can pose a genuine threat to your organization if not properly validated. Recent studies have shown that developers using AI coding assistants produce less secure code while believing it is more secure, so applying industry-standard security checks to AI-generated code is key.

Develop conversational support bots. Many businesses would benefit from a private instance of an LLM fine-tuned with their proprietary support documentation. Because of the potential for inaccurate responses, companies should first provide the support chatbot to their internal support agents. The agents can validate answers, correct any errors, and provide the final results to clients. This will not only save the agents valuable time, but their edits can also serve as feedback to improve the bot. Eventually, the more reliable chatbot can be used by clients directly. You can substantially improve the accuracy, reliability, and explainability of the bot’s answers by using a retrieval-augmented generation (RAG) approach on your help documentation rather than relying on fine-tuning alone.
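To make the RAG idea concrete, here is a minimal sketch of its retrieval step: find the help passage most relevant to a question, then place that passage in the prompt so the model answers from it and can cite it. Everything here is an illustrative assumption, including the `HELP_DOCS` snippets, the function names, and the prompt wording. The word-overlap scoring is a stand-in for the embedding-based search a production system would more likely use, and the call to the LLM itself is left out.

```python
import math
import re
from collections import Counter

# Hypothetical help-documentation snippets; a real system would load these
# from the firm's own support content.
HELP_DOCS = {
    "reset-password": "To reset your password open Settings, choose Security, and select Reset Password.",
    "export-report": "To export a report open the report, click Export, and choose PDF or Excel format.",
    "add-user": "Administrators can add a user from the Admin console under Users and then Invite.",
}


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(question, docs, k=1):
    """Return the k (doc_id, text) pairs most similar to the question."""
    q = Counter(tokenize(question))
    ranked = sorted(
        docs.items(),
        key=lambda item: cosine(q, Counter(tokenize(item[1]))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question, docs, k=1):
    """Assemble a prompt that grounds the model in the retrieved passages."""
    context = "\n".join(
        f"[{doc_id}] {text}" for doc_id, text in retrieve(question, docs, k)
    )
    return (
        "Answer using only the passages below and cite the passage id.\n"
        f"{context}\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    print(build_prompt("How do I reset my password?", HELP_DOCS))
```

Because the answer is tied to a named passage, a support agent reviewing the bot's output can check it against the cited source, which is where the explainability benefit comes from.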

Simplify user experience. LLMs can also help provide a conversational interface in software products. This will make applications more intuitive and discoverable, relieving users from the burden of mastering a product’s myriad menus, features, and options. A firm’s machine learning team can use LLMs to generate synthetic training data to improve their existing internal natural language understanding models. Dedicated LLMs could power employee knowledge bases. These can be fine-tuned with internal corporate data or use RAG to answer employees’ questions about corporate policy or procedures, accelerate new-hire onboarding, and reduce knowledge silos.

Validating Outputs from Generative AI

Although there are risks with generative AI, there are effective ways to use it to your benefit today. As your organization does so, information professionals will need to consider the material risks of:

- Hallucinations
- Data privacy
- Copyrighted content
- Security breaches

Legal and Compliance departments may need to develop strategies to mitigate risks relating to privacy regulations and intellectual property rights—or even defamation from AI-generated disinformation. Security teams will be wary of more effective phishing cyberattacks, more prolific AI-generated malware, and improperly tested AI-generated internal code.

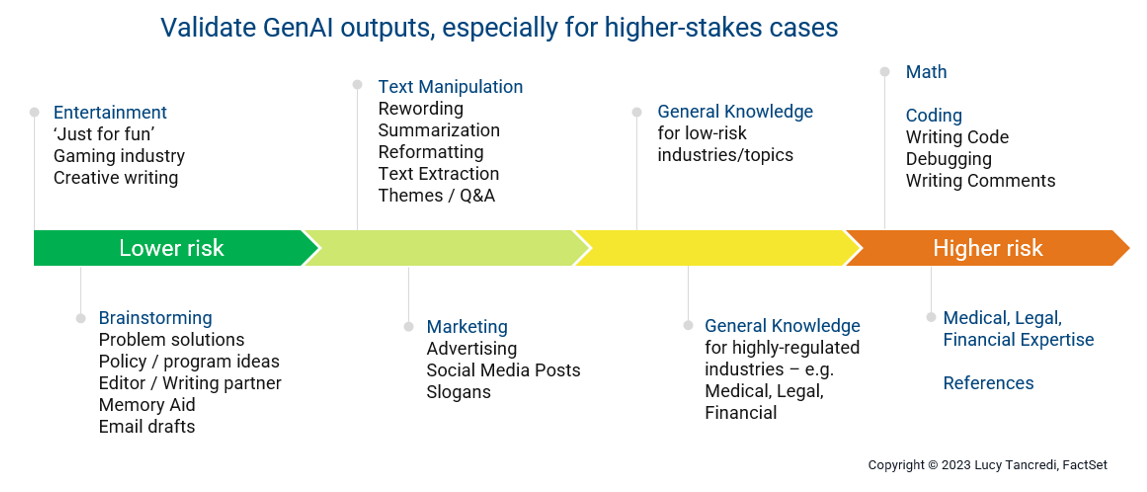

When it comes to hallucinations, it’s important for users to understand the need to validate generative AI outputs, particularly for higher-stakes use cases or those not in the LLM’s wheelhouse. Using this technology for brainstorming, creative writing, or editing support is low risk, as you will naturally evaluate and modify its outputs. Large Language Models are inherently skilled at text manipulation, so rewording and summarizing are also well-suited use cases.

However, once you start asking questions requiring specialized expertise in highly regulated industries like medicine, law, or finance, or more exacting domains like math or coding, or when asking for specific references or citations, it’s crucial that you carefully review any generative AI output and validate it against a trusted source.

To learn more, visit FactSet Artificial Intelligence.

This blog post is for informational purposes only. The information contained in this blog post is not legal, tax, or investment advice. FactSet does not endorse or recommend any investments and assumes no liability for any consequence relating directly or indirectly to any action or inaction taken based on the information contained in this article.