There’s a new “gen” in the artificial intelligence (AI) lexicon. While Nvidia CEO Jensen Huang recently claimed that “artificial general intelligence” could emerge within the next five years, many experts believe that the new technology is not a threat to human jobs or lifestyle in the near future.

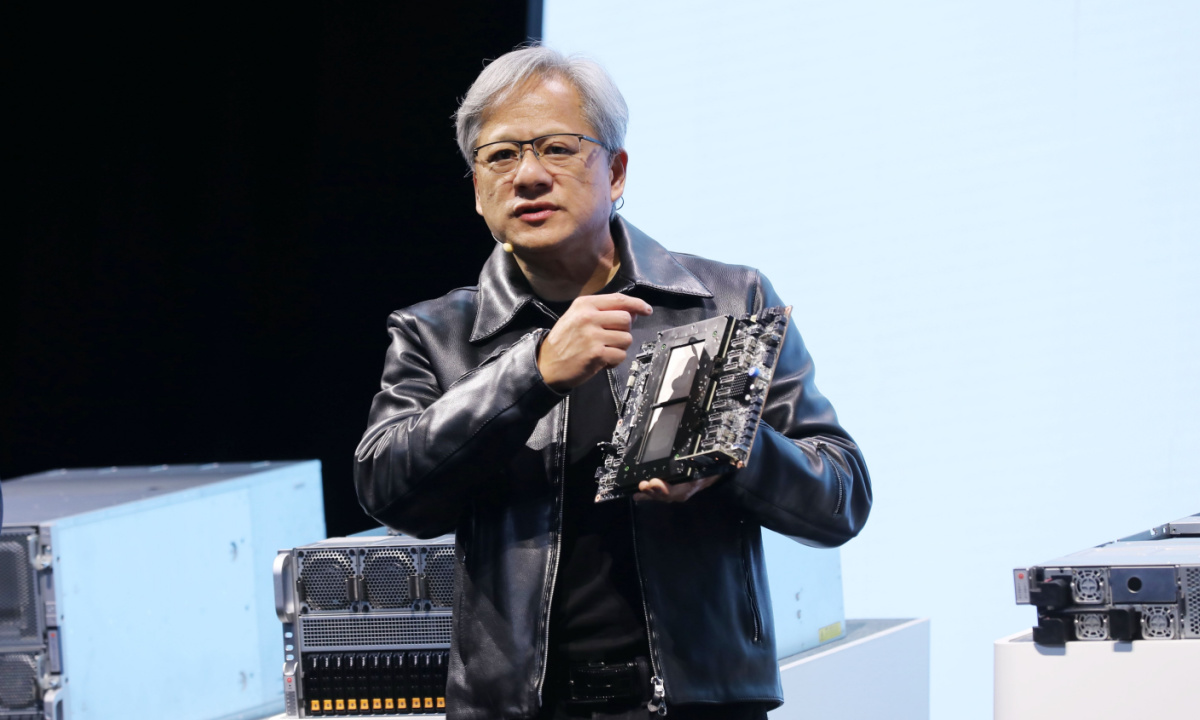

Huang, who leads the world’s top producer of AI chips, was responding to a question at an economic forum at Stanford University about the timeline for achieving Silicon Valley’s enduring ambition of developing computers capable of humanlike thought.

He explained that the timeline is significantly influenced by how the goal is characterized. If being able to pass tests intended for humans is the benchmark, he pointed out, we might see the emergence of artificial general intelligence (AGI) soon.

“If I gave an AI … every single test that you can possibly imagine, you make that list of tests and put it in front of the computer science industry, and I’m guessing in five years time, we’ll do well on every single one,” said Huang, whose firm reached $2 trillion in market value last week.

What Is AGI?

There’s widespread confusion among the public about the difference between AGI and AI. AI follows specific instructions, while AGI aims for autonomous intelligence, Duncan Curtis, SVP of AI Product and Technology at the AI data firm Sama, told PYMNTS in an interview.

Models like ChatGPT struggle to create entirely new content or combine different information to solve unfamiliar problems, Curtis said. They can sometimes sound overly confident, even when they’re not completely accurate. AGI, by contrast, is envisioned as handling new challenges effortlessly while continuously learning and improving.

“Due to this confusion, it’s easy for the public to mistake a model like ChatGPT as an intelligent AGI,” he added. “Having a better definition of what both AI and AGI are capable of is necessary for safer public-AI interactions that will also help reduce the unrealistic expectations and overhyping of both AGI and AI.”

Some researchers have even claimed there is a glimmering of AGI in current AI models. Last year, Microsoft researchers published a paper saying GPT-4 represents a significant advancement towards achieving AGI. The team noted that despite GPT-4 being purely a large language model, the early version had remarkable capabilities in different fields and tasks such as abstraction, comprehension, coding, vision, mathematics, law, understanding of human motives, medicine, and even emotions.

Long Way From Human

Despite Huang’s recent prediction, many observers think we won’t see AGI soon. Right now, even the term “AGI” is unclear because there’s no precise definition of what constitutes intelligence, tech adviser Vaclav Vincalek told PYMNTS in an interview.

“It has been the subject of millennia of debate in scientific and philosophical circles,” he added. “Since the ‘I’ is not clearly defined (or even understood), it is impossible to create a specification against which the technology people can build the system.”

Yann LeCun, Meta’s chief AI scientist and a pioneer in deep learning, has previously pushed back against Huang’s statements that AGI is on the horizon. LeCun has expressed his belief that current AI systems are still decades away from achieving a level of sentience that includes common sense.

LeCun suggested that society is more likely to develop AI with the intelligence of a cat or dog before reaching human-level AI. He also pointed out that the tech industry’s focus on language models and text data alone isn’t enough to create the advanced AI systems that experts have dreamed about for years.