This issue of Nature Computational Science includes a Focus that highlights recent advancements, challenges, and opportunities in the development and use of digital twins across different domains.

More than 50 years ago, as part of the Apollo 13 mission, NASA used high-fidelity simulators controlled by a network of digital computers to train astronauts and mission controllers. These simulators were particularly important for testing failure scenarios and refining the instructions on which success in critical mission situations depended. During the mission, an in-flight explosion critically damaged the spacecraft's service module, and mission controllers used data from the spacecraft to update their simulators so that they reflected the condition of their physical counterpart¹. Ultimately, the simulations helped inform the decisions that brought the astronauts safely home.

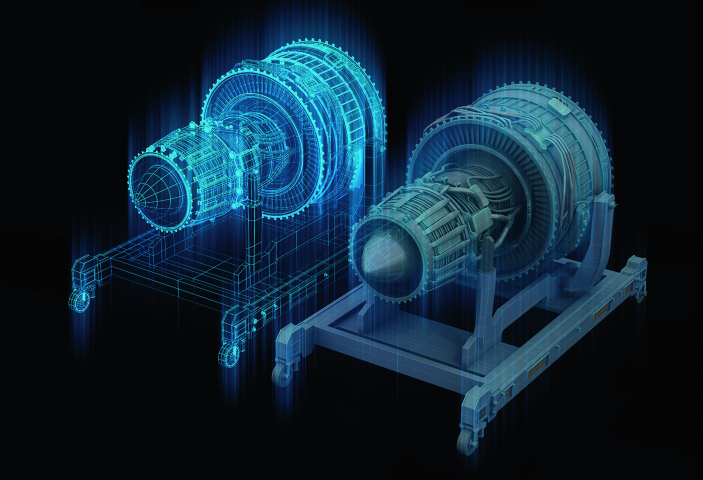

Credit: Haiyin Wang / Alamy Stock Photo

The term ‘digital twin’ would not be coined until more than 40 years later², but the Apollo simulators were already notable examples of the concept behind it (although this claim has recently been disputed³). These simulators represented a set of virtual assets intended to mimic the structure and behavior of their physical counterpart (the spacecraft) and, most importantly, they received data from that physical asset to inform critical decisions that realized value. They were also more than ‘just traditional model simulations’: they were designed to acquire and process real-time data so that mission controllers could respond to high-stakes situations and make real-time decisions that, in turn, directly influenced the physical asset. The events of Apollo 13 described above, for instance, unfolded over just a few days.

Since then, there have been major developments in the technologies that surround digital twins. Ever-growing amounts of data are being generated and made available, including in real time. Sophisticated modeling capabilities have been implemented, and data-driven methodologies, including machine learning, have risen sharply, allowing us to take advantage of this data deluge. And while NASA used state-of-the-art telecommunications technology at the time of the Apollo 13 mission, we now have access to advanced Internet of Things networks that can substantially accelerate data movement. The list goes on.

Another important development concerns applications. The engineering and industrial domains have arguably used digital twins for longer than other fields, for example to develop, test, and maintain aircraft and spacecraft in aerospace engineering, and to optimize product life-cycle management in manufacturing systems (where the concept of a digital twin was first introduced by Michael Grieves⁴, before the term itself was coined). More recently, however, many other areas of science, from the biomedical sciences to the climate and social sciences, have recognized the potential of digital twins. For instance, digital twins could enable improved precision medicine, more accurate weather and climate predictions, and better-informed urban planning.

Undoubtedly, all of these developments bring exciting new capabilities and opportunities for digital twins, but also myriad challenges. This issue presents a Focus that highlights recent developments in this burgeoning field, bringing together expert opinions on the requirements, gaps, and opportunities involved in implementing digital twins across different domains.

In a Perspective, Fei Tao and colleagues discuss advancements and current challenges for industrial applications of digital twins. Digital twins have become very popular in industry and manufacturing: various conceptual models have been proposed, and many well-known companies have invested heavily in this space. Nevertheless, according to the authors, there is still a long way to go to improve the maturity of digital twins and to facilitate large-scale industrial applications. Among the many challenges and opportunities that remain, the authors argue that the trade-offs between overly simplistic models (which are cheaper but less accurate) and overly complex models (which are more accurate but can be prohibitively expensive) need to be well understood and evaluated case by case; that the opportunities and risks brought by artificial intelligence need to be better assessed; and that validation benchmarks and international standards are urgently needed for the field to mature.

Across aerospace and mechanical engineering, the use cases for digital twins are vast; their potential benefits, gaps, and future directions in these domains are highlighted in a Perspective by Karen Willcox and Alberto Ferrari. Notably, the authors advocate treating the digital twin as an asset in its own right: just as cost–benefit–risk trade-offs guide the design and development of physical assets, the development and life cycle of a digital twin cannot be an afterthought and must involve investments paralleling those made over the life cycle of a physical asset. Willcox and Ferrari also discuss the computational cost and complexity of digital twins. They argue that, to satisfy stringent computational constraints, surrogate models (approximation models that behave similarly to the full simulation model but are computationally cheaper) may play an essential role. They further argue that, for complex systems, it is not beneficial to view a digital twin as an identical twin of the physical asset; instead, a digital twin must be designed to be fit for purpose, depending on cost–benefit trade-offs and required capabilities.

In a Perspective in this Focus, Reinhard Laubenbacher and colleagues explore applications of digital twins in the biomedical sciences. The authors argue that, unlike in industry and engineering, there is no broad consensus on what constitutes a digital twin in medicine, mainly because of challenges unique to the field: the relevant underlying biology is partially or completely unknown, and the required data are often unavailable or difficult to collect, which in turn hampers the exchange of data between the physical and digital twins. That said, the authors discuss many promising applications for medical digital twins in curative and preventive medicine, as well as in developing novel therapeutics and helping to address health disparities and inequalities.

Michael Batty also examines the definition of a digital twin in a Perspective, this time in the context of urban planning. Batty argues that, while the coupling between the real and the digital tends to be strong and formalized for physical assets, the same is not true for social, economic, and organizational systems such as cities, where data transfer is often not automated. Batty also discusses the need for a human in the loop in the design and use of digital twins, and the possibility that cities are intrinsically unpredictable, which makes it difficult to apply the standard definition of digital twins to this field. By contrast, Luís M. A. Bettencourt argues in a Comment that cities do exhibit many levels of predictability that can be leveraged and represented in digital twins, in particular for processes that are increasingly understood through statistical models and theory. Both authors note that cities are very complex and are associated with long-term dynamics (in contrast to the short-term dynamics typical of many applications), and both discuss the computational challenges of building digital twins of cities, such as high computational complexity and the need for multiscale modeling support.

In Earth systems science, Peter Bauer, Torsten Hoefler, and colleagues argue in a Comment, much as Batty does for urban planning, that flexible human-in-the-loop interaction is essential for understanding and making efficient use of the data provided by digital twins of Earth. To achieve this interaction, the authors argue, large pre-trained data-driven models are expected to be key, both for facilitating access to information hidden in complex data and for implementing the human interface of digital twins (for instance, through intelligent conversational agents). The authors also point to the need for agreed-upon standards for data quality and model quality, echoing some of the issues raised by Tao et al. for industrial applications.

Evidently, there are many commonalities across these domains in terms of current obstacles and opportunities for digital twins, yet there is also variability in how digital twins are perceived and used, depending on the specific challenges faced by each research community. In this context, the National Academies of Sciences, Engineering, and Medicine (NASEM) recently published a report⁵ that identifies gaps in the research underlying digital twin technology across multiple areas of science. The report, summarized by Karen Willcox and Brittany Segundo in a Comment, proposes a cross-domain definition of digital twins based on a previously published definition⁶ and highlights many issues and gaps that are also echoed by contributions to this Focus: the critical role of verification, validation, and uncertainty quantification; the notion that a digital twin should be ‘fit for purpose’, and not necessarily an exact replica of its physical twin; the need to protect individual privacy when relying on identifiable, proprietary, and sensitive data; the importance of the human in the loop; and the need for sophisticated and scalable methods that enable an efficient bidirectional flow of data between the virtual and physical assets.

It goes without saying that the Focus covers only a fraction of the potential applications of digital twins: many other areas could benefit from this technology, including, but not limited to, civil engineering⁷,⁸, chemical and materials synthesis⁹, sustainability¹⁰,¹¹, and agriculture¹². There are undoubtedly many challenges to overcome and research gaps to address before digital twins can deliver on their promise. As stated in the NASEM report⁵, realizing the potential of digital twins will require an integrated research agenda, an interdisciplinary workforce, and collaborations across domains. We hope that this Focus will facilitate such collaborations and further discussion within the broad computational science community.

References

1. Ferguson, S. Apollo 13: The First Digital Twin (Siemens, 2020); https://blogs.sw.siemens.com/simcenter/apollo-13-the-first-digital-twin/
2. National Research Council. NASA Space Technology Roadmaps and Priorities: Restoring NASA’s Technological Edge and Paving the Way for a New Era in Space (The National Academies Press, 2012); https://doi.org/10.17226/13354
3. Grieves, M. Physical twins, digital twins, and the Apollo myth. LinkedIn (8 September 2022); https://go.nature.com/3ILB4Im
4. Grieves, M. Product Lifecycle Management: Driving the Next Generation of Lean Thinking (McGraw Hill Professional, 2005).
5. National Academies of Sciences, Engineering, and Medicine. Foundational Research Gaps and Future Directions for Digital Twins (The National Academies Press, 2023); https://doi.org/10.17226/26894
6. Digital Twin: Definition & Value — An AIAA and AIA Position Paper (AIAA, 2021); https://www.aia-aerospace.org/publications/digital-twin-definition-value-an-aiaa-and-aia-position-paper/
7. Feng, J., Ma, L., Broyd, T. & Chen, K. Autom. Constr. 130, 103838 (2021).
8. Torzoni, M., Tezzele, M., Mariani, S., Manzoni, A. & Willcox, K. E. Comput. Methods Appl. Mech. Eng. 418, 116584 (2024).
9. David, N., Sun, W. & Coley, C. W. Nat. Comput. Sci. 3, 362–364 (2023).
10. Tzachor, A. et al. Nat. Sustain. 5, 822–829 (2022).
11. Mehrabi, Z. Nature 615, 189 (2023).
12. Shrivastava, C. et al. Nat. Food 3, 413–427 (2022).


About this article

Cite this article

The increasing potential and challenges of digital twins.

Nat Comput Sci 4, 145–146 (2024). https://doi.org/10.1038/s43588-024-00617-4

Published: 26 March 2024

Issue Date: March 2024