Scientists are increasingly expected to incorporate socio-political considerations in their work, for instance by anticipating potential socio-political ramifications. While this is aimed at promoting pro-social values, critics argue that the desire to serve society has led to self-censorship and even to the politicization of science. Philosophers of science have developed various strategies to distinguish influences of values that safeguard the integrity and freedom of research from those that impinge on them. While there is no consensus on which strategy is the best, they all imply some trade-offs between social desirability and the aims of science. If scientists are to incorporate socio-political considerations, they should receive relevant guidance and training on how to make these trade-offs. Codes of conduct for research integrity, as professional codes of ethics, can help scientists navigate evolving professional expectations. Unfortunately, in their current state, these codes fail to offer guidance on how to weigh possibly conflicting values against the aims of science. The new version of the European Code of Conduct (2023) is a missed opportunity in this regard. Future codes should include guidance on the trade-offs that professional scientists face when incorporating socio-political considerations. To increase effectiveness, codes should draw scientists’ attention to such trade-offs, make sure scientists construe them in appropriate ways, and help scientists understand the motivations behind pro-social policies. Considering the authority of these documents, especially the European one, amending codes of conduct can be a promising starting point for broader changes in education, journal publishing, and science funding.

Introduction

The professional expectations placed on scientists have shifted away from the traditional separation of science and society in myriad ways. There have been calls to incorporate sex, gender, and diversity analyses in the design of research (Hunt et al. 2022); to anticipate potential socio-political interpretations of research (Science Must Respect the Dignity and Rights of All Humans, 2022; Sudai et al. 2022); and to incorporate the knowledge of non-academic communities into science (Albuquerque et al. 2021). While these calls aim to promote social values, some critics argue that the prosocial desire to serve society has led to a form of self-censorship (Clark et al. 2023). Some go as far as to consider policies motivated by pro-social values an outright politicisation of science threatening its objectivity and even harming human welfare (Krylov and Tanzman, 2023).

Should we reject these calls and keep science as free as possible from socio-political considerations? On a more balanced note, it must be acknowledged that some influence of values is already widely considered necessary and legitimate. For instance, the principles of research ethics are universally accepted as legitimate, even though they limit what animal testing can be carried out. Nonetheless, critics might be right to highlight that the difference between outright political interference and integrating social values into science is more nuanced than it first seems. Social and political values, even the most shared and desirable ones, are not always perfectly aligned with the aims of science. Scholars working on this topic have developed many strategies to manage the influence of various socio-political values in such a way as to avoid or at least minimise risks to the freedom and integrity of science. All these strategies imply complex trade-offs between social values and scientific aims to be performed by scientists on a case-by-case basis.

Scientists should not be left alone in this task, and codes of conduct for research integrity can be invaluable tools for helping them in making these decisions. The newly revised European Code of Conduct for Research Integrity is a missed opportunity in this regard. Like many codes of conduct, it eschews meaningful guidance on how to weigh possibly conflicting values against the aims of science. This is a mistake: if scientists are to incorporate social considerations in their work, they must receive relevant training and guidance on how to navigate the complex trade-offs they are bound to face.

Here, we call for integrating such guidance in future codes of conduct and highlight three goals and related challenges of this task.

New expectations and old ideals

The introduction of non-scientific considerations into science can potentially undermine scientific knowledge. After all, science is considered trustworthy insofar as it does not depend on “subjective” values but only on observation and logical reasoning. This idea is encapsulated in the so-called value-free ideal of science, according to which non-scientific interests and values should not influence scientists’ reasoning (Douglas, 2009). Though popular throughout the 20th century, this ideal has started losing its appeal. Philosophers of science have shown that values necessarily influence many scientific activities, including decisions on what topic to study and how to study it; drawing conclusions from uncertain data; and communicating results in scientific and popularizing contexts (Elliott, 2017). The Intergovernmental Panel on Climate Change’s newest report acknowledges that values play a role in physical climate science (e.g. in event attribution) (Pulkkinen et al. 2022). Furthermore, the evolving professional expectations listed above are also not compatible with this ideal. For instance, biomedical scientists are asked to “incorporate within their own research practice an awareness and sensitivity” on how their use of terms like “sex” and “gender” may be received in legal and policy circles (Sudai et al. 2022, p. 804). This implies the expectation to integrate social values in scientific reasoning, hence to depart from the value-free ideal.

Though incompatible with pro-social policies, the value-free ideal can still count on supporters. Indeed, this ideal encapsulates a simple though crucial idea: non-scientific interests and values could bias scientific research and thus compromise its integrity. Moreover, many societal, political, and professional reasons can be given to defend such an ideal (Ambrosj, Dierickx et al. 2023). For instance, it can be argued that letting social and political values drive science could erode the professional autonomy of researchers. Similar concerns on the autonomy and freedom of research can motivate the claim that injecting social values into science leads to self-censorship (Clark et al. 2023; Krylov and Tanzman, 2023). Thus, while values might be inherent in science, there is no denying that they can pose risks to scientific integrity and freedom. Indeed, the scholars who acknowledge that science is inherently value-laden do not recommend letting all non-scientific values have unrestrained influence on science, but instead search for strategies to manage and limit their influence.

The next section shows how the evaluation of the new pro-social trend in science policy is less straightforward than it might seem. Complex trade-offs must be performed even when the values underlying science policies are virtually universally shared.

Challenging evaluations between scientific risks and social desirability

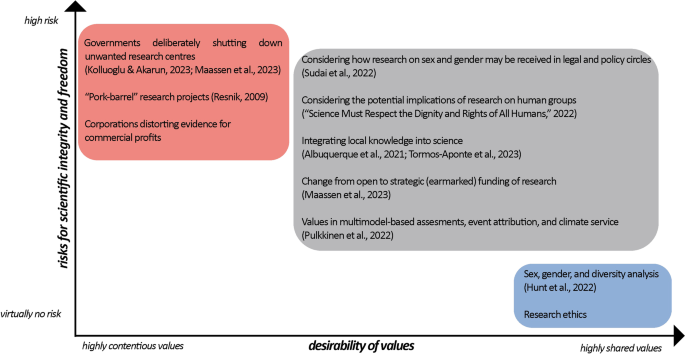

Whether or not societal values or other non-scientific interests should be integrated into scientific activity depends, at least, on the desirability of the values and the potential risks they pose to scientific freedom and integrity (Fig. 1).

The introduction of social, political, or commercial interests and values into science needs to be evaluated on a case-by-case basis. Such evaluation depends on both the effects of the influence of the specific value (how and to what extent it influences the science) and on how desirable the value is considered. The figure shows how some calls for value influence found in the literature might be evaluated. While some clear cases are likely to be uncontroversial (e.g. when values are widely shared and pose almost no risk to science; or when values are repugnant and represent clear threats to science), many cases fall in a grey area where different stakeholders holding different values may evaluate differently.

To be sure, in some cases, the evaluation is relatively straightforward. On the one hand, direct breaches of academic freedom, where (semi-)authoritarian governments deliberately shut down unwanted research centres, are clear examples of illegitimate influence (upper-left corner of Fig. 1). For instance, at Boğaziçi University in Istanbul the direct interference of the Turkish government has caused the deactivation of the university governing boards and committees, the shutdown of independent research and cultural centres, and the recruitment of politically aligned faculty members (Kolluoglu and Akarun, 2023). Similarly, a recent study on academic freedom commissioned by the EU Parliament reports how the Hungarian government has been bringing universities under strict supervision (Maassen et al. 2023). This has led, among other things, to the revocation of accreditation to gender studies programmes, and to the forced move of a prominent European institution, the Central European University, from Budapest to Vienna (CEU, 2018). On the other hand, the introduction of scientific practices motivated by social concerns might be helpful for science (lower-right corner of Fig. 1). The inclusion of sex, gender, and diversity analysis in scientific research is attuned to social needs and fosters scientific discovery and reproducible science (Hunt et al. 2022). Keeping track of sex and gender differences in clinical trials can lead to a better understanding of the functioning of the drug under study and more efficient and targeted therapies (Tannenbaum et al. 2019). In the case of research ethics, the influence of values such as human and animal rights is almost universally considered legitimate, even though these values constrain the range of acceptable research activities and sometimes prohibit methods that would otherwise be maximally efficient means to reach epistemic aims. For instance, keeping human subjects under controlled conditions and exposing them to toxins would produce more accurate knowledge, and more quickly, than relying on epidemiological studies and extrapolations from studies on animals. Such a study would be regarded as showing a potentially criminal and certainly reckless disregard for the safety and well-being of participants. This illustrates how moral values already take legitimate precedence over epistemic desiderata.

However, most policies and practices motivated by values fall in a grey area where evaluation is more controversial, even if these values are largely shared and considered legitimate. Let us look at three examples from Fig. 1.

First, consider a recent editorial published in Nature Human Behaviour presenting new ethical guidance on potential harms to population groups who are not participants in a scientific study (Science Must Respect the Dignity and Rights of All Humans, 2022). According to the editorial, scientists should “carefully consider the potential implications (including inadvertent consequences) of research on human groups” (p. 1030). This is an extension of the research ethics principles of care and respect for participants of a study to the wider population who might be affected by it. Accordingly, scientists are asked to actively reflect on their positionality, contextualise their findings both in scientific and non-scientific communications, and use inclusive and non-stigmatizing language. In extreme cases, this could lead scientists not to publish some results, even if no immediate harm to research participants was posed, merely because of possible future harm to the wider population. The editors recognize that there is a fine balance to be found between the enforcement of this guidance and the respect of scientific freedom. Thus, they commit themselves to a cautious use of the guidance, following the principle that “no research is discouraged simply because it may be socially or academically controversial” (p. 1029). This laudable commitment notwithstanding, some critics argue that the new guidance boils down to letting the decision on whether or not high-quality research should be published depend on the moral sensibilities of the editors (Clark et al. 2023). Thus, researchers would face trade-offs between non-scientific values (avoiding future social harm), and the epistemic aims of science (pursuing scientifically interesting research questions and disseminating sound results).

Second, let us consider calls for the engagement of lay communities in scientific research. Integrating local or indigenous knowledge in participatory research is motivated by very desirable social goals and has the potential to unlock information not available via standard scientific methods. However, it raises questions about the compatibility of non-scientific knowledge with academic knowledge. For instance, in ethnobiology and applied ecology it is well-known that traditional knowledge can help protect species and environments, but sometimes produces predictions that are not compatible with those of scientists (Albuquerque et al. 2021; Ludwig, 2016). How should traditional knowledge be incorporated into academic knowledge? Should it be incorporated only when it fits into the theoretical frameworks of Western science? Here it is important to notice that these problems are not exclusive to the integration of non-Western knowledge. Projects involving lay people in various capacities in the research process always pose some challenges (Bedessem and Ruphy, 2020). Although none of these challenges are in principle insurmountable, they imply complex trade-offs between socially and ethically desirable goals (including less represented social groups in the scientific enterprise) and different epistemic standards.

Third, funding bodies decide how much of a role social and political interests play in the allocation of funds across scientific domains and topics. Such decisions inevitably constrain the freedom of scientists to set their own research agenda, in favour of the pursuit of socio-political goals. In fact, researchers increasingly consider the growth in strategic (earmarked) funding of research to be a possible threat to their academic freedom (Maassen et al. 2023, p. 175). Nevertheless, earmarking is not necessarily an illegitimate breach of scientific freedom. Sometimes it might be the only way to fund expensive, innovative, and interdisciplinary projects (e.g. the Human Genome Project). This does not mean it is without dangers. At an extreme, there is the risk of promoting so-called “pork-barrel” projects, where short-sighted political interests overrule both academic freedom and societal interests (Resnik, 2009). And one need not go so far in order to point out potential downsides: legitimate decisions on the research agenda may have undesirable downstream effects on scientific outputs. When an entire field of study prioritises only certain research questions and methods, ignoring possible alternatives, knowledge gaps and an imbalanced representation of the object of study may follow (Hoyningen-Huene, 2023). Thus, the extent to which social and political interests should play a role in the allocation of funds is not clear-cut and remains open to controversy.

In these and other cases, there is no single and easy way to solve the complex trade-offs between social values and possible risks to scientific integrity and freedom. This means that in practice scientists will inevitably perform complex ethical-epistemic deliberations. Accordingly, they should receive appropriate guidance. The next section explores the current status of the guidance researchers receive on this topic, finding it insufficient.

The new European Code of Conduct: a missed opportunity

In search of guidance, it is natural to turn to ethical codes designed by the research community to self-regulate, as they represent consensus statements on the ethical and professional expectations placed on scientists (Desmond, 2020). These codes already offer detailed guidance on many ethical issues related to various research activities, including supervision, data management, collaboration, publication, authorship, and the review process. However, they still lag behind regarding guidance on how to navigate different pro-social expectations. In a recent study, we have shown that many national codes of European countries expect scientists to take into consideration social needs but fail to offer guidance on how these are to be weighed against the aims of science (Ambrosj et al. 2023). This situation is not exclusive to Europe. Table 1 shows guidance from a selection of prominent ethical documents from across the globe. Like their European counterparts, these documents expect scientists to incorporate social considerations in their work but do not detail how this should be done. More importantly, they do not acknowledge that the incorporation of social values is bound to lead to ethical-epistemic trade-offs.

Among codes of conduct, the European Code of Conduct for Research Integrity (ECoC) stands out as one of the most authoritative, used as a model by institutions and funding schemes across and beyond Europe. The new ECoC, published in June 2023, integrates the feedback of many stakeholders inside and outside academia, and marks an important advance over previous versions (see the accompanying document Feedback on Outcomes of the Stakeholder Consultation). These admirable efforts in the revision process notwithstanding, not much progress has been made regarding the guidance on how to integrate the interests of different stakeholders in the research process.

In particular, the new ECoC is silent on how to meet the social expectations placed on scientists discussed in the previous sections. As in previous versions, the ECoC only acknowledges the risks related to clear cases of illegitimate influence, such as political and commercial pressure (top left corner of Fig. 1): “research […] should develop independently of pressure from commissioning parties and from ideological, economic, or political interests” (ECoC, p. 3). By stressing the freedom of research and its independence from economic and commercial influence, the ECoC seems here to hold on to the old value-free ideal of science. However, in other passages, the ECoC is more attuned to those pro-social tendencies that are incompatible with the value-free ideal. For instance, in one passage, the ECoC requires research protocols to account for relevant differences in gender, culture, and ethnic origin, even if there is no mention of possible ethical-epistemic conflicts arising from this requirement. Another passage seems to grant, even if in passing, some legitimacy to the incorporation of non-scientific values into science: “Authors are transparent in their communication, outreach, and public engagement about assumptions and values influencing their research” (ECoC, p. 9). Remarkably, this is the only passage explicitly stating that research can be influenced by values in general, including non-epistemic values that go beyond the values of research integrity alone. Here too, however, there is no mention of the risks that non-scientific values and interests may pose to science or of the challenges researchers are bound to face in deciding how to integrate such values and interests. In general, in the new ECoC, there is no passage that guides researchers on how to comply with pro-social requirements without compromising the integrity of the research. This is unfortunate for, as established in the previous section, it is not always straightforward how to integrate social values, independently of their desirability, without infringing on the integrity and freedom of research.

We call for codes of conduct to broaden their scope to include guidance on the trade-offs that professional scientists face when incorporating socio-political considerations. Many strategies have been developed by philosophers of science to make sure that value influence is legitimate and does not infringe on the integrity of science. These usually include being transparent about the values at play and ensuring that these values are in some way representative of those held by the broader society (i.e. they do not represent exclusively the values of a small and powerful group such as industry or a political party) (e.g. Elliott, 2017; Pulkkinen et al. 2022). Strategies to reduce risks for academic freedom from political interference include considering whether the autonomy of single scientists or that of institutions is at stake; whether the restriction is about the content or the process of science; and whether it takes the form of oversight or more invasive micromanagement (Resnik, 2009). Despite the efforts of philosophers, there is still no consensus on how to distinguish between legitimate and illegitimate influence of values (Holman and Wilholt, 2022). Each of these strategies has its merits and shortcomings, and their application is such a context-dependent task that a one-size-fits-all solution is unlikely to be found. Thus, we maintain that instead of pretending to offer a ready-made solution to decide on legitimate and illegitimate value influences, codes of conduct should endow scientists with appropriate ethical and epistemic tools to make the complex deliberations they themselves are to perform. In the next section, we turn to how to effectively achieve this.

Goals and challenges of effective guidance on ethical-epistemic evaluations

Following the principles of attention, construal, and motivation (Epley and Tannenbaum, 2017), we identify three goals that guidance on ethical-epistemic deliberation should aim at in order to be effective, as well as related challenges.

First, codes of conduct should bring the attention of scientists to the trade-offs between different societal values and scientific aims they are expected to perform. This goal is thus a type of awareness raising, and is relatively easy to achieve. For purposes of a code like the ECoC, it will suffice to add a sentence or paragraph mentioning that pursuing legitimate social goals is not always perfectly in harmony with the epistemic purposes of science and thus scientists are expected to perform some trade-offs. Where codes explicitly require researchers to pursue some social goal, this qualifying sentence or paragraph should follow such requirements.

The difficulty here for codes is to offer guidance without succumbing to an artificially clear but ultimately inapplicable set of rules. As mentioned above, there is likely no universally applicable set of rules that can be used to determine whether some value, in general, is either a legitimate or illegitimate influence. Simple requirements like those already included in codes of conduct such as “be transparent” or “disclose conflicts of interest” do not always work. In both cases, it is more useful to treat the underlying principle (such as transparency) as a tool with which to conduct deliberations than as a justification for a generally applicable rule (“be transparent”) (Ambrosj et al. 2023; Desmond, 2023). For codes of conduct to effectively guide scientists in navigating challenging situations, they need to acknowledge that the difficulty cannot be resolved by following a simple and generally applicable rule and, at the same time, offer researchers some actionable principles. The discussion of the next goal suggests a way to make such principles more actionable.

Second, we should ensure that scientists themselves interpret or construe new requirements in appropriate ways. Scientists should actively reflect on how they should meet social responsibilities and on whether this impacts the quality of the research. To facilitate this active reflection, actionable principles should be offered in the form of guiding questions for researchers. For instance, the set of questions proposed by David Resnik and Kevin Elliott (2023, tbl. 3) to determine whether a study complies with scientific norms is a good starting point. However, these questions should be tailored to prompt researchers to reflect on their own ongoing work: “is our study project adequately addressing the possible relevant social implications?”; “are we ignoring some possible explanation because of our commitment to social values?”. In order not to excessively increase the length of codes of conduct, these questions may be published in separate supporting documents.

The challenge here is that scientists may treat these lists of questions as just another administrative requirement imposed by their funder or institution. To overcome this challenge, any addition of attention-raising statements or reflection-stimulating questions must be accompanied by other policies, as shown in the discussion of the next and last goal, to which we now turn.

Finally, the motivation behind value integration must be clear and shared. Most of the societal expectations on scientists are based on pro-social goals: these must be clearly conveyed in codes of conduct so that the underlying rationale can be understood and so that expectations of value integration are not perceived as a form of interference. Of course, adding a line in a code of conduct is not by itself sufficient to ensure the motivation of scientists: changes in education, journal publishing, and science funding should also be implemented. For instance, dedicated courses could be introduced, or existing training on research integrity could be supplemented with a module on pro-social policies, their benefits and limits. Philosophy of science might be more thoroughly integrated into scientific training, as recently suggested by the Science editor-in-chief (Thorp, 2024), to increase the awareness of the complex relationship between values and science in the scientific community, especially in its governing bodies such as faculty boards. As for publishing and funding, journals and funders could require a statement on pro-social aims or related scientific risks. This could be an additional section, in analogy to the disclosure of funds and conflicts of interest.

This is the most difficult goal to achieve and possibly the most contentious one as scientists might genuinely disagree with these policies and may have strong opinions against the injection of pro-social values into science. To mitigate this scepticism, major changes in scientific training and practice should not be imposed from above. Rather they should be the product of consultation with the various stakeholders of scientific research, including, of course, scientists themselves.

Conclusion

Science is not a value-free endeavour. The way it is organized, funded, and conducted makes it impossible, and possibly undesirable, for it to be isolated from the surrounding social and political reality. This has long been discussed in philosophy of science, but now seems to be recognized, to an extent, by the scientific community and science policy-makers as well. Accordingly, new expectations have been placed on scientists. Many scientists might be sceptical about the requirements to integrate values into their work. They might even regard these as a way to politicize and bias science. While the literature on values in science offers an invaluable source of thorough reflections and strategies to incorporate values without losing integrity, the fears of scientists are based on an uncontroversial observation: even our best values and intentions, if not well managed, can infringe on scientific integrity and freedom.

Rather than calling for a retreat back to the ivory tower in response to these challenges, we recommend making the evaluation of risks arising from integrating values a structural part of scientific practice and education. Scientists should receive the appropriate tools to perform these complex evaluations. Codes of conduct for research integrity are a good starting point as amending them is relatively inexpensive and because as authoritative documents they can (more or less directly) influence science policy and practice. As one of the most authoritative codes of conduct, ECoC can pave the way. By guiding researchers through the challenges inherent to the integration of various legitimate interests and values in research, the next ECoC can mark a further and decisive contribution towards a more socially responsive yet still reliable scientific community.

References

-

Albuquerque UP, Ludwig D, Feitosa IS, de Moura JMB, Gonçalves PHS, da Silva RH, da Silva TC, Gonçalves-Souza T, Ferreira Júnior WS (2021) Integrating traditional ecological knowledge into academic research at local and global scales. Regional Environ Change 21(2):45. https://doi.org/10.1007/s10113-021-01774-2

Google Scholar

-

Ambrosj J, Desmond H, Dierickx K (2023) The value-free ideal in codes of conduct for research integrity. Synthese, 202(5). https://doi.org/10.1007/s11229-023-04377-y

-

Ambrosj J, Dierickx K, Desmond H (2023) The Value-Free Ideal of Science: A Useful Fiction? A Review of Non-epistemic Reasons for the Research Integrity Community. Sci Eng Ethics 29(1):1. https://doi.org/10.1007/s11948-022-00427-9

Google Scholar

-

Bedessem B, Ruphy S (2020) Citizen Science and Scientific Objectivity: Mapping Out Epistemic Risks and Benefits. Perspect Sci 28(5):630–654. https://doi.org/10.1162/posc_a_00353

Google Scholar

-

CEU Central European University. (2018, December 3). CEU Forced Out of Budapest: To Launch U.S. Degree Programs in Vienna in September 2019. https://www.ceu.edu/article/2018-12-03/ceu-forced-out-budapest-launch-us-degree-programs-vienna-september-2019

-

Clark CJ, Jussim L, Frey K, Stevens ST, al-Gharbi M, Aquino K, Bailey JM, Barbaro N, Baumeister RF, Bleske-Rechek A, Buss D, Ceci S, Del Giudice M, Ditto PH, Forgas JP, Geary DC, Geher G, Haider S, Honeycutt N, von Hippel W (2023) Prosocial motives underlie scientific censorship by scientists: A perspective and research agenda. Proc Natl Acad Sci 120(48):e2301642120. https://doi.org/10.1073/pnas.2301642120

Google Scholar

-

Desmond H (2020) Professionalism in Science: Competence, Autonomy, and Service. Sci Eng Ethics 26(3):1287–1313. https://doi.org/10.1007/s11948-019-00143-x

Google Scholar

-

Desmond H (2023) The ethics of expert communication. Bioethics, bioe.13249. https://doi.org/10.1111/bioe.13249

-

Douglas H (2009) Science, Policy, and the Value-Free Ideal. University of Pittsburgh Press. https://doi.org/10.2307/j.ctt6wrc78

-

Elliott KC (2017) A Tapestry of Values: An Introduction to Values in Science. In A Tapestry of Values. Oxford University Press. https://doi.org/10.1093/acprof:oso/9780190260804.001.0001

-

Epley N, Tannenbaum D (2017) Treating Ethics as a Design Problem. Behav Sci Policy 3(2):73–84. https://doi.org/10.1177/237946151700300206

Google Scholar

-

Holman B, Wilholt T (2022) The new demarcation problem. Stud Hist Philos Sci 91:211–220. https://doi.org/10.1016/j.shpsa.2021.11.011

Google Scholar

-

Hoyningen-Huene P (2023) Objectivity, value-free science, and inductive risk. Eur J Philos Sci 13(1):14. https://doi.org/10.1007/s13194-023-00518-9

Google Scholar

-

Hunt L, Nielsen MW, Schiebinger L (2022) A framework for sex, gender, and diversity analysis in research. Science 377(6614):1492–1495. https://doi.org/10.1126/science.abp9775

Google Scholar

-

Kolluoglu B, Akarun L (2023) Standing up for the university. Nat Human Behav 1–2. https://doi.org/10.1038/s41562-023-01593-x

-

Krylov AI, Tanzman J (2023) Critical Social Justice Subverts Scientific Publishing. Eur Rev 31(5):527–546. https://doi.org/10.1017/S1062798723000327

Ludwig D (2016) Overlapping ontologies and Indigenous knowledge. From integration to ontological self-determination. Stud Hist Philos Sci Part A 59:36–45. https://doi.org/10.1016/j.shpsa.2016.06.002

Maassen P, Martinsen D, Elken M, Jungblut J, Lackner E, European Parliament. Directorate General for Internal Policies of the Union. (2023) State of play of academic freedom in the EU Member States: Overview of de facto trends and developments. Publications Office. https://doi.org/10.2861/466486

Pulkkinen K, Undorf S, Bender F, Wikman-Svahn P, Doblas-Reyes F, Flynn C, Hegerl GC, Jönsson A, Leung G-K, Roussos J, Shepherd TG, Thompson E (2022) The value of values in climate science. Nat Clim Change 12(1):1. https://doi.org/10.1038/s41558-021-01238-9

Resnik DB (2009) Playing Politics with Science. Oxford University Press. https://doi.org/10.1093/acprof:oso/9780195375893.001.0001

Resnik DB, Elliott KC (2023) Science, Values, and the New Demarcation Problem. J Gen Philos Sci. https://doi.org/10.1007/s10838-022-09633-2

Science must respect the dignity and rights of all humans (2022) Nat Hum Behav 6(8):8. https://doi.org/10.1038/s41562-022-01443-2

Sudai M, Borsa A, Ichikawa K, Shattuck-Heidorn H, Zhao H, Richardson SS (2022) Law, policy, biology, and sex: Critical issues for researchers. Science 376(6595):802–804. https://doi.org/10.1126/science.abo1102

Tannenbaum C, Ellis RP, Eyssel F, Zou J, Schiebinger L (2019) Sex and gender analysis improves science and engineering. Nature 575(7781):7781. https://doi.org/10.1038/s41586-019-1657-6

Thorp HH (2024) Teach philosophy of science. Science 384(6692):141. https://doi.org/10.1126/science.adp7153

Acknowledgements

This article is part of a project funded by FWO (Fonds Wetenschappelijk Onderzoek) with grant number G0D6920N.

Author information

Contributions

The three authors contributed equally to the idea of writing an opinion piece on this topic. Author 1 drafted the first version of the text and produced the figures and tables. Author 1, Author 2, and Author 3 contributed to the subsequent revisions of the text.

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethical approval

This article does not contain any studies with human participants performed by any of the authors.

Informed consent

No informed consent was needed for this study as it did not involve human subjects.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

41599_2024_3261_MOESM1_ESM.docx

Table S1. Passages on the integration of social values into science included in prominent ethical codes and statements from across the world.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ambrosj, J., Dierickx, K. & Desmond, H. Codes of conduct should help scientists navigate societal expectations. Humanit Soc Sci Commun 11, 770 (2024). https://doi.org/10.1057/s41599-024-03261-5

Received: 04 March 2024

Accepted: 03 June 2024

Published: 17 June 2024
