By analysing citations and authors’ publication records, Argos identifies ‘high risk’ papers that warrant further investigation. Credit: bernie_photo/Getty

Which scientific publishers and journals are worst affected by fraudulent or dubious research papers — and which have done least to clean up their portfolio? A technology start-up founded to help publishers spot potentially problematic papers says that it has some answers, and has shared its early findings with Nature.

The science-integrity website Argos, which was launched in September by Scitility, a technology firm headquartered in Sparks, Nevada, gives papers a risk score on the basis of their authors’ publication records, and on whether the paper heavily cites already-retracted research. A paper categorized as ‘high risk’ might have multiple authors whose other studies have been retracted for reasons related to misconduct, for example. Having a high score doesn’t prove that a paper is low quality, but suggests that it is worth investigating.
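To make the idea concrete, here is a minimal sketch of how a score of this kind could combine the two signals described above. It is not Argos’s actual method, which the article does not detail: the `Paper` fields, the `risk_category` function and both thresholds are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Paper:
    title: str
    # For each author: how many of their other papers were retracted
    # for misconduct-related reasons (hypothetical input data).
    author_misconduct_retractions: List[int]
    references_total: int
    references_retracted: int


def risk_category(paper: Paper,
                  min_flagged_authors: int = 2,
                  min_retracted_share: float = 0.10) -> str:
    """Return 'high', 'medium' or 'low'. Thresholds are illustrative assumptions."""
    # Signal 1: authors with a record of misconduct-related retractions.
    flagged = sum(1 for n in paper.author_misconduct_retractions if n > 0)
    # Signal 2: share of the reference list that is already retracted.
    if paper.references_total:
        share = paper.references_retracted / paper.references_total
    else:
        share = 0.0

    if flagged >= min_flagged_authors:
        return "high"
    if flagged > 0 or share >= min_retracted_share:
        return "medium"
    return "low"


example = Paper("Example study", [1, 3, 0], references_total=40, references_retracted=0)
print(risk_category(example))
```

As with the real tool, a ‘high’ result in this sketch is a prompt for human investigation, not a verdict on the paper.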

Argos is one of a growing number of research-integrity tools that look for red flags in papers. These include the Papermill Alarm, made by Clear Skies, and Signals, by Research Signals, both London-based firms. Because creators of such software sell their manuscript-screening tools to publishers, they are generally reluctant to name affected journals. But Argos, which is offering free accounts to individuals and fuller access to science-integrity sleuths and journalists, is the first to show public insights.

“We wanted to build a piece of technology that was able to see hidden patterns and bring transparency to the industry,” says Scitility co-founder Jan-Erik de Boer, who is based in Roosendaal, the Netherlands.

By early October, Argos had flagged more than 40,000 high-risk and 180,000 medium-risk papers. It has also indexed more than 50,000 retracted papers.

Publisher risk ratings

Argos’s analysis shows that the publisher Hindawi — a now-shuttered subsidiary of the London-based publisher Wiley — has the highest volume and proportion of already-retracted papers (see ‘Publishers at risk’). That’s not surprising, because Wiley has retracted more than 10,000 Hindawi-published papers over the past two years in response to concerns raised by editors and sleuths; this amounts to more than 4% of the brand’s total portfolio over the past decade. One of its journals, Evidence-based Complementary and Alternative Medicine, has retracted 741 papers, more than 7% of its output.

Argos risk-score ratings flag more than 1,000 remaining Hindawi papers — another 0.65% — as still ‘high risk’. This suggests that, although Wiley has done a lot to clean up its portfolio, it might not have yet completed the job. The publisher told Nature that it welcomed Argos and similar tools, and had been working to rectify the issues with Hindawi.

Source: Argos.

Other publishers seem to have much more investigation to do, with few retractions relative to the number of high-risk papers flagged by Argos (publishers might have already examined some of these papers and determined that no action was necessary).

The publishing giant Elsevier, based in Amsterdam, has around 5,000 retractions but more than 11,400 high-risk papers according to Nature’s analysis of Argos data, although all of these together make up just over 0.2% of the publisher’s output over the past decade. The publisher MDPI has retracted 311 papers but has more than 3,000 high-risk papers, about 0.24% of its output. Springer Nature has more than 6,000 retractions and more than 6,000 high-risk papers, about 0.3% of its output. (Nature’s news team is independent of its publisher.)

In response to requests for comment, all of the publishers flagged as having the greatest number of high-risk articles say that they are working hard on research integrity, that they use technology to screen submitted articles, and that their retractions demonstrate their commitment to cleaning up problematic content.

Springer Nature says that it rolled out two tools in June that have since helped to spot hundreds of fake submitted manuscripts; several publishers noted their work with a joint integrity hub that offers software that can flag suspicious papers. Jisuk Kang, a publishing manager at MDPI in Basel, Switzerland, says that products such as Argos can give broad indications of potential issues, but notes that the publisher couldn’t check the accuracy or reliability of the figures on the site. She adds that the largest publishers and journals would inevitably have higher numbers of high-risk papers, so the share of output is a better metric.
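Kang’s point about counts versus shares can be illustrated with the three publishers’ figures quoted earlier in this article. The sketch below uses only those rounded numbers (several are reported as ‘more than’ or ‘around’, so they are approximate), and it assumes that each percentage covers retractions and high-risk papers combined, as stated for Elsevier. The variable names are illustrative.

```python
# Rounded figures as quoted in this article; treat them as approximate.
publishers = {
    "Elsevier": {"retractions": 5000, "high_risk": 11400, "share_pct": 0.2},
    "MDPI": {"retractions": 311, "high_risk": 3000, "share_pct": 0.24},
    "Springer Nature": {"retractions": 6000, "high_risk": 6000, "share_pct": 0.3},
}


def flagged_total(name: str) -> int:
    """Retracted plus high-risk papers for one publisher."""
    entry = publishers[name]
    return entry["retractions"] + entry["high_risk"]


# Ranking by raw volume favours scrutiny of the largest publishers...
by_count = sorted(publishers, key=flagged_total, reverse=True)
# ...whereas ranking by share of output normalizes for size.
by_share = sorted(publishers, key=lambda n: publishers[n]["share_pct"], reverse=True)

print("By count:", by_count)
print("By share:", by_share)
```

On these figures the order reverses: Elsevier has the most flagged papers by volume, but the smallest share of the three.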

The publishing brands with the greatest proportions of high-risk papers in their portfolios are Impact Journals (0.82%), Spandidos (0.77%) and Ivyspring (0.67%), the Argos figures suggest. Impact Journals tells Nature that, although its journals have experienced problems in the past, they have now improved their integrity. The publisher says that there were “0% irregularities” in its journal Oncotarget over the past two years, owing to the adoption of image-checking tools such as Image Twin, which have become available only in the past few years. Portland Press, for which high-risk papers make up 0.41% of the portfolio, says that it has taken corrective action, bringing in enhanced stringency checks.

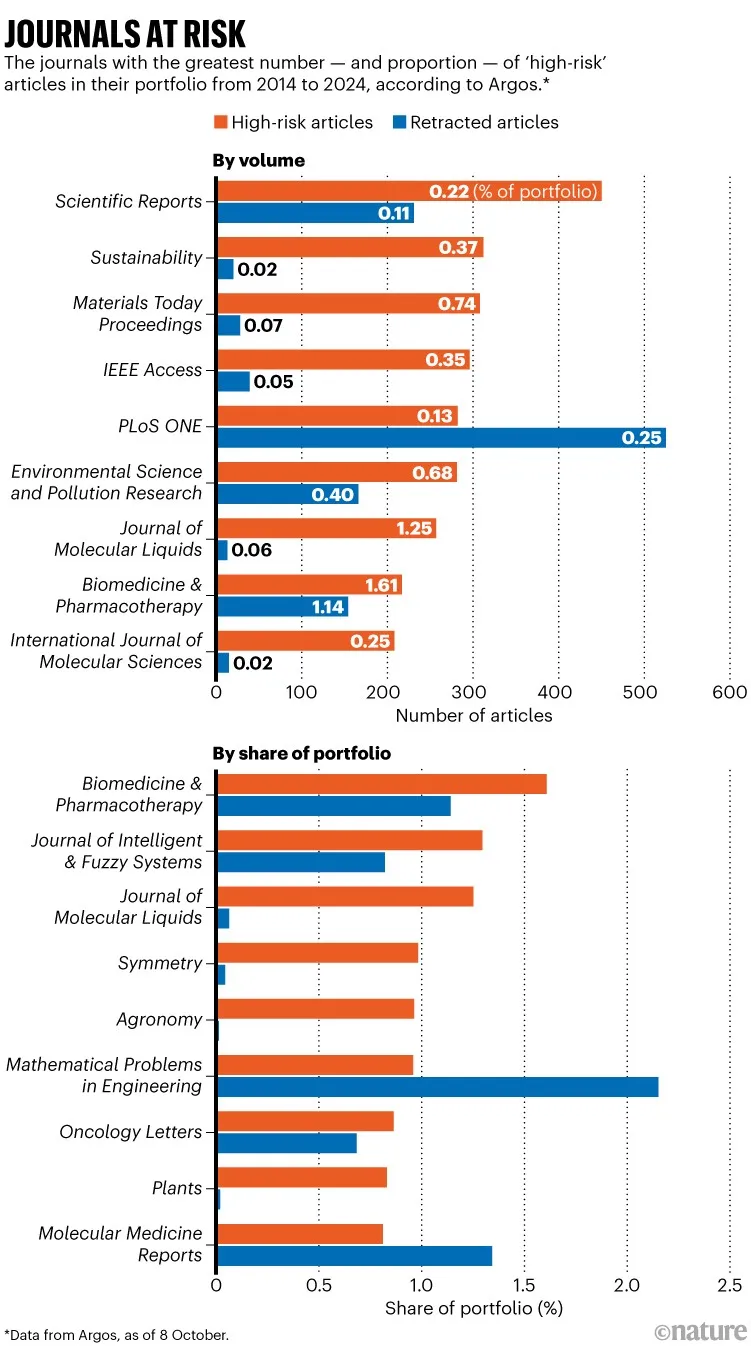

Journal risk ratings

Argos also provides figures for individual journals. Unsurprisingly, Hindawi titles stand out for both the number and proportion of papers that have been retracted, whereas other journals have a lot of what Argos identified as high-risk work remaining (see ‘Journals at risk’). By volume, Springer Nature’s mega-journal Scientific Reports leads, with 450 high-risk papers and 231 retractions, together around 0.3% of its output. On 16 October, a group of sleuths penned an open letter to Springer Nature raising concerns about problematic articles in the journal.

In response, Chris Graf, head of research integrity at Springer Nature, says that the journal investigates every issue raised with it. He adds that the proportion of its content that has been highlighted is comparatively low given its size.

Journals with particularly large gaps between the number of retracted works and potentially suspect papers include MDPI’s Sustainability (20 retractions and 312 high-risk papers; 0.4% of its output) and Elsevier’s Materials Today Proceedings (28 retractions and 308 high-risk papers; 0.8% of its output). Elsevier’s Biomedicine & Pharmacotherapy has the highest proportion of high-risk papers — 1.61% of its output.

“The volume of fraudulent materials is increasing at scale, boosted by systematic manipulation, such as ‘paper mills’ that produce fraudulent content for commercial gain, and AI-generated content,” says a spokesperson for Elsevier, adding that in response “we are increasing our investment in human oversight, expertise and technology”.

Source: Argos.

Open data

Argos’s creators emphasize that the site relies on open data collected by others. Its sources include the website Retraction Watch, which maintains a database of retracted papers (made free through a deal with the non-profit organization Crossref) that includes the reasons for a retraction, so that tools examining author records can focus on retractions that mention misconduct. The analysis also relies on records of articles that heavily cite retracted papers, collated by Guillaume Cabanac, a computer scientist at the University of Toulouse, France.

Argos, like some other analysts, focuses on networks of authors with a history of misconduct. Other research-integrity tools also flag papers on the basis of suspicious content, such as close textual similarity to bogus work, or ‘tortured phrases’, a term coined by Cabanac for the strange wording choices that authors make to avoid triggering plagiarism detectors.

“Both approaches have merit, but identifying networks of researchers engaged in malpractice is likely to be more valuable,” says James Butcher, a former publisher at Nature-branded journals and The Lancet, who now runs the consultancy Journalology in Liverpool, UK. That is because AI-assisted writing tools might be used to help fraudsters to avoid obvious textual tells, he says. Butcher adds that many major publishers have built or acquired their own integrity tools to screen for various red flags in manuscripts.

One of the trickiest issues for integrity tools that rely mostly on author retraction records is correctly distinguishing between authors with similar names — an issue that might skew Argos’s figures. “The author disambiguation problem is the single biggest problem the industry has,” says Adam Day, founder of Clear Skies.

De Boer, who formerly worked at Springer Nature, says that anyone can create an account to access Argos for free, but Scitility aims to sell a version of the tool to big publishers and institutions, who could plug it directly into their manuscript-screening workflows.

Butcher applauds the Argos team’s transparency. “There needs to be more visibility on journals and publishers that cut corners and fail to do appropriate due diligence on the papers that they publish and monetize,” he says.