Abstract

The event detection technique has been introduced to light-field microscopy, boosting its imaging speed by orders of magnitude while simultaneously enhancing axial resolution in scattering media.

As life science has developed rapidly over the past decades, the demand for novel optical instruments has become increasingly urgent: new imaging functions in information dimension, imaging resolution, acquisition speed, and so on help uncover new biomedical phenomena and mechanisms. In this regard, light-field microscopy (LFM) has emerged as an imaging tool that acquires not only spatial but also angular information of specimens, thus realizing volumetric imaging that reveals axial depth information through perspective views and focal stacks1. The general working principle of LFM is to place a microlens array at either the image plane or the Fourier plane, which encodes the angular information of different regions into a single snapshot. The volumetric data cube can then be recovered from the measurement using reconstruction algorithms. As a representative computational imaging modality, LFM offers 3D imaging and aberration correction capabilities, and has been widely applied in neuronal activity recording2, subcellular interaction observation3, turbulence-corrected telescopes4, and so on.
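To illustrate how a focal stack can be computed from angular information, the sketch below implements classic shift-and-add refocusing. It assumes the raw light-field image has already been rearranged into sub-aperture (perspective) views indexed by angular coordinates (u, v), that disparities are whole pixels, and that there is no noise. The function names and the single-point test scene are hypothetical; this is a textbook illustration, not the reconstruction algorithm of any cited work.

```python
from typing import Dict, List, Tuple

Image = List[List[float]]


def shift_image(img: Image, dy: int, dx: int) -> Image:
    """Translate an image by whole pixels with zero padding: out[y][x] = img[y - dy][x - dx]."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            sy, sx = y - dy, x - dx
            if 0 <= sy < h and 0 <= sx < w:
                out[y][x] = img[sy][sx]
    return out


def refocus(views: Dict[Tuple[int, int], Image], slope: int) -> Image:
    """Shift-and-add refocusing.

    views maps an angular coordinate (u, v) to its sub-aperture image. A point whose
    disparity equals `slope` appears at (x0 + slope*u, y0 + slope*v) in view (u, v);
    shifting every view back by slope*(u, v) aligns it, so that depth comes into focus.
    """
    first = next(iter(views.values()))
    h, w = len(first), len(first[0])
    acc = [[0.0] * w for _ in range(h)]
    for (u, v), img in views.items():
        shifted = shift_image(img, -slope * v, -slope * u)
        for y in range(h):
            for x in range(w):
                acc[y][x] += shifted[y][x]
    n = len(views)
    return [[value / n for value in row] for row in acc]


def simulate_point_views(size: int, y0: int, x0: int, disparity: int,
                         angles: Tuple[int, ...]) -> Dict[Tuple[int, int], Image]:
    """Hypothetical test scene: a single point source seen from a grid of angles."""
    views = {}
    for u in angles:
        for v in angles:
            img = [[0.0] * size for _ in range(size)]
            img[y0 + disparity * v][x0 + disparity * u] = 1.0
            views[(u, v)] = img
    return views


# Sweep the refocusing slope (one focal-stack slice per value) and keep the sharpest.
views = simulate_point_views(size=15, y0=7, x0=7, disparity=2, angles=(-1, 0, 1))
peaks = {s: max(max(row) for row in refocus(views, s)) for s in range(4)}
best_slope = max(peaks, key=peaks.get)
print(best_slope, peaks[best_slope])
```

Only the slice whose slope matches the point's disparity stacks all nine views on the same pixel; every other slice spreads the point over nine pixels. Real LFM pipelines add sub-pixel interpolation or model-based deconvolution on top of this idea.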

Although the spatial and angular resolution of LFM has improved in recent years thanks to high-resolution image sensors, advanced optical design, and cutting-edge deep-learning algorithms5,6,7,8, imaging speed remains a challenge because it is directly limited by the camera's acquisition speed9,10. Even with high-speed cameras, the large number of output frames places a heavy load on system bandwidth and post-processing algorithms. Furthermore, the shorter exposure time of high-speed acquisition may increase measurement noise, degrading imaging quality. LFM development is therefore caught in a tradeoff among imaging resolution, imaging speed, and processing efficiency under limited throughput and computing power.
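For a sense of scale, the back-of-the-envelope estimate below computes the uncompressed data rate of a frame-based camera. The sensor parameters (2048 × 2048 pixels, 16-bit readout) are illustrative assumptions rather than the specifications of any cited system, and the helper name is hypothetical.

```python
def raw_data_rate_bytes(width: int, height: int, bits_per_pixel: int, fps: int) -> int:
    """Uncompressed bytes per second streamed by a frame-based camera (no readout overhead)."""
    return width * height * bits_per_pixel * fps // 8


# Illustrative (assumed) sensor: 2048 x 2048 pixels, 16-bit readout.
for fps in (100, 1000):
    rate = raw_data_rate_bytes(2048, 2048, 16, fps)
    print(f"{fps:>5} fps -> {rate / 1e9:.2f} GB/s")
```

Under these assumptions, moving from 100 fps to 1 kHz raises the stream from about 0.84 GB/s to about 8.39 GB/s, or roughly 30 TB per hour of continuous recording, before any reconstruction is attempted.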

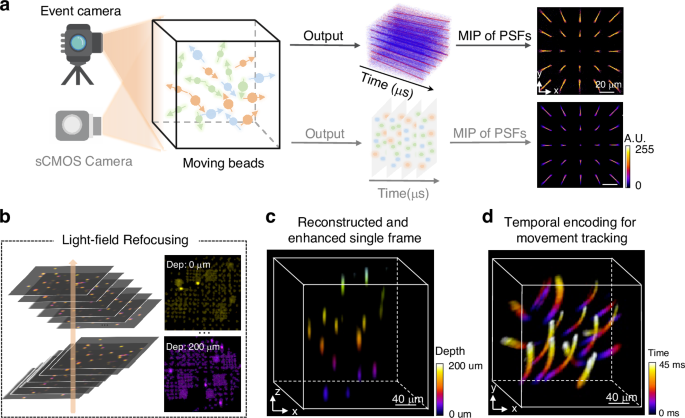

To break this speed limitation, Ruipeng Guo, Qianwan Yang, Andrew S. Chang, Guorong Hu, Joseph Greene, Christopher V. Gabel, and Lei Tian from Boston University, together with Sixian You from the Massachusetts Institute of Technology, have reported in a newly published paper11 in Light: Science & Applications an EventLFM technique that achieves ultra-fast light-field imaging at kHz frame rates (Fig. 1). Unlike conventional LFM, which directly captures light intensity, EventLFM introduces an event camera that acquires only brightness variations. This enables the capture of transient features while reducing the data volume, bypassing the limitations of low frame rate and large data volume. The authors have also developed a deep-learning-based reconstruction algorithm to recover 3D dynamics from snapshot event measurements. Experiments demonstrate successful reconstruction of fast-moving and rapidly blinking 3D fluorescent samples at kHz frame rates. Because event acquisition is free of intensity accumulation in the scattering medium, the technique naturally retains an anti-scattering ability, and it has been used to record blinking neuronal signals in scattering mouse brain tissue and to track GFP-labeled neurons in 3D in freely moving C. elegans.
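To make "acquiring only brightness variations" concrete, the sketch below converts a sequence of intensity frames into events using the idealized contrast-threshold model commonly used to describe event cameras: a pixel fires an event of polarity +1 or -1 each time its log intensity moves by a threshold from the level at which it last fired. The threshold value, the frame-based input, and all names are illustrative assumptions; real sensors fire asynchronously with fine-grained timestamps and additional noise, and this is not the EventLFM acquisition or reconstruction pipeline.

```python
import math
from typing import List, NamedTuple, Sequence


class Event(NamedTuple):
    x: int
    y: int
    t: float
    polarity: int  # +1: brighter, -1: darker


def frames_to_events(frames: Sequence[List[List[float]]], timestamps: Sequence[float],
                     threshold: float = 0.2, eps: float = 1e-6) -> List[Event]:
    """Idealized contrast-threshold event model applied to sampled intensity frames.

    Each pixel keeps the log intensity at which it last fired; whenever the current
    log intensity differs from that reference by at least `threshold`, it emits one
    event per threshold crossing and moves the reference accordingly.
    """
    reference = [[math.log(v + eps) for v in row] for row in frames[0]]
    events: List[Event] = []
    for frame, t in zip(frames[1:], timestamps[1:]):
        for y, row in enumerate(frame):
            for x, value in enumerate(row):
                log_i = math.log(value + eps)
                while abs(log_i - reference[y][x]) >= threshold:
                    polarity = 1 if log_i > reference[y][x] else -1
                    reference[y][x] += polarity * threshold
                    events.append(Event(x, y, t, polarity))
    return events


# A 1 x 3 "sensor": static, brightening (x2), and dimming (x0.5) pixels.
frames = [[[1.0, 1.0, 1.0]], [[1.0, 2.0, 0.5]]]
events = frames_to_events(frames, timestamps=[0.0, 0.001])
print(len(events), [e.polarity for e in events])
```

In this toy example the static pixel produces no data at all, which is the mechanism behind the data reduction described above; each changing pixel emits three events because a twofold intensity change corresponds to a log-intensity change of about 0.69, spanning three thresholds of 0.2.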

a Comparison of an event camera and an sCMOS camera for light-field microscopy. b The event stream is used to generate time-surface frames with the corresponding algorithm; each time-surface frame is then reconstructed using a light-field refocusing algorithm. c Color-coded 3D light-field reconstruction of the object, with an optional deep-learning technique. d Color-coded 3D motion trajectory reconstructed over a 45 ms time span. The subfigures are cropped from the corresponding reference
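Panel b mentions converting the event stream into time-surface frames. One common definition, assumed here because the caption does not specify the EventLFM variant, assigns each pixel exp(-(t_ref - t_last)/τ), where t_last is the time of that pixel's most recent event at or before a reference time t_ref, so recently active pixels appear bright and older activity fades. The function name, the decay constant τ, and the choice to ignore polarity are illustrative assumptions.

```python
import math
from typing import Iterable, List, Optional, Tuple

EventTuple = Tuple[int, int, float, int]  # (x, y, t, polarity)


def time_surface(events: Iterable[EventTuple], width: int, height: int,
                 t_ref: float, tau: float) -> List[List[float]]:
    """Exponentially decaying time surface evaluated at time t_ref.

    Each pixel stores exp(-(t_ref - t_last) / tau), where t_last is the timestamp of its
    most recent event at or before t_ref; pixels without such an event stay 0.
    Polarity is ignored in this simplified version.
    """
    last: List[List[Optional[float]]] = [[None] * width for _ in range(height)]
    for x, y, t, _polarity in events:
        if t <= t_ref and (last[y][x] is None or t > last[y][x]):
            last[y][x] = t
    return [[0.0 if t_last is None else math.exp(-(t_ref - t_last) / tau) for t_last in row]
            for row in last]


# Events at three pixels; the event at t = 0.012 s lies after t_ref and is ignored.
events = [(0, 0, 0.000, 1), (1, 0, 0.004, -1), (2, 0, 0.009, 1), (2, 0, 0.012, 1)]
surface = time_surface(events, width=3, height=1, t_ref=0.010, tau=0.005)
print([round(v, 3) for v in surface[0]])
```

Each time-surface frame is an ordinary 2D image, which is what allows it to be passed to a refocusing or learned reconstruction step as in panels b and c; sliding t_ref forward yields a frame sequence whose rate is set by the chosen step rather than by a camera exposure.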

This work opens a new research direction for high-speed, anti-scattering imaging in microscopy. Moreover, event detection could also be incorporated into other computational imaging systems, such as snapshot compressive imaging12 and ptychographic imaging13, to enhance their imaging speed. Because event detection encodes brightness variations rather than intensity, new decoding algorithms are needed to handle these different measurement formats, with particular attention to measurement noise, which may be more severe than in intensity detection.

Following the heuristic idea of introducing novel detection devices into existing imaging systems to enhance performance, this research perspective can be extended to a variety of cutting-edge image sensors and devices. For detection sensitivity, emerging single-photon detectors14, spiking cameras15, quantum detection systems16, and the corresponding processing techniques17 can replace conventional CMOS or CCD detectors to achieve unprecedented sensitivity to weak signals (Fig. 2). For imaging resolution, advanced gigapixel cameras18 can provide more pixels to reveal fine details. For information dimension, high-dimensional detectors can be introduced to acquire additional information on spectrum19,20, phase21,22, polarization23,24, and semantics such as edges25 or features26. The higher imaging performance and the additional information beyond intensity may open a new avenue for subsequent intelligent processing, enabling challenging applications such as in vivo deep-tissue imaging27, astronomical imaging4, and non-line-of-sight imaging28.

The subfigures are cropped from the corresponding references.

One should note that although these novel devices can indeed enhance imaging performance in certain aspects, "every coin has two sides." In event or spiking detection, the high imaging speed and binary signals come at the cost of bright-field intensity information, which is more in line with human vision15. In high-resolution or high-dimensional detection, the increased data volume places a heavier load on data transmission and post-processing. One should therefore weigh the pros and cons for each specific application and choose the elements that deliver the most needed imaging performance. On the other hand, a multimodal fusion strategy29,30 lets different devices complement one another and alleviates their individual shortcomings.

Looking forward, advances in multiple fields, including materials science, integrated circuits, and computer science, together with their interdisciplinary integration, will boost the development of next-generation optical sources, elements, and detectors, leading to groundbreaking imaging techniques not only in microscopy but also in mesoscopic and macroscopic detection31,32. Especially in the era of artificial intelligence and large models, imaging systems can acquire further intelligence, creating new applications and revolutionizing how we observe and understand the natural world.

References

1. Levoy, M. et al. Light field microscopy. In Proceedings of ACM SIGGRAPH 2006, 924–934 (ACM, Boston, 2006).
2. Prevedel, R. et al. Simultaneous whole-animal 3D imaging of neuronal activity using light-field microscopy. Nat. Methods 11, 727–730 (2014).
3. Lu, Z. et al. Long-term intravital subcellular imaging with confocal scanning light-field microscopy. Nat. Biotechnol. https://doi.org/10.1038/s41587-024-02249-5 (2024).
4. Wu, J. M. et al. An integrated imaging sensor for aberration-corrected 3D photography. Nature 612, 62–71 (2022).
5. Xiong, B. et al. Mirror-enhanced scanning light-field microscopy for long-term high-speed 3D imaging with isotropic resolution. Light Sci. Appl. 10, 227 (2021).
6. Zhang, Y. L. et al. DiLFM: an artifact-suppressed and noise-robust light-field microscopy through dictionary learning. Light Sci. Appl. 10, 152 (2021).
7. Zhang, Y. et al. Computational optical sectioning with an incoherent multiscale scattering model for light-field microscopy. Nat. Commun. 12, 6391 (2021).
8. Wang, Z. Q. et al. Real-time volumetric reconstruction of biological dynamics with light-field microscopy and deep learning. Nat. Methods 18, 551–556 (2021).
9. Lu, Z. et al. Virtual-scanning light-field microscopy for robust snapshot high-resolution volumetric imaging. Nat. Methods 20, 735–746 (2023).
10. Zhang, Z. K. et al. Imaging volumetric dynamics at high speed in mouse and zebrafish brain with confocal light field microscopy. Nat. Biotechnol. 39, 74–83 (2021).
11. Guo, R. P. et al. EventLFM: event camera integrated Fourier light field microscopy for ultrafast 3D imaging. Light Sci. Appl. 13, 144 (2024).
12. Yuan, X., Brady, D. J. & Katsaggelos, A. K. Snapshot compressive imaging: theory, algorithms, and applications. IEEE Signal Process. Mag. 38, 65–88 (2021).
13. Zheng, G. A. et al. Concept, implementations and applications of Fourier ptychography. Nat. Rev. Phys. 3, 207–223 (2021).
14. Hadfield, R. H. Single-photon detectors for optical quantum information applications. Nat. Photonics 3, 696–705 (2009).
15. Zhu, L. et al. Ultra-high temporal resolution visual reconstruction from a fovea-like spike camera via spiking neuron model. IEEE Trans. Pattern Anal. Mach. Intell. 45, 1233–1249 (2022).
16. Healey, A. J. et al. Quantum microscopy with van der Waals heterostructures. Nat. Phys. 19, 87–91 (2023).
17. Bian, L. H. et al. High-resolution single-photon imaging with physics-informed deep learning. Nat. Commun. 14, 5902 (2023).
18. Brady, D. J. et al. Multiscale gigapixel photography. Nature 486, 386–389 (2012).
19. Zhu, X. X. et al. Broadband perovskite quantum dot spectrometer beyond human visual resolution. Light Sci. Appl. 9, 73 (2020).
20. Bian, L. H. et al. A broadband hyperspectral image sensor with high spatio-temporal resolution. Preprint at https://arxiv.org/abs/2306.11583 (2023).
21. Camphausen, R. et al. A quantum-enhanced wide-field phase imager. Sci. Adv. 7, eabj2155 (2021).
22. Park, Y., Depeursinge, C. & Popescu, G. Quantitative phase imaging in biomedicine. Nat. Photonics 12, 578–589 (2018).
23. Garcia, M. et al. Bio-inspired color-polarization imager for real-time in situ imaging. Optica 4, 1263–1271 (2017).
24. Garcia, M. et al. Bioinspired polarization imager with high dynamic range. Optica 5, 1240–1246 (2018).
25. Lee, S. et al. Programmable black phosphorus image sensor for broadband optoelectronic edge computing. Nat. Commun. 13, 1485 (2022).
26. Ballard, Z. et al. Machine learning and computation-enabled intelligent sensor design. Nat. Mach. Intell. 3, 556–565 (2021).
27. Chen, Y., Wang, S. F. & Zhang, F. Near-infrared luminescence high-contrast in vivo biomedical imaging. Nat. Rev. Bioeng. 1, 60–78 (2023).
28. Faccio, D., Velten, A. & Wetzstein, G. Non-line-of-sight imaging. Nat. Rev. Phys. 2, 318–327 (2020).
29. Yankeelov, T. E., Abramson, R. G. & Quarles, C. C. Quantitative multimodality imaging in cancer research and therapy. Nat. Rev. Clin. Oncol. 11, 670–680 (2014).
30. Zhang, Y. D. et al. Advances in multimodal data fusion in neuroimaging: overview, challenges, and novel orientation. Inf. Fusion 64, 149–187 (2020).
31. Cardin, J. A., Crair, M. C. & Higley, M. J. Mesoscopic imaging: shining a wide light on large-scale neural dynamics. Neuron 108, 33–43 (2020).
32. Omar, M., Aguirre, J. & Ntziachristos, V. Optoacoustic mesoscopy for biomedicine. Nat. Biomed. Eng. 3, 354–370 (2019).

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bian, L., Chang, X., Xu, H. et al. Ultra-fast light-field microscopy with event detection. Light Sci. Appl. 13, 306 (2024). https://doi.org/10.1038/s41377-024-01603-1


Published: 07 November 2024


DOI: https://doi.org/10.1038/s41377-024-01603-1