Abstract

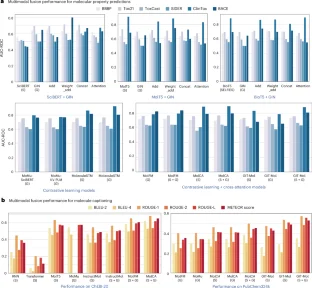

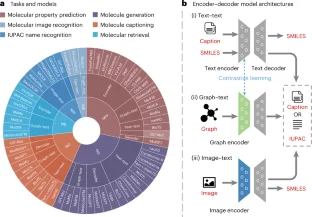

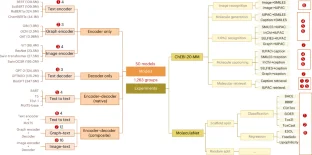

Deep learning has significantly advanced molecular modelling and design, enabling more efficient understanding and discovery of novel molecules. In particular, large language models introduce a new research paradigm that tackles scientific problems from a natural language processing perspective. They have enhanced both the understanding and the generation of molecules, often surpassing existing methods through their ability to decode and synthesize complex molecular patterns. However, two key issues remain: how to quantify the match between models and data modalities, and how to identify the knowledge-learning preferences of models. To address these challenges, we propose a multimodal benchmark, named ChEBI-20-MM, and perform 1,263 experiments to assess models' compatibility with data modalities and their knowledge acquisition. Through a modal transition probability matrix, we gain insight into which modalities are most suitable for each task. Furthermore, we introduce a statistically interpretable approach that discovers context-specific knowledge mapping through localized feature filtering. Our analysis sheds light on the learning mechanism of these models and paves the way for advancing large language models in molecular science.
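
The abstract does not spell out how the modal transition probability matrix is built. Purely as an illustration, and not as the authors' definition, one could read it as a square matrix over modalities whose entry (i, j) is a non-negative score for translating modality i into modality j, with each row normalized to sum to 1. The sketch below assumes that construction; the functions `transition_matrix` and `best_target`, the modality names and the scores are all hypothetical.

```python
from typing import Dict, List, Tuple


def transition_matrix(
    modalities: List[str],
    scores: Dict[Tuple[str, str], float],
) -> List[List[float]]:
    """Row-normalize non-negative source->target scores into a stochastic matrix.

    Hypothetical construction for illustration only: missing pairs count as 0,
    negative scores are clamped to 0, and an all-zero row is left as zeros.
    """
    matrix = []
    for src in modalities:
        row = [max(scores.get((src, dst), 0.0), 0.0) for dst in modalities]
        total = sum(row)
        matrix.append([v / total for v in row] if total > 0 else row)
    return matrix


def best_target(modalities: List[str], matrix: List[List[float]], src: str) -> str:
    """Return the target modality with the highest transition probability from `src`."""
    row = matrix[modalities.index(src)]
    return modalities[max(range(len(row)), key=row.__getitem__)]


if __name__ == "__main__":
    mods = ["SMILES", "SELFIES", "caption"]
    # Made-up scores standing in for a task metric on each src -> dst translation.
    demo = {
        ("SMILES", "caption"): 0.6,
        ("SMILES", "SELFIES"): 0.2,
        ("SELFIES", "caption"): 0.5,
        ("caption", "SMILES"): 0.3,
    }
    m = transition_matrix(mods, demo)
    for name, row in zip(mods, m):
        print(name, [round(v, 2) for v in row])
    print(best_target(mods, m, "SMILES"))
```

Row normalization makes rows comparable even when tasks are scored on different scales, but it also discards absolute performance, so a row built from uniformly weak scores can still look decisive.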

Data availability

The ChEBI-20-MM dataset (ref. 49) is available at https://huggingface.co/datasets/liupf/ChEBI-20-MM.
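
A minimal sketch of pulling the dataset into Python, assuming the third-party Hugging Face `datasets` package is installed and the machine has network access. `load_dataset` is that library's real entry point; the helper names `load_chebi20mm` and `make_pairs`, the column names `SMILES` and `description`, and the split name `train` are assumptions to check against the dataset card before use.

```python
from typing import Dict, Iterable, List, Tuple


def load_chebi20mm():
    """Fetch ChEBI-20-MM from the Hugging Face Hub.

    Requires the third-party `datasets` package and network access. The import
    is done lazily so that `make_pairs` below can be used without it.
    """
    from datasets import load_dataset

    return load_dataset("liupf/ChEBI-20-MM")


def make_pairs(rows: Iterable[Dict[str, str]], src: str, dst: str) -> List[Tuple[str, str]]:
    """Build (input, target) pairs for a src -> dst translation task.

    Rows missing either field, or holding an empty value, are skipped.
    Column names are assumptions; inspect `dataset.column_names` first.
    """
    pairs = []
    for row in rows:
        a, b = row.get(src), row.get(dst)
        if a and b:
            pairs.append((a, b))
    return pairs


if __name__ == "__main__":
    # Offline demo with hand-written rows. With `datasets` installed, something like
    # make_pairs(load_chebi20mm()["train"], "SMILES", "description") would apply the
    # same logic to real rows (split and column names assumed).
    rows = [
        {"SMILES": "CCO", "description": "ethanol (placeholder caption)"},
        {"SMILES": "C", "description": ""},
    ]
    print(make_pairs(rows, "SMILES", "description"))
```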

Code availability

The open-source project repository is available via Zenodo (https://doi.org/10.5281/zenodo.14293309; ref. 50) and via GitHub (https://github.com/AI-HPC-Research-Team/SLM4Mol). The code is available for non-commercial use.

References

1. Creswell, A. et al. Generative adversarial networks: an overview. IEEE Signal Process. Mag. 35, 53–65 (2018).
2. Xu, M. et al. GeoDiff: a geometric diffusion model for molecular conformation generation. In Proc. International Conference on Learning Representations 2181 (ICLR, 2022).
3. Heikamp, K. & Bajorath, J. Support vector machines for drug discovery. Expert Opin. Drug Discov. 9, 93–104 (2014).
4. Xu, K., Hu, W., Leskovec, J. & Jegelka, S. How powerful are graph neural networks? In Proc. International Conference on Learning Representations 9104–9121 (ICLR, 2019).
5. Kipf, T. N. & Welling, M. Semi-supervised classification with graph convolutional networks. In Proc. International Conference on Learning Representations 2713–2727 (ICLR, 2017).
6. Veličković, P. et al. Graph attention networks. In Proc. International Conference on Learning Representations 2920–2932 (ICLR, 2018).
7. Vaswani, A. et al. Attention is all you need. In Advances in Neural Information Processing Systems (2017).
8. Schulman, J. et al. ChatGPT: optimizing language models for dialogue. OpenAI Blog https://openai.com/index/chatgpt/ (2022).
9. OpenAI. GPT-4 technical report. Preprint at https://arxiv.org/abs/2303.08774 (2023).
10. Du, Y., Fu, T., Sun, J. & Liu, S. MolGenSurvey: a systematic survey in machine learning models for molecule design. Preprint at https://arxiv.org/abs/2203.14500 (2022).
11. Fang, Y., Zhang, Q., Chen, Z., Fan, X. & Chen, H. Knowledge-informed molecular learning: a survey on paradigm transfer. In Proc. International Conference on Knowledge Science, Engineering and Management 86–98 (Springer Nature Singapore, 2024).
12. Guo, T. et al. in Advances in Neural Information Processing Systems Vol. 36 (eds Oh, A., Naumann, T., Globerson, A., Saenko, K., Hardt, M. & Levine, S.) 59662–59688 (Curran Associates, 2023).
13. Fang, Y. et al. Mol-Instructions: a large-scale biomolecular instruction dataset for large language models. In Proc. Twelfth International Conference on Learning Representations https://openreview.net/pdf?id=Tlsdsb6l9n (ICLR, 2024).
14. Weininger, D. SMILES, a chemical language and information system. 1. Introduction to methodology and encoding rules. J. Chem. Inf. Comput. Sci. 28, 31–36 (1988).
15. Heller, S., McNaught, A., Stein, S., Tchekhovskoi, D. & Pletnev, I. InChI—the worldwide chemical structure identifier standard. J. Cheminform. 5, 7 (2013).
16. Krenn, M., Häse, F., Nigam, A., Friederich, P. & Aspuru-Guzik, A. Self-referencing embedded strings (SELFIES): a 100% robust molecular string representation. Mach. Learn. Sci. Technol. 1, 045024 (2020).
17. Landrum, G. et al. RDKit: a software suite for cheminformatics, computational chemistry, and predictive modeling. Greg Landrum 8, 5281 (2013).
18. Long, G. L. & Winefordner, J. D. Limit of detection. A closer look at the IUPAC definition. Anal. Chem. 55, 712A–724A (1983).
19. Wu, Z. et al. MoleculeNet: a benchmark for molecular machine learning. Chem. Sci. 9, 513–530 (2018).
20. Luo, R. et al. BioGPT: generative pre-trained transformer for biomedical text generation and mining. Brief. Bioinform. 23, bbac409 (2022).
21. Edwards, C. et al. Translation between molecules and natural language. In Proc. 2022 Conference on Empirical Methods in Natural Language Processing 375–413 (2022).
22. Liu, Z. et al. MolCA: molecular graph-language modeling with cross-modal projector and uni-modal adapter. In Proc. 2023 Conference on Empirical Methods in Natural Language Processing 15623–15638 (Association for Computational Linguistics, 2023).
23. Radford, A. et al. Language models are unsupervised multitask learners. OpenAI Blog https://cdn.openai.com/better-language-models/language-models.pdf (2019).
24. Devlin, J., Chang, M.-W., Lee, K. & Toutanova, K. BERT: pre-training of deep bidirectional transformers for language understanding. In Proc. NAACL-HLT 4171–4186 (2019).
25. Liu, Y. et al. RoBERTa: a robustly optimized BERT pretraining approach. Preprint at https://arxiv.org/abs/1907.11692 (2019).
26. Beltagy, I., Lo, K. & Cohan, A. SciBERT: a pretrained language model for scientific text. In Proc. 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP) 3615–3620 (Association for Computational Linguistics, 2019).
27. Chithrananda, S., Grand, G. & Ramsundar, B. ChemBERTa: large-scale self-supervised pretraining for molecular property prediction. Preprint at https://arxiv.org/abs/2010.09885 (2020).
28. Liu, Z. et al. MolXPT: wrapping molecules with text for generative pre-training. In Proc. 61st Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers) 1606–1616 (Association for Computational Linguistics, 2023).
29. Li, J. et al. Empowering molecule discovery for molecule-caption translation with large language models: a ChatGPT perspective. IEEE Trans. Knowl. Data Eng. 36, 6071–6083 (2024).
30. Raffel, C. et al. Exploring the limits of transfer learning with a unified text-to-text transformer. J. Mach. Learn. Res. 21, 5485–5551 (2020).
31. Lewis, M. et al. BART: denoising sequence-to-sequence pre-training for natural language generation, translation, and comprehension. In Proc. 58th Annual Meeting of the Association for Computational Linguistics 7871–7880 (Association for Computational Linguistics, 2020).
32. Kim, S. et al. PubChem 2023 update. Nucleic Acids Res. 51, D1373–D1380 (2023).
33. Su, B. et al. A molecular multimodal foundation model associating molecule graphs with natural language. Preprint at https://arxiv.org/abs/2209.05481 (2022).
34. Luo, Y., Yang, K., Hong, M., Liu, X. & Nie, Z. MolFM: a multimodal molecular foundation model. Preprint at https://arxiv.org/abs/2307.09484 (2023).
35. Liu, P., Ren, Y., Tao, J. & Ren, Z. GIT-Mol: a multi-modal large language model for molecular science with graph, image, and text. Comput. Biol. Med. 171, 108073 (2024).
36. Li, J., Li, D., Savarese, S. & Hoi, S. BLIP-2: bootstrapping language-image pre-training with frozen image encoders and large language models. In Proc. International Conference on Machine Learning 19730–19742 (PMLR, 2023).
37. Krasnov, L., Khokhlov, I., Fedorov, M. & Sosnin, S. Transformer-based artificial neural network for the conversion between chemical notations. Sci. Rep. 11, 14798 (2021).
38. Mao, J. et al. Transformer-based molecular generative model for antiviral drug design. J. Chem. Inf. Model. 64, 2733–2745 (2023).
39. Zeng, Z., Yao, Y., Liu, Z. & Sun, M. A deep-learning system bridging molecule structure and biomedical text with comprehension comparable to human professionals. Nat. Commun. 13, 862 (2022).
40. Edwards, C., Zhai, C. & Ji, H. Text2Mol: cross-modal molecule retrieval with natural language queries. In Proc. 2021 Conference on Empirical Methods in Natural Language Processing 595–607 (Association for Computational Linguistics, 2021).
41. Rajan, K., Zielesny, A. & Steinbeck, C. DECIMER 1.0: deep learning for chemical image recognition using transformers. J. Cheminform. 13, 61 (2021).
42. Khokhlov, I., Krasnov, L., Fedorov, M. V. & Sosnin, S. Image2SMILES: transformer-based molecular optical recognition engine. Chem. Methods 2, e202100069 (2022).
43. Yi, J. et al. MICER: a pre-trained encoder-decoder architecture for molecular image captioning. Bioinformatics 38, 4562–4572 (2022).
44. Xu, Z., Li, J., Yang, Z., Li, S. & Li, H. SwinOCSR: end-to-end optical chemical structure recognition using a Swin transformer. J. Cheminform. 14, 41 (2022).
45. Liu, Z. et al. Swin transformer: hierarchical vision transformer using shifted windows. In Proc. IEEE/CVF International Conference on Computer Vision 10012–10022 (2021).
46. Pei, Q. et al. BioT5: enriching cross-modal integration in biology with chemical knowledge and natural language associations. In Proc. 2023 Conference on Empirical Methods in Natural Language Processing 1102–1123 (Association for Computational Linguistics, 2023).
47. Liu, S. et al. Multi-modal molecule structure–text model for text-based retrieval and editing. Nat. Mach. Intell. 5, 1447–1457 (2023).
48. Pei, Q. et al. BioT5+: towards generalized biological understanding with IUPAC integration and multi-task tuning. In Findings of the Association for Computational Linguistics: ACL 2024 1216–1240 (Association for Computational Linguistics, 2024).
49. Liu, P. ChEBI-20-MM Dataset (revision 7d44959). Hugging Face https://huggingface.co/datasets/liupf/ChEBI-20-MM (2024).
50. Liu, P. & Ren, Z. AI-HPC-Research-Team/SLM4Mol: 1.0. Zenodo https://doi.org/10.5281/zenodo.14293309 (2024).

Acknowledgements

This work is supported by grants from the National Natural Science Foundation of China (grant nos. 62372484 and 62172456), the Major Key Project of PCL (PCL2021A13) and Pengcheng Cloud Brain.

Author information

Contributions

P.L. was involved in the conceptualization, methodology, data curation, model training and writing (original draft). J.T. was involved in the funding acquisition and writing (review and editing). Z.R. was involved in the conceptualization, formal analysis, supervision, funding acquisition and writing (review and editing).

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Nature Machine Intelligence thanks Leroy Cronin, Karl Grantham and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data

Extended Data Fig. 1 Visualization of data source suitability and chemical space diversity.

The top section analyzes molecular text length distributions, tokenizer-processed lengths and representative scaffolds. The bottom section visualizes molecular property distributions and their interrelationships.
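
Extended Data Fig. 1 contrasts raw text lengths with tokenizer-processed lengths. The sketch below shows that contrast for SMILES strings, assuming a regex tokenizer of the kind commonly used for SMILES (popularized by the Molecular Transformer) as a stand-in; it is not necessarily the tokenizer used by any model in the paper, and `tokenize_smiles` and `length_stats` are hypothetical helper names.

```python
import re
from statistics import mean
from typing import Dict, List

# Commonly used SMILES regex: bracket atoms, two-letter halogens, aromatic atoms,
# bonds, branches and ring-closure digits each become one token.
SMILES_TOKEN = re.compile(
    r"(\[[^\]]+]|Br?|Cl?|N|O|S|P|F|I|b|c|n|o|s|p|\(|\)|\.|=|#|-|\+|\\|/|:|~|@|\?|>|\*|\$|%[0-9]{2}|[0-9])"
)


def tokenize_smiles(smiles: str) -> List[str]:
    """Split a SMILES string into tokens; raise ValueError if any character is unmatched."""
    tokens = SMILES_TOKEN.findall(smiles)
    if "".join(tokens) != smiles:
        raise ValueError(f"untokenizable SMILES: {smiles!r}")
    return tokens


def length_stats(smiles_list: List[str]) -> Dict[str, float]:
    """Compare mean character length with mean (and max) token length."""
    char_lens = [len(s) for s in smiles_list]
    tok_lens = [len(tokenize_smiles(s)) for s in smiles_list]
    return {
        "mean_chars": mean(char_lens),
        "mean_tokens": mean(tok_lens),
        "max_tokens": max(tok_lens),
    }


if __name__ == "__main__":
    sample = ["CCO", "CC(=O)O", "ClCCl", "c1ccccc1", "[NH4+]"]
    print(tokenize_smiles("ClCCl"))  # ['Cl', 'C', 'Cl']
    print(length_stats(sample))
```

Multi-character atoms such as `Cl` and bracket atoms such as `[NH4+]` each collapse to a single token, which is why token counts can fall well below character counts.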

Supplementary information

Supplementary Figs. 1 and 2, Tables 1–10 and Discussion.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Liu, P., Tao, J. & Ren, Z. A quantitative analysis of knowledge-learning preferences in large language models in molecular science. Nat. Mach. Intell. (2025). https://doi.org/10.1038/s42256-024-00977-6

- Received: 06 February 2024
- Accepted: 18 December 2024
- Published: 17 January 2025
- DOI: https://doi.org/10.1038/s42256-024-00977-6