Artificial intelligence may soon make its mark on weather forecasting. A new study shows what it could bring to meteorology.

Meteorologists like our Weatherfirst team already rely on a lot of technology. What could this mean for how they go about forecasting?

Meteorologists have a lot of tools at their disposal. They all come with their strengths and weaknesses.

One of those tools is simply weather observation data. Meteorologists gather all kinds of atmospheric data, from ground sensors and weather radar to upper-atmosphere readings from weather balloons and space-based satellites.

However, that data is mostly focused over land, leaving critical gaps over the vast oceans. This starts to introduce major limitations for weather prediction as a whole.

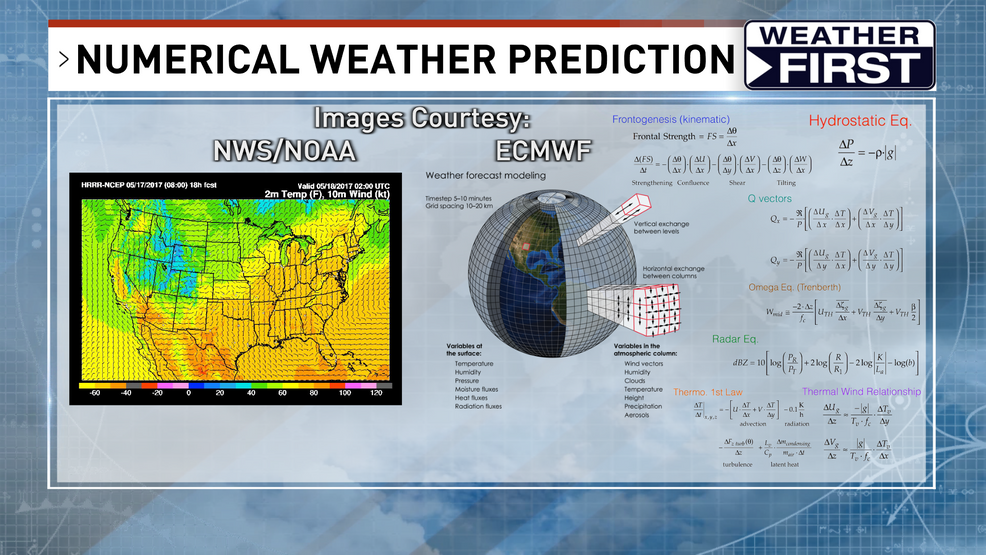

Current observations are great, but they can only go so far for forecasting. That is where meteorologists use numerical weather prediction models. These take in all of that atmospheric data and plug it into multiple complex equations, calculating outcomes for all sorts of things like temperatures, winds, pressure, cloud cover, and rain! These models have been the workhorse of forecasting since computer modeling began in the ’50s.

All of that complexity comes with limitations. Running these models takes up lots of computing power and time, which limits how often and where these forecasts can be done. Fortunately, many governments run these simulations on high-end supercomputers and release the outputs of those models freely to the public.

Numerical weather prediction models, known as deterministic models when they are run as a single forecast, range from large-scale global models, which have a resolution of about 17 miles by 17 miles and run a few times a day, down to small-scale models, which have a resolution of 2 miles by 2 miles and in some cases down to half a mile! These smaller-scale models can update every hour and are great for forecasting thunderstorms locally. However, the tradeoff here is that computing power can only handle so much, so their scope is limited to just the United States, or wherever the focus needs to be, whereas large-scale models can simulate the entire world.
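To put that tradeoff in numbers, here is a rough back-of-the-envelope sketch. It assumes Earth's surface is about 196.9 million square miles and pretends every grid cell is a perfect square of equal size, which real models do not do. The function name `grid_cells` is made up for this illustration.

```python
# Rough estimate only: real model grids are not made of equal-area squares,
# and models also stack many vertical levels on top of each surface cell.
EARTH_SURFACE_SQ_MILES = 196.9e6  # approximate total surface area of Earth

def grid_cells(cell_width_miles, area_sq_miles=EARTH_SURFACE_SQ_MILES):
    """Approximate number of square cells needed to cover the given area."""
    return area_sq_miles / (cell_width_miles ** 2)

global_cells = grid_cells(17.0)  # a global model at about 17 miles
fine_cells = grid_cells(2.0)     # the same globe at 2 miles
ratio = fine_cells / global_cells

print(f"17-mile grid: about {global_cells:,.0f} cells")
print(f"2-mile grid:  about {fine_cells:,.0f} cells")
print(f"The finer grid needs about {ratio:.0f} times more cells")
```

Under these assumptions, covering the whole globe at 2 miles takes roughly 72 times as many cells as covering it at 17 miles, before counting vertical levels or the shorter time steps a finer grid usually needs. That is one way to see why the small-scale models stick to a region.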

Another limiting factor of these deterministic models is just that: they’re deterministic, meaning they run the simulation just once. If there are any errors in the equations, or the data isn’t good enough, those errors quickly grow. That is part of the reason meteorology isn’t an exact science.

Meteorologists can reduce some of those errors by using an ensemble. An ensemble runs a single model multiple times, changing some of the initial data just a bit each time to account for errors in the data, then averages the outputs. This is where we get things like “Spaghetti Models.” Many meteorologists think ensembles could be the future of numerical weather forecasting, as they have proven to work well, especially for large-scale patterns. Because of all the extra model runs, an ensemble takes up even more time and computing power, and typically runs only twice per day.
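The ensemble idea can be sketched in a few lines of Python. This is only a toy: `toy_model` is a hypothetical stand-in that uses a simple chaotic equation (the logistic map), not a real weather model, and the member count and perturbation size are arbitrary choices for illustration.

```python
import random
import statistics

def toy_model(x0, steps=20, r=3.9):
    """Hypothetical stand-in for a weather model: a simple chaotic equation."""
    x = x0
    for _ in range(steps):
        x = r * x * (1.0 - x)
    return x

def run_ensemble(x0, members=20, perturbation=0.001, seed=0):
    """Run the same model many times from slightly different starting data."""
    rng = random.Random(seed)  # fixed seed so the example is repeatable
    outputs = []
    for _ in range(members):
        perturbed_start = x0 + rng.uniform(-perturbation, perturbation)
        outputs.append(toy_model(perturbed_start))
    return outputs

outputs = run_ensemble(0.5)
ensemble_mean = statistics.mean(outputs)     # the "average" forecast
ensemble_spread = statistics.stdev(outputs)  # how much the members disagree

print(f"Ensemble mean:   {ensemble_mean:.3f}")
print(f"Ensemble spread: {ensemble_spread:.3f}")
```

Each member in `outputs` is like one strand on a spaghetti plot. A small spread suggests the members agree and the forecast is more trustworthy, while a large spread signals more uncertainty.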

Another trick for reducing potential errors is to look at multiple runs of a single model, average the outputs, and filter them through climatological data. This method can filter out biases a model may have from the kinds of equations or parameters it uses. It is known as MOS, or Model Output Statistics. It works pretty well but is limited by how it’s “trained,” or what data the outputs are filtered through. It takes a lot of work and isn’t always reliable.
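Here is a minimal sketch of the bias-correction idea behind MOS. It fits a straight line between past forecasts and what was actually observed, then uses that line to adjust a new forecast. The temperatures below are made up for illustration, the function names are hypothetical, and operational MOS uses many more predictors than this single-variable version.

```python
def fit_linear_correction(forecasts, observations):
    """Least-squares line: observation is roughly slope * forecast + intercept."""
    n = len(forecasts)
    mean_f = sum(forecasts) / n
    mean_o = sum(observations) / n
    covariance = sum((f - mean_f) * (o - mean_o)
                     for f, o in zip(forecasts, observations))
    variance = sum((f - mean_f) ** 2 for f in forecasts)
    slope = covariance / variance
    intercept = mean_o - slope * mean_f
    return slope, intercept

def apply_correction(raw_forecast, slope, intercept):
    """Adjust a raw model forecast using the fitted line."""
    return slope * raw_forecast + intercept

# Made-up training data: this pretend model runs 2 degrees too warm.
past_forecasts = [60.0, 65.0, 70.0, 75.0, 80.0]
past_observations = [58.0, 63.0, 68.0, 73.0, 78.0]

slope, intercept = fit_linear_correction(past_forecasts, past_observations)
corrected = apply_correction(72.0, slope, intercept)
print(f"Raw forecast: 72.0, corrected forecast: {corrected:.1f}")
```

The correction is only as good as the past data it was fitted on, which is the "training" limitation mentioned above.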

Stepping away from numerical forecasting, meteorologists can use a different type of modeling technique called an analog. This works by comparing a model’s forecast to historical events and, based on the patterns and outcomes of those events, extrapolating what may occur in the current forecast. It is similar to stock investors using past events to anticipate the market.
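The analog approach can be sketched as a simple "find the closest match" search. Everything in this example is hypothetical: the historical cases, the three pressure readings that stand in for a weather pattern, and the rainfall totals are invented to show the idea, and real analog systems compare far richer data.

```python
import math

def distance(pattern_a, pattern_b):
    """Straight-line (Euclidean) distance between two patterns."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(pattern_a, pattern_b)))

def find_analogs(forecast_pattern, history, k=2):
    """Return the k historical events whose patterns are closest to the forecast."""
    ranked = sorted(history,
                    key=lambda event: distance(forecast_pattern, event["pattern"]))
    return ranked[:k]

# Invented historical cases: pressure (mb) at three locations, plus the outcome.
history = [
    {"name": "case A", "pattern": [1012.0, 1008.0, 1004.0], "rain_inches": 1.2},
    {"name": "case B", "pattern": [1020.0, 1021.0, 1019.0], "rain_inches": 0.0},
    {"name": "case C", "pattern": [1011.0, 1007.0, 1005.0], "rain_inches": 0.8},
    {"name": "case D", "pattern": [1000.0, 998.0, 996.0], "rain_inches": 2.5},
]

forecast_pattern = [1012.0, 1007.0, 1004.0]  # pretend model output for tomorrow
analogs = find_analogs(forecast_pattern, history, k=2)
expected_rain = sum(event["rain_inches"] for event in analogs) / len(analogs)

print("Closest past events:", [event["name"] for event in analogs])
print(f"Rain suggested by the analogs: {expected_rain:.1f} inches")
```

With only four cases this is easy to check by eye. With decades of global data, the matching step is the part that gets handed off to a computer.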

Analogs are where machine learning, a branch of artificial intelligence, comes in, mostly to recognize and compare patterns. Comparing decades of weather data with the latest weather model output is virtually impossible to do by hand, so researchers developed this technique alongside machine learning.

Meteorologists have used AI in analogs for quite a while, and it has proven quite successful. In some cases, as this latest publication shows, these models can outperform some of our best weather models, especially when it comes to large-scale global systems. Another huge advantage is that, because it isn’t running so many computations, an AI model can run faster and use a fraction of the processing power, making weather modeling much more accessible.

Like everything in running simulations, there is some give and take. Because AI models and analogs only look at patterns in the data instead of solving physics-based equations the way numerical models do, they tend to struggle at smaller scales.

The latest publication presents the newest model, GraphCast from Google DeepMind, which has a spatial resolution of only 17 miles by 17 miles, the same resolution as the global American model, the GFS. Plus, it relies on data from the European global model, meaning it’s limited by that model’s strengths and weaknesses.

This latest release comes from just one of three big companies working with the European Centre for Medium-Range Weather Forecasts (ECMWF) on machine learning models. Besides Google DeepMind’s GraphCast, NVIDIA has its own version called FourCastNet, and Huawei has Pangu-Weather.

There are links to some of these research papers below if you’d like to learn more. Only time will tell what the future of weather forecasting will be! These newest developments could be excellent tools to better protect the public in our ever-changing climate.

Google DeepMind — GraphCast — Research Paper

ECMWF — How AI models are transforming weather forecasting: a showcase of data-driven systems

ECMWF — The rise of machine learning in weather forecasting