The sun’s poles, which have never been seen before, have been revealed for the first time by what experts are calling a “virtual observatory.”

Observations of the sun are typically limited to side-on views. Even the satellite missions sent to study the sun have mainly been constrained to an equatorial perspective.

Researchers fed two-dimensional images of the sun into an artificial intelligence (AI), which then translated them into a 3D reconstruction showing the star’s surface changing over time.

Once the AI had learned how to accurately reconstruct the sun’s past appearance for those areas observed by satellite, the team had it extrapolate the appearance of the poles, too.

The result, the scientists explained, provides a more complete image of our host star and will help us understand how its radiation is likely to impact technologies like power grids, satellites, and radio communications.

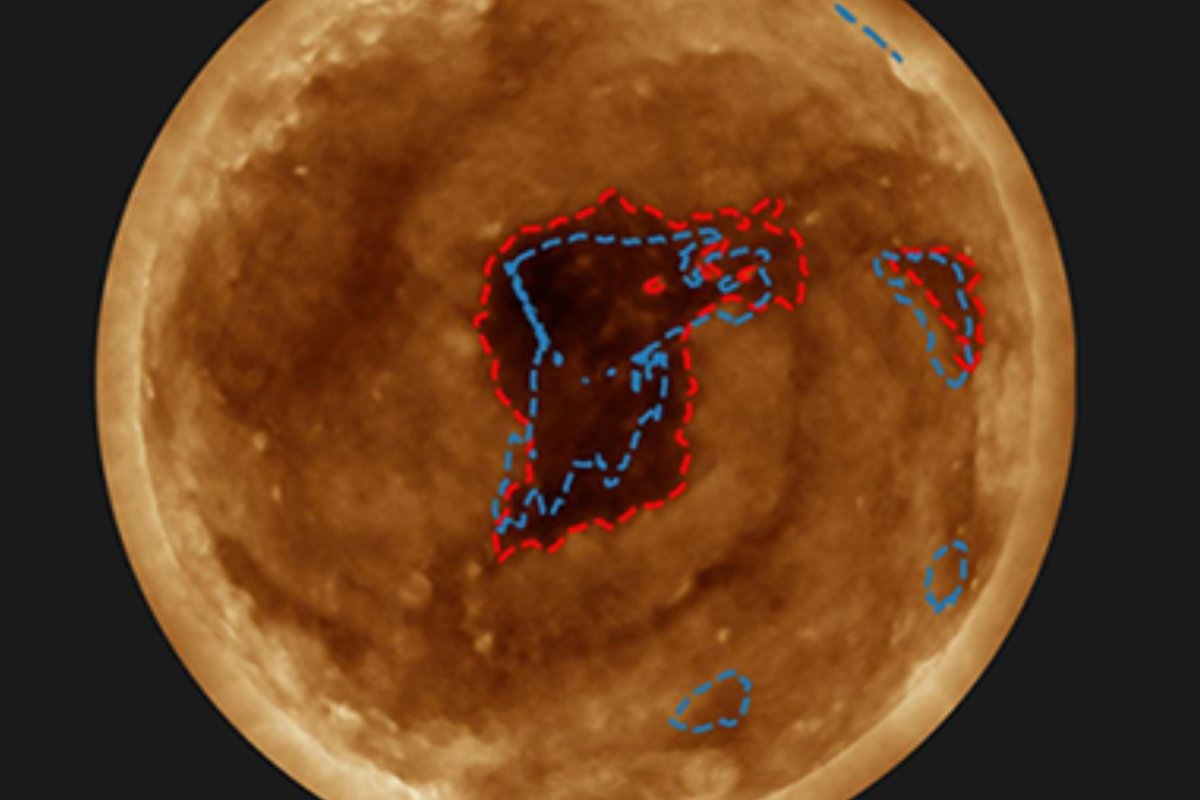

Tremblay et al. / National Center for Atmospheric Research

The study was undertaken by solar physicist Benoit Tremblay of the National Center for Atmospheric Research (NCAR) in Colorado and his colleagues.

“The best way to see the solar poles is obviously to send more satellites, but that is very expensive,” Tremblay said in a statement. “By taking the information we do have, we can use AI to create a virtual observatory and give us a pretty good idea of what the poles look like for a fraction of the cost.”

To develop their model of the sun, the researchers turned to artificial neural networks known as “Neural Radiance Fields” (NeRFs), which are capable of taking two-dimensional images and turning them into complex three-dimensional scenes.

The first challenge was that NeRFs had never been used on extreme ultraviolet images of plasma, the observations that astronomers make to study the sun’s atmosphere and capture flares and other solar eruptions.

Tremblay and his colleagues therefore had to adapt the neural networks to work with the physical reality of our star, creating a new system they have dubbed Sun Neural Radiance Fields, or “SuNeRFs.”

The SuNeRFs were trained on a time series of images captured by three satellites that had observed the sun in extreme ultraviolet from different angles.

The team cautioned that the model of the sun produced by their AI is only an “educated guess” but nevertheless forms a useful approximation that could help study the sun and inform future missions sent to study our star up close.

“Using AI in this way allows us to leverage the information we have, but then break away from it and change the way we approach research,” Tremblay said. “AI changes fast and I’m excited to see how advancements improve our models and what else we can do with AI.”

With their initial study complete, the team are now looking to use NCAR’s Derecho supercomputer to increase the resolution of their model.

They are also looking to explore new AI methods to improve the accuracy of the inferences about the appearance of the solar poles, and to use similar approaches to model Earth’s atmosphere.
