Abstract

We asked whether, in the first year of life, the infant brain can support the dynamic crossmodal interactions between vision and somatosensation that are required to represent peripersonal space. Infants aged 4 (n = 20, 9 female) and 8 (n = 20, 10 female) months were presented with a visual object that moved towards their body or receded away from it. This was presented in the bottom half of the screen and not fixated upon by the infants, who were instead focusing on an attention getter at the top of the screen. The visual moving object then disappeared and was followed by a vibrotactile stimulus occurring later in time and in a different location in space (on their hands). The 4-month-olds’ somatosensory evoked potentials (SEPs) were enhanced when tactile stimuli were preceded by unattended approaching visual motion, demonstrating that the dynamic visual-somatosensory cortical interactions underpinning representations of the body and peripersonal space begin early in the first year of life. Within the 8-month-olds’ sample, SEPs were increasingly enhanced by (unexpected) tactile stimuli following receding visual motion as age in days increased, demonstrating changes in the neural underpinnings of the representations of peripersonal space across the first year of life.

Introduction

Acting in the environment and perceiving one’s place in it requires the ability to represent the dynamic relations between sensory events originating in external space (often audiovisual features of objects or people) and sensory events on the body (often somatosensory stimuli)1,2,3. The last 20 years have seen significant advances in our understanding of this ability to represent events in peripersonal space4,5. Given the dynamic nature of peripersonal spatial interactions—where events in external (visual and auditory) space influence subsequent somatosensory events and vice versa—more recent investigations have considered the role of sensory predictions6,7,8 in underpinning human adults’ representations of peripersonal space1,9,10,11. Whereas a handful of studies have investigated the development of multisensory space in human infancy12,13, no research has yet examined the development of an ability to perceive the dynamic multisensory interactions across spatial and temporal gaps that underpin the perception of sensory events characterising the interaction of the body with its environment. Here we begin to address this by reporting the findings of an investigation into the influences, in 4- and 8-month-old human infants, of visual motion in extrapersonal space (towards or away from the body) on the processing of somatosensory stimulation occurring on the body after a temporal interval.

Over the years there have been a number of attempts to trace the origins of human infants’ ability to perceive sensory events in relation to the self14,15,16,17,18,19,20. Orioli et al.19,20 have recently demonstrated that even newborns can differentiate visual events based on their motion direction relative to the body, showing a visual preference for objects moving towards them versus away from them. Other studies have established that from early in the first year infants are sensitive to temporal and spatial multisensory contingencies between visual, auditory and tactile stimuli that are likely to play a fundamental role in peripersonal space representations12,21,22,23,24,25,26. What remains unclear is the extent to which early sensitivity to such multisensory contingencies can support infants’ representations of dynamic events in peripersonal space. Such a representational ability requires the encoding of visual-tactile correspondences across spatiotemporal gaps (e.g., when seeing an object moving in extrapersonal space that subsequently touches the body)9,10.

Recent studies in human adults have shown that they are sensitive to crossmodal spatiotemporal dynamics between external objects and the body. In fact, a number of authors now argue that the special status of representations of peripersonal spatial events in the brain and behaviour (shown, e.g., in speeded responses to stimuli close to the body) may be explained by the predictive mechanisms at play when somatosensory processing is modulated by prior visual, auditory or audiovisual stimuli that move towards the body1,11,27. Evidence for this account comes from a number of studies demonstrating that responses to tactile stimuli can be modulated by predictive but spatially or temporally distant stimuli in a different sensory modality (i.e. vision)9,10. The key novelty of these findings is their focus on crossmodal interactions via predictive relations between visual and somatosensory events, which cannot be mediated via exogenous crossmodal effects due to colocation or synchrony between the visual and tactile stimuli28,29. These findings support the existence of predictive mechanisms using visual motion cues to make judgments about the time and location of an impending tactile stimulus and enhancing tactile processing at the time and location of impending contact10.

A precursory requirement for making predictions about tactile events from preceding visual cues is a sensitivity to visual-tactile crossmodal interactions across spatiotemporal gaps. To investigate the developmental origins of this ability, we presented infants with tactile stimuli on their hands and examined how prior moving visual stimuli modulated subsequent somatosensory evoked potentials (SEPs) recorded from the scalp using electroencephalography (EEG). More specifically, we examined whether somatosensory evoked potentials recorded from the scalp were modulated by the direction of motion of an earlier presented unattended visual object (towards vs away from the body). We decided to convey motion information using visual stimuli because, to sighted humans, these provide the richest and most continuous dynamic spatiotemporal information about objects’ movements with respect to the self, making visuotactile interactions the most important in supporting the development of the ability to represent the relation between objects in external space and the body. Additionally, the choice of visuotactile stimuli is consistent with previous adult research9,10. We chose to include in the study two groups of infants aged 4 and 8 months: these age groups were chosen in light of the relevant developmental changes taking place between 4 and 8 months of life. Infants’ ability to localise touch in relation to external spatial coordinates develops between 4 and 6 months30 and, relatedly, postural information begins to influence the neural correlates of infants’ tactile perception after 6.5 months of life31. Additionally, infants’ ability to reach for and handle objects becomes established from around 5 months32. Given the intrinsic link between acting on the environment and perceiving body-related motion in it, we anticipated that the acquisition of sensorimotor experience between 4 and 8 months of age would lead to developmental changes in infants’ visual-tactile processing.

Given the high adaptive value of perceiving and predicting the contact of visual objects with the body, it would be reasonable to expect that the mechanisms supporting it develop early in life12. At the same time, multisensory postnatal experience likely plays an important role in shaping the ways in which infants come to integrate visual stimuli specifying imminent contact and subsequent tactile stimuli taking place at the expected time and location of contact. This is particularly relevant with regards to visual moving stimuli, which, unlike auditory ones, can only be experienced by infants in their postnatal life. For these reasons, and in light of the aforementioned important developmental changes taking place during the first year of life30,31,32, we expected to find evidence of developmental changes in the visual modulation of touch in both of the age groups involved in this study. Predicted perceptual outcomes are known to enhance early perceptual components in event-related potentials (ERPs) gathered from 1-year-old infants33. Based on this, we expected that any modulation of SEPs observed in either age group would be in the form of an enhanced response to the tactile stimulus following approaching motion. This hypothesised pattern of results would also be consistent with the finding that when adults anticipate a tickling sensation they show enhanced activity in contralateral primary somatosensory cortex34.

In this study, we presented infants with tactile stimuli on their hands preceded by dynamic visual stimuli on a screen, rendered to specify 3D trajectories either approaching their hands or moving away from them. The dynamic visual stimuli were presented in the bottom half of the screen, while an attention-getting visual stimulus was presented at the top of the screen. When triggering the presentation of the experimental stimuli, we ensured that the infants were focusing on the attention getter. The approaching display showed a small ball approaching the participants’ hands and disappearing halfway through its trajectory from its starting point to the infant’s hands, while the receding display was the approaching sequence played backwards. After an interval, ensuring that there was no spatial nor temporal proximity between the visual and the tactile stimuli, the infants felt, on 50% of the trials, a tactile stimulus on both hands, which were held at the expected location of contact as signalled by the approaching visual motion trajectory. We recorded the infants’ brain activity throughout the study using EEG and analysed the SEPs measured in response to the tactile stimuli. We calculated a difference waveform between the trials with and without the tactile stimulation to measure and compare purely the somatosensory responses and ensure that any event related electrical activity on the scalp that was driven purely by the visual elements of the stimulation, common across all trials, was removed by the subtraction. We then compared the difference waveforms for the approaching vs the receding visual motion conditions, to explore the influence of unattended visual motion direction on the processing of a subsequent tactile stimulus on the body.

Results

To compare the responses to the tactile stimulus in the approaching vs receding visual motion conditions we used two complementary analysis strategies. Firstly, we ran a sample-point by sample-point simulation, which allowed us to analyse the full time-course of the response controlling for the correlation between consecutive sample points. The reason for running this analysis, which is common to other studies investigating infant SEPs31,35, lies in the limited number of reference studies available to define a-priori the latency of the components of interest. Secondly, we looked more specifically at each of the prominent features identifiable in the grand averaged waveforms by comparing the mean individual amplitude of the response between conditions. This analysis allowed us to further reconfirm the findings of the simulation and at the same time to complement them, given that sample-point simulations are insensitive to waveform differences occurring in brief periods during the ERP epoch.

Because SEP components change significantly in amplitude and latency during the first year of life36,37, we treated the responses of the 4- and the 8-month-old infants separately.

4-month-olds

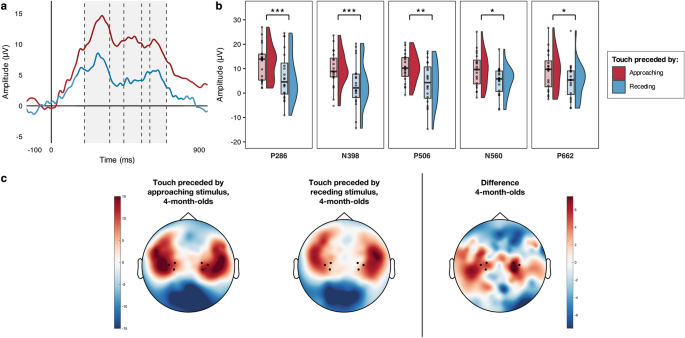

First, we ran simulations to determine whether any differences between conditions in the 4-month-olds SEPs were statistically reliable on a sample-point by sample-point basis. This simulation approach examined differences in the SEPs at each sample point between 100 ms prior to the tactile stimulus onset and 900 ms after it, correcting for the auto-correlation of consecutive sample points38. The simulation generated 1000 random datasets with the same level of auto-correlation of the observed data, as well as the same number of participants and sample points. Two-tailed one sample t-tests comparing the SEP difference waves (by Condition) vs. zero (α = 0.05, uncorrected) were applied to the simulated data at each time point. In each of the 1000 simulations the longest sequence of consecutive significant t-test outcomes was computed. We then used the 95th percentile of that simulated distribution of “longest sequence lengths” to determine where a sequence of differences in the SEP by Condition were statistically reliable. Here the simulation identified as statistically reliable any sequences of consecutive significant t-tests longer than 220 ms, and thus highlighted a statistically reliable sequence between 202 and 700 ms after the onset of the tactile stimulus (Fig. 1a). This showed, in agreement with our hypotheses, a larger SEP in response to the tactile stimuli following approaching vs. receding motion.

Modulation of 4-month-old infants’ SEPs by the direction (approaching vs receding) of prior unattended visual motion. (a) Grand average SEPs across hemispheres; the statistically reliable difference identified by the sample-point by sample-point analysis is indicated by the light grey shading; dashed lines separate the components that were subjected to statistical comparison. (b) Voltage differences in the grand averaged mean individual amplitude of the SEPs in the two conditions for the five components of interest; significant comparisons are indicated (*p < 0.05, **p < 0.01, ***p ≤ 0.001). (c) Grand average topographical representations of the voltage distribution over the scalp in the two conditions between 202 and 700 ms following the tactile stimulus onset (this was the time-window during which the sample-point by sample-point analysis revealed a statistically reliable difference), with a Touch following Approaching motion—Touch following Receding motion difference map to the right; channels averaged for the analyses are highlighted (36, 41, 42 in the left hemisphere and 93, 103, 104 in the right hemisphere).

Next, we examined each of the salient components (i.e., prominent features) in the SEPs, comparing their amplitudes across conditions. As we had no prior information to determine the latency of the components of interest, we isolated the main components identifiable within the time window of significance35 using a collapsed localisers approach39, which reduces the chance of biased measurements by defining the latency of the components to be analysed on the average waveform across conditions. We identified five main components, labelled according to their polarity and peak latency: P286 (202–354 ms); N398 (356–440 ms); P506 (442–548 ms); N560 (550–598 ms); P662 (600–700 ms). Within each component, we ran a paired t-test to compare the mean individual amplitudes of the waveform between the two conditions (Fig. 1b). The comparisons confirmed that in all the identified components the mean individual amplitude of the SEPs was significantly larger when the tactile stimulus had been preceded by approaching motion compared to when it had been preceded by receding motion (Table 1).

8-month-olds

As for the younger age group, we first ran simulations to determine whether any differences between conditions were statistically reliable on a sample-point by sample-point basis. The simulation identified as statistically reliable any sequences of consecutive significant t-tests longer than 106 ms. Based on this criterion, no sequences of statistically reliable differences between conditions were found in the 8-month-olds SEPs (Fig. 2a). It is important to note that this method is insensitive to waveform differences occurring on brief segments of time38, and so, as in our analysis of the 4-month-olds SEPs, we further probed those components (i.e., prominent features) that were identifiable within the time window of differences highlighted in the 4-month-olds group (i.e., between 202 and 700 ms after the tactile stimulus onset). Four components were identified, and labelled according to their polarity and peak latency: P240 (202–310 ms); N362 (312–418 ms); P470 (420–526 ms); N572 (528–636 ms). For each component, t-tests comparing the mean individual amplitude of the response between conditions revealed no significant differences, confirming the findings from the simulations reported above (Table 2 and Fig. 2b).

Modulation of 8-month-old infants’ SEPs by the direction (approaching cs receding) of prior unattended visual motion. (a) Grand average SEPs across hemispheres; dashed lines separate the components that were subjected to statistical comparison. (b) Voltage differences in the grand averaged mean individual amplitude of the SEPs in the two conditions for the four components of interest. (c) Grand average topographical representations of the voltage distribution over the scalp in the two conditions between 202 and 700 ms following tactile stimulus onset (this was the time-window during which the sample-point by sample-point analysis revealed a statistically reliable difference in the 4-month-olds group), with a Touch following Approaching motion—Touch following Receding motion difference map to the right; channels averaged for the analyses are highlighted (41, 46 47 in the left hemisphere and 98, 102, 103 in the right hemisphere).

The absence of any effects of the direction of prior and unattended visual motion on somatosensory processing in 8-month-olds is surprising considering the robust effect of Condition observed in the 4-month-olds. To better understand this developmental change between 4 and 8 months of age, we conducted further exploratory analyses on the 8-month-olds’ data, investigating whether any differences between conditions could be masked by individual variations in the participants’ developmental status. Using age in days as a proxy for developmental status, we fitted 3 linear mixed-effects models (LMMs)40 for each of the four main components identified (P240, N362, P470, N572), including a categorical fixed effect (Condition), a continuous fixed effect (Age in days) and a random effect (the individual Participant). The first model (m1) included only Condition as a fixed effect and Participant as a random effect; the second (m2) added Age as a fixed effect; the third (m3) added the Interaction between Condition and Age. The models were fitted using the R software41, specifically the “lme4” and “lmerTest” packages for LMMs42,43.

Likelihood Ratio Tests (LRTs) were conducted to compare how well the three models explained the data (Table 3). In the first 3 components (P240, N362 and P470), m3 explained the collected data better than any other model. This model included the interaction between Condition and Age, which was significant across all three components [P240: t(18) = 2.662, p = 0.016; N362: t(18) = 2.916, p = 0.009; P470: t(18) = 2.135, p = 0.047]. This showed how, with increasing age in days, infants SEPs changed from an enhanced response to tactile stimuli preceded by approaching visual motion to an enhanced response to tactile stimuli preceded by receding visual motion (Fig. 3a). Additionally, the models highlighted a significant main effect of Condition once Age and the random effect of Participants were taken into account [P240: t(18) = − 2.679, p = 0.015; N362: t(18) = − 2.947, p = 0.009; P470: t(18) = − 2.155, p = 0.045]. In the fourth component (N572), m2, including the fixed effects of Condition and Age, and the random effect of Participant, was the best fit. Reflecting the better fit compared to m1 there was a main effect of Age [t(18) = − 2.215, p = 0.04]: this describes, across both conditions, a decline in the SEPs amplitude with age (Fig. 3a).

Effect of condition and age (in days) on the SEPs of 8-month-old infants. (a) Scatter plots illustrating, for each component of interest, the relation between the mean individual amplitude of the SEPs in each condition and the infants’ age in days, with regression lines (and S.E.M., shaded) for each condition. (b) For illustrative purposes, in light of the results of the LMMs, we plotted the grand averaged SEPs for the younger and the older 8-month-olds (2 groups of 9 infants each, created based on the median age value, 249 days); the plots suggest that the direction of the difference between the SEPs in response to a tactile stimulus following approaching vs receding motion might reverse between the younger and the older 8-month-old infants.

Altogether, these results indicate that the amplitude of 8-month-olds’ SEPs were modulated by whether, prior to the tactile stimulus, they were presented with an unattended visual object approaching them or receding away from them. This was demonstrated via an interaction between the direction of the visual motion and age in days in affecting the amplitude of SEP components. More specifically, the younger 8-month-old participants showed, as did 4-month-olds, a larger response to tactile stimuli preceded by approaching motion, while the older 8-month-olds participants showed the opposite pattern (Fig. 3b).

For completeness, we fitted the same models for the 4-month-old infants. In this group, neither m2 nor m3 significantly improved on the fit of m1, which included only Condition as a fixed effect and Participant as a random effect (Supplementary Table S1). This result, obtained for all components tested, confirms our previous findings that 4-month-old infants demonstrate an enhancement of the response to the tactile stimulus when it was preceded by unattended approaching vs receding visual motion, irrespective of the participants’ age in days (Supplementary Fig. S1).

Discussion

From at least 4 months of age human infants’ cortical somatosensory processing is modulated by the direction of movement relative to the body of a previously presented (and unattended) visual object. Previous studies have already identified that young infants are sensitive to the movement of visual objects with respect to the body19,20,24, and to spatial and temporal correspondences between tactile and visual stimuli on their body21,22,23. However, the current study shows for the first time that young infants can combine these abilities and are thereby sensitive to the dynamic relations between visual and tactile stimuli when these stimuli are presented in different places (in the external space and on the body) and across a temporal gap (in this case 333 ms between the disappearance of a moving visual object on a screen, and the onset of a somatosensory stimulus on the hands). This is important because such an ability is a prerequisite for infants to be able to perceive the multisensory interactions that characterise the dynamic interactions between objects and the body in peripersonal space.

Our findings show that 4-month-olds’ (and younger 8-month-olds’) SEPs are enhanced following approaching visual motion, while older 8-month-olds seem to show the reverse pattern, with larger SEPs in response to tactile stimuli following receding motion. Overall, it is clear that in both 4- and 8-month-old infants visual information specifying motion in relation to the observer (towards or away from their body), even when not fixated upon visually, affects subsequent somatosensory processing. The present findings therefore prompt the striking conclusion that human infants, from as young as 4 months of age, can coordinate multisensory information presented across different locations (the body and the external space) and at different times (i.e., when one stimulus is predictive of the following one). This fundamental multisensory ability is among those that enable mature humans and animals to perceive their bodily selves in their dynamic relationships with the world around them.

Having established that this sensitivity to tactile-visual interactions in peripersonal space is available at 4 months of age is significant. Infants do not typically make successful reaches to objects that they have targeted in vision before 5 months of age32, and so the current findings indicate that infants can learn about the visual-tactile structure of peripersonal space despite an extremely limited ability to undertake skilled action in the environment. This is not to say that pre-reaching infants are not exposed to rich visual-tactile multisensory experiences in the first postnatal months. Indeed, we might speculate that the associations between visual motion and tactile contact that are necessary to explain the present findings might in part result from multisensory input arising from rich interpersonal infant-carer interactions occurring during activities such as feeding and tickling. Further research investigating the nature of early multisensory experiences in the first months of life44 could shed valuable light on the early origins of infants’ perceptions of the links between their bodies and the visual environment.

Studies of the neural basis of peripersonal space in mature primates5,45 and human adults46,47,48, have identified a neural network subserving fast neural and behavioural responses to objects and sounds approaching the body. These responses are thought to be underpinned by predictive mechanisms using visual motion cues to make judgments about the time and location of an impending tactile stimulus and subsequently enhancing tactile processing at the time and location of impending contact10. Our findings show that from 4 months of age human infants are capable of representing dynamic crossmodal spatiotemporal links between visual stimuli and subsequent tactile events. Although it seems reasonable that this multisensory perceptual capacity would be a necessary developmental precursor of an ability to make predictions about tactile contact on the basis of prior visual events, based on our current findings we cannot conclude that infants of 4 and 8 months of age are able to make such predictions.

One possible account of our findings that does not rest on a predictive ability is that the multisensory interactions reported here are mediated by crossmodal spatial attention49. For instance, in the Approaching condition, the visual object moving towards the infant may have cued them to (covertly) shift their attention towards the body where the tactile stimulus was later presented (NB: any trials where an overt eye movement was made were excluded). To our knowledge, no research has yet investigated crossmodal effects in selective attention in human infancy, therefore at present there is no directly relevant evidence to determine whether this is a plausible account of our findings in 4- and 8-month-old infants. Nonetheless there is evidence that both exogeneous and endogenous orienting mechanisms are available within the same sensory modality by 4 months of age50, though with considerable development of endogenous orienting through to childhood and beyond51,52,53. Furthermore, given the evidence of the development of endogenous attention in the first year of life52 we might consider whether this could contribute to explain the rapid changes in the SEPs that we observed in 8-month-old infants in the present study.

Another possible explanation of the reported modulation of young infants’ SEPs by visual stimuli may be that the SEPs reflect motor preparation responses triggered by the visual cues54. Indeed, as Eimer et al.54 show, in adults the mechanisms involved in attentional shifts and motor preparation appear to be very similar. Additionally, it has been demonstrated that approaching visual stimuli can trigger, in adults, the activation of sensorimotor networks responsible for the preparation of a timed motor response55,56. Nonetheless, it is of note that we have found evidence of a modulation of the SEP by prior visual events in infants as young as 4 months of age. At this age infants would generally not have obtained the motor skills to coordinate visual and tactile space with the hands or feet57 and, as such, it does not seem likely that they would be able to make the rapid motor responses that would be required if the reported effects on their SEPs were entirely driven by motor preparation.

A final possibility is that, even though the visual and tactile stimuli were presented separately in time and space, the infants may have perceived them simultaneously by virtue of an extended visual-tactile temporal binding window. Previous research has demonstrated a developmental narrowing of the visual-tactile temporal binding window in childhood58, and it seems reasonable to speculate that there might be an additional narrowing of visual-tactile binding between early infancy and later development. Therefore, there is a possibility that infants’ visual-tactile window might be longer than the temporal interval between the end of the visual stimulation and the beginning of the tactile stimulation. By extension, it may be that despite the spatial and temporal gap between the stimuli, the infants might have showed visual modulations of somatosensory processing because they perceived the visual and tactile events simultaneously. If this is the case, 4-month-old infants’ enhanced SEPs following approaching motion could have resulted from the congruency between approaching motion and a subsequent tactile contact (where this congruency may have been learned from prior encounters with objects approaching the body and touching it).

We have offered four potential accounts of the visual-tactile abilities here observed in 4- and 8-month-olds: that infants are able to process visual-tactile peripersonal events via (i) visual-tactile predictive process, (ii) visual-tactile crossmodal attention, (iii) visual cuing of motor preparation, and (iv) visual-tactile crossmodal binding. None of these accounts are mutually exclusive of the others, and indeed the various perceptual abilities implied may all play roles in supporting infants’ perceptual awareness of events in peripersonal space at various points in development and may be developmentally related to each other. For example, one possibility is that more crude coordination of visual and somatosensory interactions (via an extended visual-tactile temporal binding window), may provide a developmental bootstrap for more complex perceptual representations and skills used by older infants, children, and adults (e.g., visual-tactile crossmodal spatial attention and/or rapid visual-tactile predictive processing). In order to be able to differentiate between these possibilities and their developmental relationships, researchers will need to employ both neural and behavioural measures of multisensory functioning within a number of cross-sectional and longitudinal designs. Crucially however, all these accounts imply that from 4 months of age, infants have a means to coordinate their somatosensory (bodily) responses in a temporally contiguous way with prior dynamic visual information presented in extrapersonal space, thereby supporting a means of representing multisensory peripersonal events.

Lastly, we consider the differences between the 4- and 8-month-olds’ processing of visual tactile peripersonal events reported here. We observed that the enhancement of somatosensory responses following visual approach seen in 4-month-olds was not apparent in 8-month-olds. We also reported some exploratory analyses that tentatively established a relation between 8-month-old infants’ age in days and the modulation of their SEPs in response to somatosensory stimuli preceded by visual approaching or receding motion. The enhancement seen in the 4-month-old infants was also seen in the younger 8-month-olds, but gradually reversed with age in days such that by 260 days of age, infants show enhanced SEPs following receding motion on prominent components (P240, N362 and P470). These observed differences between 4- and 8-month-olds’ SEPs could indicate developmental changes in the brain and behavioural basis of peripersonal spatial representations, and this possibility is commensurate with a number of findings from other studies concerning the development of body representations and somatosensory processing in early life30,31,59. An alternative developmental explanation in the current study is attentional control. It may be that the older 8-month-old infants were able to better focus on the attention getter and to ignore the peripheral visual motion leading to smaller enhancements of their SEPs. Nonetheless, we consider this alternative explanation unlikely as, even in adults whose attentional control is more efficient than infants’, the detection of tactile stimuli is facilitated by unattended approaching visual motion9,10. Another alternative account is that the developmental trend towards increasing enhancement of SEPs in the receding condition represents the development of a neural process involved in signalling prediction error (i.e., that the tactile contact experienced following receding motion is unexpected). As such, the gradual emergence across the 8-month-old group of greater responses to unexpected tactile contact might represent the emergence of top-down influences of predictions on the perceptual processing of somatosensory information60. Based on this finding, we may speculate that if a group of adults were presented with these same stimuli, they would show a pattern of SEP responses like that shown by the older 8-month-old participants reported here. Research into infants’ sensory predictions33,61,62,63,64, has shown that, even in the first year of life, infants’ early sensory processing can be modulated by top-down influences, suggesting that infants can generate an internal model of the environment and form predictions about it63,65. The age-related changes in the visual modulation of SEPs reported here may represent a feature of the emergence of an ability to predict tactile outcomes on the body based on the prior movements of visual stimuli in extrapersonal and peripersonal space.

Here we have shown that, from as early as 4 months of age, infants process somatosensory information differently when it has been preceded by a temporally and spatially distant visual object approaching the body. This finding indicates that fundamental aspects of the multisensory processes underpinning peripersonal space representations, and self-awareness more generally, are in place prior to the onset of skilled action. Nevertheless, there are striking developmental changes in how infants’ brains process visual-tactile events occurring across peripersonal space between 4 and 8 months of age. As infants approach 9 months we increasingly see, in later somatosensory components, a greater processing of those tactile stimuli that were not predicted by preceding unattended visual motion. These findings yield exciting new clues to the ontogeny of human self-awareness in the first year of life, suggesting important postnatal developments in the ability to form expectations about the interactions between the body and the external environment.

Method

Participants

The 4-month-old age-group included 20 infants (9 female) aged on average 125 days (4.09 months, SD = 9.24 days, range between 109 and 137 days, recruited between 3.5 and 4.5 months of life). Further 31 4-month-olds participated, but were excluded due to restlessness or lack of interest in the screen (n = 9), insufficient artefact-free trials (n = 5), high impedance around the reference (n = 1), or experimental error (n = 16). The 8-month-old age-group included 20 infants (10 female), aged on average 248 days (8.11 months, SD = 8.06 days, range between 230 and 257 days, recruited between 7.5 and 8.5 months of life). Further 34 8-month-olds participated, but were excluded due to restlessness or lack of interest in the screen (n = 14), insufficient artefact-free trials (n = 3), high impedance around the reference (n = 3), or experimental error (n = 14). This rejection rate is not unusual for ERP research in infancy66, particularly in studies, like the current, where infants are required to attend each stimulus for a number of seconds67. As no previous studies used similar designs and manipulations, there were no strong expectations concerning effect sizes. The sample size for each age group (n = 20) was therefore determined based on prior studies using a similar method with infants of similar age35,68. The selected sample size was later corroborated by a power analysis based on a study investigating the modulation of SEPs in 6.5- and 10-month-old infants31. This study indicated an effect size of d = 0.73 for the effect of condition on the SEPs, which given α = 0.05 and with an expected power of 0.85 would require a sample of 19 infants.

Testing took place when the infants were awake and alert. The parents were informed about the procedure and provided informed consent for their child’s participation. The participants were recruited from the Goldsmiths InfantLab database and received a small gift to thank them for their participation. Ethical approval was gained from the Institutional Ethics Committee, Goldsmiths, University of London, and the study was conducted in accordance with the Declaration of Helsinki.

Design

The study included two conditions, based on the direction of the visual motion events presented: Approaching and Receding. Each condition comprised two types of trials: Touch and No-Touch. In both types of trials of each condition, the infants were presented with a set of visual events on screen, including an attention-getter in the top half of the screen and visual motion events in the bottom half. In the Touch trials, they were also presented with vibrotactile stimuli on both hands. The No-Touch trials were included to ensure that the visual elements of the stimulation could be subtracted from the somatosensory responses (see “EEG recording and analyses”).

The trials were presented in groups of 4, within which each condition and trial type were presented in a random order, and grouped in blocks of 8 trials each. After each block, a 12 s video was presented to break up the repetitiveness of the stimuli. Each infant was presented with blocks of trials until their attention lasted, up to a maximum of 10 blocks.

Procedure, stimuli and apparatus

Each infant sat on their parent’s lap on a chair in front of a 24″ screen in a dimly lit room. The parent kept their child’s hands close together about 30 cm away from the screen and held them as still as possible, by holding the wrists. While this led to tactile stimuli for the infant, such touches are very unlikely to have had an impact on the reported SEPs for two reasons: (i) the tactile stimulation from the parents was a varying constant throughout the study, and not time-locked to the experimentally presented tactile stimuli, and was therefore unlikely to have an impact on event-related responses to time locked tactile stimuli; (ii) any changes in the parents’ holding of their infants’ wrists (e.g., repositioning, changes in pressure), would be completely random across participants and conditions, and therefore very unlikely to influence grand averaged ERPs or differences between conditions. An infant-friendly video attracted the infant’s attention to the screen: as soon as the infant was looking at the screen, the experiment began.

Throughout each trial, the infant was shown an “attention-getting” animal character face in the top half of the screen. This was constantly rotating, alternately clockwise and anticlockwise (the first motion direction was counterbalanced across participants), between an orientation where the upper portion of its vertical axis was 45° clockwise from the vertical, and one where it was 45° anticlockwise from the vertical. The animal face (22.17° × 20.43°) was randomly selected from a set of 10 on a trial-by-trial basis. The attention-getter was intended to attract and hold the participant’s attention during the trial, in particular when the visual moving stimulus was presented: any trials where the infant looked away from the attention getter during the presentation of the visual moving stimulus were identified by offline coding and excluded from the analyses.

Once the infant had fixated the attention-getter (verified via live video feed), the experimenter triggered the stimuli. A 3D rendered red ball (5.90° × 5.57° at its smallest) appeared in the lower half of the screen and either approached the infant’s hands or receded towards the background, moving for 333 ms. The duration of the motion was based on a previous study with adult participants10 and doubled to ensure that young infants could perceive the visual motion. The ball moved within a 3D rendered room, whose width, measured at the bottom of the screen, was 40 cm. This measure was used as a common reference between the real and the simulated worlds to calculate the distance of the simulated background from the screen surface. We wanted the screen surface to be perceived as halfway between the background and the infant’s hands, which were 30 cm away from the screen. The 40 cm width of the simulated room corresponded to 28 measurement units in the rendering software, therefore we located the background 21 measurement units away from the front, at a simulated distance of 30 cm from the screen surface and of 60 cm from the participants’ hands. In the Approaching condition, the ball moved from the background towards the infant’s hands but disappeared when the rendering specified that it reached halfway through its trajectory. In the Receding condition, the ball moved from the halfway point towards the background.

In both conditions, after the ball disappeared there was an interval (333 ms) followed, in the Touch trials only (50% of the total), by a 200 ms vibrotactile stimulus on both hands (Fig. 4 and Supplementary Movie S1). The interval before the tactile stimulus lasted as long as the motion: as the ball disappeared when it reached the simulated halfway point between the background and the infant’s hands, this ensured that the tactile stimulus was presented at the expected time to contact of the simulated moving ball with the hands in the Approaching condition.

Schematic representation of the experimental procedure. When the infant fixated on the attention-getting animal face, the experimenter triggered the presentation of the experimental stimuli. A red ball appeared in the lower half of the screen and either approached the infant’s hands or receded towards the background for 333 ms. After a 333 ms interval, the infant received, on 50% of the trials, a vibrotactile stimulus on both hands, lasting 200 ms. During the study, the parent was instructed to keep the infant’s hands close to each other and along the midline, i.e., along the simulated trajectory of the moving ball.

Each trial lasted minimum 4 s, including: minimum 2 s when only the attention-getter was presented, 333 ms of visual motion, the 333 ms interval, and 1333 ms of response collection time, whose first 200 ms corresponded to the tactile stimulation in the Touch trials. The duration of the response collection time was chosen to ensure that the SEPs in response to each tactile stimulus had completely subsided before a new tactile stimulus was presented.

The vibrotactile stimuli were delivered via custom-built voice coil tactile stimulators (tactors), driven by a 220 Hz sine wave. One tactor was placed in each of the infant’s hands and fixed to the palms with self-adherent bandage; the infant’s hands and the tactors were covered with cotton mittens. An audio track made of a lullaby and white noise was played ambiently to mask the noise of the tactors. The 3D stimuli were rendered using Blender 2.79b (Blender Foundation, Amsterdam, Netherlands); the stimuli were presented using MatLab 2006a (7.2.0.232) and Psychtoolbox 3 3.0.9 (beta).

EEG recording and analyses

The participants’ electrical brain activity was continuously recorded using a Hydrocel Geodesic Sensor Net (Electrical Geodesics Inc., Eugene, Oregon), consisting of 128 silver-silver chloride electrodes evenly distributed across the scalp and referenced to the vertex. The potential was amplified with 0.1 to 100 Hz band-pass and digitized at 500 Hz sampling rate67. The raw data were processed offline using NetStation 4.5.1 (Electrical Geodesics Inc., Eugene, Oregon). Continuous EEG data were high-pass filtered at 0.3 Hz and low-pass filtered at 30 Hz using digital elliptical filtering67. They were segmented in epochs from 300 ms before the tactile stimulus onset to 1300 ms after it and baseline-corrected to the average amplitude of the 100 ms preceding the tactile stimulus onset. Epochs containing movement artefacts or more than 12 bad electrodes67 were visually detected and rejected. Bad electrodes were interpolated trial-by-trial using spherical interpolation of neighbouring channel values. Artefact free data (4mo, M = 9.85, 8mo, M = 9.63, see Supplementary Table S2; small number of trials are usual in infancy research67) were re-referenced to the average potential over the scalp, then individual averages were calculated. Additionally, the video recordings of the session were coded offline to identify and exclude any trials where the participant: (i) was not looking at the screen during the presentation of the visual moving stimulus, (ii) was looking at the visual moving stimulus rather than the attention-getter, (iii) had their hands in an incorrect position. The number of trials excluded for due to these codings was exiguous compared to the number of trials presented (see Supplementary Table S3 for details).

We wanted to compare the responses to the tactile stimuli themselves, ensuring that the analysed waveforms did not include any event related electrical activity on the scalp that was driven purely by the visual elements of the stimulation. To achieve this, for both visual motion conditions, we removed any electrical activity driven by the visual elements of the stimulation, which were common across the two types of trials, by computing the difference waveform obtained subtracting the response recorded in the No-Touch trials from that recorded in the Touch trials (see “Design”).

We were interested in the SEP responses recorded from sites near somatosensory areas. To identify the electrode clusters for the analyses, we averaged the difference waves and inspected the topographic maps representing the scalp distribution of the electrical activity39, confirming the presence of hotspots in the regions surrounding CP3 and CP4 in the 10–20 system69,70 (Figs. 1c and 2c). Next, we visually inspected the recordings from the electrodes within these areas and isolated, for each hemisphere, the electrode cluster showing the most pronounced SEP components35: for the 4-month-old group, 36, 41, 42 (left hemisphere) and 93, 103, 104 (right hemisphere); for the 8-month-old group, 41, 46 47 (left hemisphere) and 98, 102, 103 (right hemisphere).

Data availability

The present study has not been formally preregistered. The datasets generated and/or analysed during the current study are available in the University of Birmingham eData repository and can be retrieved from https://doi.org/10.25500/edata.bham.00000447. The scripts and datasets used to perform the analyses reported in this manuscript are available online on the Open Science Framework website and can be retrieved from https://osf.io/jg7xf/. A version of this manuscript has been posted as a preprint71, made available under a CC-BY-NC-ND 4.0 International license, and can be retrieved from: https://www.biorxiv.org/content/10.1101/2020.09.07.279984v1.

References

-

Cléry, J. & Ben Hamed, S. Frontier of self and impact prediction. Front. Psychol. 9, 1073 (2018).

Google Scholar

-

de Vignemont, F. & Iannetti, G. D. How many peripersonal spaces?. Neuropsychologia 70, 327–334 (2015).

Google Scholar

-

de Vignemont, F. & Alsmith, A. The Subject’s Matter. Self Consciousness and the Body. Representation and Mind (MIT Press, 2017).

Google Scholar

-

Brozzoli, C., Makin, T. R., Cardinali, L., Holmes, N. P. & Farnè, A. Peripersonal space: A multisensory interface for body-object interactions. In The Neural Bases of Multisensory Processes (eds Murray, M. M. & Wallace, M. T.) 449–466 (Taylor & Francis, 2012).

-

Rizzolatti, G., Fadiga, L., Fogassi, L. & Gallese, V. The space around us. Science 277, 190–191 (1997).

Google Scholar

-

Clark, A. Whatever next? Predictive brains, situated agents, and the future of cognitive science. Behav. Brain Sci. 36, 181–204 (2013).

Google Scholar

-

Friston, K. A theory of cortical responses. Philos. Trans. R. Soc. B Biol. Sci. 360, 815–836 (2005).

Google Scholar

-

Friston, K. The free-energy principle: A unified brain theory?. Nat. Rev. Neurosci. 11, 127–138 (2010).

Google Scholar

-

Cléry, J., Guipponi, O., Odouard, S., Wardak, C. & Ben Hamed, S. Impact prediction by looming visual stimuli enhances tactile detection. J. Neurosci. 35, 4179–4189 (2015).

Google Scholar

-

Kandula, M., Hofman, D. & Dijkerman, H. C. Visuo-tactile interactions are dependent on the predictive value of the visual stimulus. Neuropsychologia 70, 358–366 (2015).

Google Scholar

-

Kandula, M., Van der Stoep, N., Hofman, D. & Dijkerman, H. C. On the contribution of overt tactile expectations to visuo-tactile interactions within the peripersonal space. Exp. Brain Res. 235, 2511–2522 (2017).

Google Scholar

-

Orioli, G., Santoni, A., Dragovic, D. & Farroni, T. Identifying peripersonal space boundaries in newborns. Sci. Rep. 9, 9370 (2019).

Google Scholar

-

Ronga, I. et al. Spatial tuning of electrophysiological responses to multisensory stimuli reveals a primitive coding of the body boundaries in newborns. Proc. Natl. Acad. Sci. USA. 118, 1–3 (2021).

Google Scholar

-

Ball, W. & Tronick, E. Infant responses to impending collision: Optical and real. Science 171, 818–820 (1971).

Google Scholar

-

Bower, T. G. R., Broughton, J. M. & Moore, M. K. Demonstration of intention in the reaching behaviour of neonate humans. Nature 228, 679–681 (1970).

Google Scholar

-

Náñez, J. E. Perception of impending collision in 3-to 6-week-old human infants. Infant Behav. Dev. 11, 447–463 (1988).

Google Scholar

-

Yonas, A. et al. Development of sensitivity to information for impending collision. Percept. Psychophys. 21, 97–104 (1977).

Google Scholar

-

Schmuckler, M., Collimore, L. M. & Dannemiller, J. L. Infants’ reactions to object collision on hit and miss trajectories. Infancy 12, 105–118 (2007).

Google Scholar

-

Orioli, G. Peripersonal Space Representation in the First Year of Life: A Behavioural and Electroencephalographic Investigation of the Perception of Unimodal and Multimodal Events Taking Place in the Space Surrounding the Body (University of Padova, 2017).

-

Orioli, G., Filippetti, M. L., Gerbino, W., Dragovic, D. & Farroni, T. Trajectory discrimination and peripersonal space perception in newborns. Infancy 23, 252–267 (2018).

Google Scholar

-

Filippetti, M. L., Johnson, M. H., Lloyd-Fox, S., Dragovic, D. & Farroni, T. Body perception in newborns. Curr. Biol. 23, 2413–2416 (2013).

Google Scholar

-

Filippetti, M. L., Orioli, G., Johnson, M. H. & Farroni, T. Newborn body perception: Sensitivity to spatial congruency. Infancy 20, 455–465 (2015).

Google Scholar

-

Freier, L., Mason, L. & Bremner, A. J. Perception of visual-tactile colocation in the first year of life. Dev. Psychol. 52, 2184–2190 (2016).

Google Scholar

-

Orioli, G., Bremner, A. J. & Farroni, T. Multisensory perception of looming and receding objects in human newborns. Curr. Biol. 28, R1294–R1295 (2018).

Google Scholar

-

Thomas, R. L. et al. Sensitivity to auditory-tactile colocation in early infancy. Dev. Sci. 21, e12597 (2018).

-

Zmyj, N., Jank, J., Schütz-Bosbach, S. & Daum, M. M. Detection of visual-tactile contingency in the first year after birth. Cognition 120, 82–89 (2011).

Google Scholar

-

Serino, A. et al. Peripersonal space: An index of multisensory body–environment interactions in real, virtual, and mixed realities. Front. ICT 4, 2 (2018).

Google Scholar

-

Driver, J. & Spence, C. Cross-modal links in spatial attention. Philos. Trans. R. Soc. Lond. Ser. B Biol. Sci. 353, 1319–1331 (1998).

Google Scholar

-

Macaluso, E. & Maravita, A. The representation of space near the body through touch and vision. Neuropsychologia 48, 782–795 (2010).

Google Scholar

-

Begum Ali, J., Spence, C. & Bremner, A. J. Human infants’ ability to perceive touch in external space develops postnatally. Curr. Biol. 25, R978–R979 (2015).

Google Scholar

-

Rigato, S. et al. The neural basis of somatosensory remapping develops in human infancy. Curr. Biol. 24, 1222–1226 (2014).

Google Scholar

-

Von Hofsten, C. An action perspective on motor development. Trends Cogn. Sci. 8, 266–272 (2004).

Google Scholar

-

Kouider, S. et al. Neural dynamics of prediction and surprise in infants. Nat. Commun. 6, 8537 (2015).

Google Scholar

-

Carlsson, K., Petrovic, P., Skare, S., Petersson, K. M. & Ingvar, M. Tickling expectations: Neural processing in anticipation of a sensory stimulus. J. Cogn. Neurosci. 12, 691–703 (2000).

Google Scholar

-

Rigato, S. et al. Cortical signatures of vicarious tactile experience in four-month-old infants. Dev. Cogn. Neurosci. 35, 75–80 (2019).

Google Scholar

-

de Haan, M. Infant EEG and Event-Related Potentials. Infant EEG and Event-Related Potentials (Psychology Press, 2007). https://doi.org/10.4324/9780203759660.

Google Scholar

-

Webb, S. J., Long, J. D. & Nelson, C. A. A longitudinal investigation of visual event-related potentials in the first year of life. Dev. Sci. 8, 605–616 (2005).

Google Scholar

-

Guthrie, D. & Buchwald, J. S. Significance testing of difference potentials. Psychophysiology 28, 240–244 (1991).

Google Scholar

-

Luck, S. J. & Gaspelin, N. How to get statistically significant effects in any ERP experiment (and why you shouldn’t). Psychophysiology 54, 146–157 (2017).

Google Scholar

-

Pinheiro, J. & Bates, D. M. Mixed-Effects Models in S and S-Plus (Springer Science & Business Media, 2000). https://doi.org/10.1198/jasa.2001.s411.

Google Scholar

-

R Core Team. R: A Language and Environment for Statistical Computing. (2018).

-

Bates, D. M., Mächler, M., Bolker, B. & Walker, S. Fitting linear mixed-effects models using {lme4}. J. Stat. Softw. 67, 1–48 (2015).

Google Scholar

-

Kuznetsova, A., Brockhoff, P. B. & Christensen, R. H. B. lmerTest package: Tests in linear mixed effects models. J. Stat. Softw. 82, 1–26 (2017).

Google Scholar

-

Smith, L. B., Jayaraman, S., Clerkin, E. & Yu, C. The developing infant creates a curriculum for statistical learning. Trends Cogn. Sci. 22, 325–336 (2018).

Google Scholar

-

Graziano, M. S. A. & Cooke, D. F. Parieto-frontal interactions, personal space, and defensive behavior. Neuropsychologia 44, 2621–2635 (2006).

Google Scholar

-

Holmes, N. P. & Spence, C. The body schema and multisensory representation(s) of peripersonal space. Cogn. Process. 5, 94–105 (2004).

Google Scholar

-

Makin, T. R., Holmes, N. P. & Zohary, E. Is that near my hand? Multisensory representation of peripersonal space in human intraparietal sulcus. J. Neurosci. 27, 731–740 (2007).

Google Scholar

-

Serino, A. Peripersonal space (PPS) as a multisensory interface between the individual and the environment, defining the space of the self. Neurosci. Biobehav. Rev. 99, 138–159 (2019).

Google Scholar

-

Spence, C. & Driver, J. Crossmodal Space and Crossmodal Attention (Oxford University Press, 2004).

Google Scholar

-

Csibra, G., Tucker, L. A. & Johnson, M. H. Differential frontal cortex activation before anticipatory and reactive saccades in infants. Infancy 2, 159–174 (2001).

Google Scholar

-

Colombo, J. & Cheatham, C. L. The emergence and basis of endogenous attention in infancy and early childhood. Adv. Child Dev. Behav. 34, 283–322 (2006).

Google Scholar

-

Oakes, L. M., Kannass, K. N. & Shaddy, D. J. Developmental changes in endogenous control of attention: The role of target familiarity on infants’ distraction latency. Child Dev. 73, 1644–1655 (2002).

Google Scholar

-

Waszak, F., Li, S. C. & Hommel, B. The development of attentional networks: Cross-sectional findings from a life span sample. Dev. Psychol. 46, 337 (2010).

Google Scholar

-

Eimer, M., Forster, B., Van Velzen, J. & Prabhu, G. Covert manual response preparation triggers attentional shifts: ERP evidence for the premotor theory of attention. Neuropsychologia https://doi.org/10.1016/j.neuropsychologia.2004.08.011 (2005).

Google Scholar

-

Billington, J., Wilkie, R. M., Field, D. T. & Wann, J. P. Neural processing of imminent collision in humans. Proc. R. Soc. B Biol. Sci. 278, 1476–1481 (2011).

Google Scholar

-

Field, D. T. & Wann, J. P. Perceiving time to collision activates the sensorimotor cortex. Curr. Biol. 15, 453–458 (2005).

Google Scholar

-

Galloway, J. C. & Thelen, E. Feet first: Object exploration in young infants. Infant Behav. Dev. 27, 107–112 (2004).

Google Scholar

-

Chen, Y. C., Lewis, T. L., Shore, D. I., Spence, C. & Maurer, D. Developmental changes in the perception of visuotactile simultaneity. J. Exp. Child Psychol. 173, 304–317 (2018).

Google Scholar

-

Bremner, A. J., Holmes, N. P. & Spence, C. Infants lost in (peripersonal) space?. Trends Cogn. Sci. 12, 298–305 (2008).

Google Scholar

-

Vidal Gran, C., Sokoliuk, R., Bowman, H. & Cruse, D. Strategic and non-strategic semantic expectations hierarchically modulate neural processing. Eneuro 7, ENEURO.0229-20.2020 (2020).

Google Scholar

-

Emberson, L. L., Richards, J. E. & Aslin, R. N. Top-down modulation in the infant brain: Learning-induced expectations rapidly affect the sensory cortex at 6 months. Proc. Natl. Acad. Sci. USA 112, 9585–9590 (2015).

Google Scholar

-

Kayhan, E., Hunnius, S., O’Reilly, J. X. & Bekkering, H. Infants differentially update their internal models of a dynamic environment. Cognition 186, 139–146 (2019).

Google Scholar

-

Kayhan, E., Meyer, M., O’Reilly, J. X., Hunnius, S. & Bekkering, H. Nine-month-old infants update their predictive models of a changing environment. Dev. Cogn. Neurosci. 38, 100680 (2019).

Google Scholar

-

Zhang, F., Jaffe-Dax, S., Wilson, R. C. & Emberson, L. L. Prediction in infants and adults: A pupillometry study. Dev. Sci. 22, e12780 (2019).

Google Scholar

-

Köster, M., Kayhan, E., Langeloh, M. & Hoehl, S. Making sense of the world: Infant learning from a predictive processing perspective. Perspect. Psychol. Sci. 15, 562–571 (2020).

Google Scholar

-

Stets, M., Stahl, D. & Reid, V. M. A meta-analysis investigating factors underlying attrition rates in infant ERP studies. Dev. Neuropsychol. 37, 226–252 (2012).

Google Scholar

-

Hoehl, S. & Wahl, S. Recording infant ERP data for cognitive research. Dev. Neuropsychol. 37, 187–209 (2012).

Google Scholar

-

Saby, J. N., Meltzoff, A. N. & Marshall, P. J. Neural body maps in human infants: Somatotopic responses to tactile stimulation in 7-month-olds. Neuroimage 118, 74–78 (2015).

Google Scholar

-

Oostenveld, R., Fries, P., Maris, E. & Schoffelen, J.-M. FieldTrip: Open source software for advanced analysis of MEG, EEG, and invasive electrophysiological data. Comput. Intell. Neurosci. https://doi.org/10.1155/2011/156869 (2011).

Google Scholar

-

Popov, T., Oostenveld, R. & Schoffelen, J. M. FieldTrip made easy: An analysis protocol for group analysis of the auditory steady state brain response in time, frequency, and space. Front. Neurosci. 12, 711 (2018).

Google Scholar

-

Orioli, G., Parisi, I., van Velzen, J. L. & Bremner, A. J. The ontogeny of multisensory peripersonal space in human infancy: From visual-tactile links to conscious expectations. bioRxiv 1, 2020.09.07.279984 (2020).

Acknowledgements

We are grateful to our participants and their parents, for their invaluable contribution. This research was supported by a British Academy/Leverhulme Trust grant (SG170901) awarded to G.O. and A.B. and a Leverhulme Trust Early Career Fellowship (ECF-2019-563) awarded to G.O.

Author information

Authors and Affiliations

Contributions

G.O. conceived the study, designed it, collected the data, undertook data processing and analyses, and wrote the report. I.P. collected the data and undertook some data processing. A.B. and J.v.V. contributed to the design of the study and the interpretation of the findings. A.B. helped writing the report.

Corresponding author

Ethics declarations

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Supplementary Information.

Supplementary Video 1.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

Reprints and Permissions

About this article

Cite this article

Orioli, G., Parisi, I., van Velzen, J.L. et al. Visual objects approaching the body modulate subsequent somatosensory processing at 4 months of age.

Sci Rep 13, 19300 (2023). https://doi.org/10.1038/s41598-023-45897-4

-

Received: 23 May 2022

-

Accepted: 25 October 2023

-

Published: 21 November 2023

-

DOI: https://doi.org/10.1038/s41598-023-45897-4

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.