Will artificial intelligence (AI) one day be able to read our brains and track our thoughts? A series of recent studies suggests that AI can decode our brain activity and translate it into text or images while we listen to a story, try to speak, or look at pictures. The latest such work, presented in Paris on Wednesday, October 18, by a team from Meta AI (the artificial intelligence research division of Meta, formerly Facebook), demonstrates these spectacular advances.

Jean-Rémi King and his colleagues described how their AI algorithms were able to reconstruct photographs viewed by volunteers from an analysis of their brain activity. Their observations were presented in a preprint, a paper that has not yet been peer-reviewed for publication in a scientific journal. The work relied on data sets obtained by magnetoencephalography (MEG) and functional magnetic resonance imaging (fMRI) from volunteers who were shown photographs. These data were then fed to a series of AI decoders, which learned to interpret them and translate them back into images.

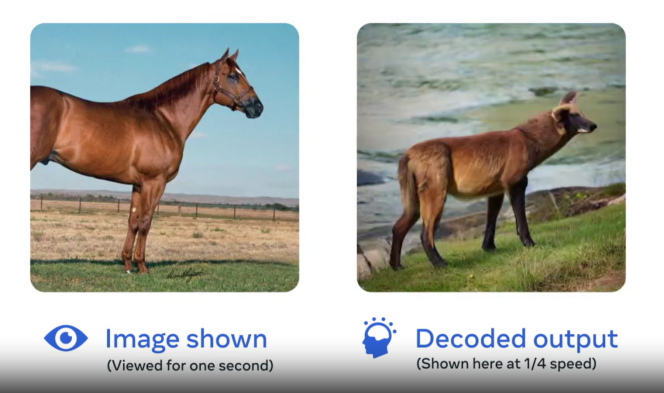

The result? Although the likeness between the original and the reconstruction is not perfect, the similarities are often striking. A zebra appears clad in a cross between cowhide and okapi, and a little girl with a kite has an unidentified object above her head; but a surfer seems to ride the same wave as in the original photo, and a skier in a red ski suit keeps the same posture. The "wow effect" is guaranteed, "even for the researchers on our team," admitted King, an academic researcher (CNRS, ENS) currently seconded to Meta AI for five years. "Just two years ago, I would never have thought that this kind of result would be possible."

Brain decoders

In recent months, several papers in the field of speech have demonstrated AI's potential to decode brain activity. Some used invasive methods, collecting data through electrodes implanted in the brains of paralyzed people. Others used non-invasive ones: MEG and fMRI, large machines into which the subject's head is placed, but also a simple electroencephalography (EEG) cap.

On May 1, in the journal Nature Neuroscience, a team from the University of Texas at Austin led by Alexander Huth described an attempt to use an AI decoder to reproduce short stories heard by subjects lying in an fMRI scanner. The experiment was not entirely conclusive, but it showed that the AI could partially recover the words and the general meaning. The decoder was also able to generate text from a story the volunteers imagined, or from a video they watched. Part of the meaning was preserved, more faithfully than a purely random text would achieve. It is as if the brain, across these different modalities (listening to, imagining or watching a narrative), produced "a neural code shared between the visual and the spoken word," said King.
