Humane’s AI Pin, which opens for pre-orders on November 16, is a big leap for wearables, but does it answer the existential question at the heart of all AI?

If, on the off-chance, you feel your newest-generation, avant-garde smartphone is too cumbersome to use, you’ll probably be glad to know the world is almost ready with alternative wearables. They put into perspective a broader spectrum of technology around us, some of which is already in our homes and some of which we’d likely adopt in the coming years. Artificial Intelligence (AI) is percolating to the very core of the functionality that defines the gadgets we use.

Wearables, smart speakers, intelligent displays, and even your good old Windows PC with the Copilot assistant are getting a new lick of paint with AI. Yet a gadget built entirely with generative AI as its heart and brain will only be as useful as the generative AI tool’s weakest link. These tools are called generative because, having been trained on large sets of data, they can generate responses such as text, voice or visuals to your questions; in that sense, generative AI is already an intelligent model. Examples include a chatbot replying to your query, or a text-to-image tool that creates an image based on your keywords.

So what are the weak links we’re talking about? Google’s Bard made factual errors during its first demo. It was asked, “What new discoveries from the James Webb Space Telescope can I tell my 9-year-old about?” The chatbot offered three points, including suggesting it “took the very first pictures of a planet outside of our own solar system.” That, as it turns out, was incorrect. The achievement was ticked off in 2004 by the European Southern Observatory’s Paranal Observatory’s NAOS+CONICA instrument.

But there is a deeper question that AI-tech makers need to ask themselves, and it hits at an existential core: Does their product have a definitive use case? How can AI take us forward?

Technology start-up Humane gave the world its first glimpse of the AI Pin in April. Here too, its weak link was on display. During the demo, it suggested that the April 8, 2024 solar eclipse will be best viewed from Australia and East Timor. Turns out, however, the best places will be in North America, across parts of Mexico, the United States and Canada, according to NASA.

All the same, the company debuted the AI Pin on the runway at Paris Fashion Week in September. It wouldn’t be hyperbole to call it the boldest bet yet on AI-powered wearables. The device is much like those badges from Star Trek. The question is, can a $699 (around ₹58,200) wearable, with pre-orders beginning on November 16 and shipping in early 2024, really be effective as an interface mediator between you and your phone? Or are we yet to hit the home run in the melding of AI-exclusive gadgets?

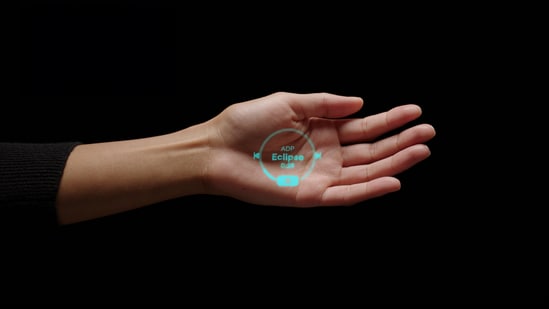

The AI-powered wearable is voice-enabled, or you can press and hold a button on it to have a verbal conversation. Stretch your arm, open the palm, and a built-in compact projection system (Humane calls this a Laser Ink Display) shows a visual of what you’ve asked for, using your palm as the projection screen. This would allow the convenience of reading incoming messages without having to fish the smartphone out of a pocket or handbag.

Click your fingers to select something on the virtual interface. Or tap, double tap and swipe on the touch panel on the wearable itself, for tasks such as managing phone calls or the volume of music playback. There is also a 13-megapixel camera that takes a photo with a tap or a voice command (videos will be enabled with a future software update), OpenAI’s ChatGPT chatbot for query responses, and an AI layer that can catch you up on messages, emails and the calendar in a crisp summary.

But is the Humane AI Pin that different from your smartwatch, which already acts as the go-between for you and your smartphone? Managing calls, catching you up on messages and emails, tracking health, integrating an AI chatbot of some sort: in core functionality, it doesn’t seem much different. The projection system, though, is straight out of sci-fi movies. Will potential buyers feel it’s better than interacting directly with their smartphone?

“Plenty of technology that looked like a sure bet ends up selling for 90 percent off at Best Buy,” OpenAI CEO Sam Altman, who is also an investor in Humane, said last month at WSJ’s Future of AI conversation.

What the future looks like for Humane’s AI Pin is anyone’s guess.

Other AI makers are trying to answer fundamental questions of use.

Snap, an augmented reality (AR) tech company with plans for its next generation of smart spectacles, uses OpenAI in its new lenses. This is not the first time that Snap and OpenAI have worked on something together. The former’s My AI chatbot uses the GPT engine as the foundation for text conversations with a machine, much like OpenAI’s own ChatGPT and Microsoft’s Bing chatbot. With its upcoming AI filters for smart spectacles, Snap has identified virtual reality experiences as a unique use case for AI, one that makes it easier to build new tools.

Augmented reality (AR) headsets are expected to be in vogue next year, once Apple releases its own. Snap is making an early move, and getting developers the tools they need.

Earlier this month, Snap shipped the Lens Studio 5.0 Beta to 330,000 developers who’ve created as many as 3.5 million lenses for the platform. Ask your smart spectacles how far away Neptune is from Earth, and you’ll have the answer flash in front of your eyes – approximately 2.7 billion miles.

While Google, Microsoft and OpenAI are some names leading the way with consumer-focused generative AI tools, Amazon is rapidly catching up. Later this year, beginning with a preview programme in the US region, a smarter and more conversant Alexa will arrive. A large language model (LLM) will give Alexa more contextual ability than it has at present, in the Echo range of smart speakers, smart displays and as an assistant in your phone.

Till a few years ago, Alexa, Google Assistant and Apple’s Siri were the pinnacle of smart tech. For the better part of the past decade, their responses to our voice commands allowed us to search the web, add stuff to our Amazon shopping cart, learn about the weather, play music, control home devices and even learn sports scores.

Generative AI, in one fell swoop, superseded these assistants: its models had bigger data sets to learn from and could not only follow a conversation from one question to the next but also hold on to context as the conversation went along.

Generative AI for Alexa will mean three important things: the ability to have a proper conversation (more than what it already does), to understand context to personalise results for users, and to understand the complexity of commands. Amazon says it is also looking for ways to reduce latency, i.e., the time taken by the assistant to locate the necessary response to a query. This should, in theory, make conversations flow without pauses.

Not too long ago, HT tested Indian tech company Noise’s Luna Ring. It is a fitness-tracking ring that relies heavily on AI to analyse data collected by infrared photoplethysmography (PPG) sensors, skin temperature sensors, and a 3-axis accelerometer. It tracks your movement through the day along with heart rate and blood oxygen levels, and your sleep quality along with body temperature.

All this is arranged into types of scores (readiness score, sleep score) to better understand how you’re doing, and how ready you may be to tackle the challenges of a busy day ahead. This added to the tracking duties of a fitness band or smartwatch, and effectively so, in our experience. Noise, too, may have found a definitive use case in the increasingly relevant intersection of AI and health tracking using wearables. The health data set this AI model is learning from will only get better as more real-world user-generated data becomes available for it to calculate health and wellness metrics, such as sleep quality and energy levels.

Samsung is joining the AI race too, with Galaxy AI expected to arrive on its Android phones next year. As of now, what we know is that there will be an AI Live Call Translate feature, with audio and translations as close to real-time as possible, to let you have a useful call with someone speaking in another language. This will be a first-of-its-kind use of AI, particularly on smartphones, but specifics are scarce and it is too early to make any bets on accuracy or speed of translation.

The Humane AI Pin may just be the first true AI hardware of its kind. And this is just the beginning. Generative AI, chatbots and the search for new use cases (and form factors, such as clips and pendants; Rewind.ai is making one) will eventually hit the sweet spot of utility, accuracy and experience. This will define ultimate value.
