In today’s fast-paced business landscape, artificial intelligence has shifted from a buzzword to a crucial part of organizational strategy. Recognizing the imperative to stay ahead of this curve, Capgemini launched its Generative AI Lab.

Capgemini’s Generative AI Lab is an initiative designed to navigate the evolving topography of AI technologies. This lab is not just another cog in the machine; it’s a compass for the enterprise, setting the coordinates for AI implementation and management.

The aim of the lab is straightforward yet ambitious: to understand continual developments in AI. It runs under the umbrella of Capgemini’s “AI Futures” domain, tasked with finding new advances in AI early on and scrutinizing their potential implications, benefits, and risks. The lab operates as a dual-pronged mechanism: partly analytical, providing a clear-eyed assessment of the AI landscape, and partly operational, testing and integrating new technologies.

A FORWARD-LOOKING INITIATIVE

The Generative AI Lab also homes in on multifaceted research areas that promise to advance the field of AI. On the technical front, it explores multi-agent systems, which combine specialized large language models (LLMs) that each excel at a particular task, such as text generation or sentiment analysis. These are supplemented by model-driven agents rooted in mathematical, physical, or logical reasoning to create a robust, versatile ecosystem.
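To make the multi-agent idea concrete, here is a minimal Python sketch under stated assumptions. It is not the lab's implementation: the names `Agent`, `sentiment_agent`, `arithmetic_agent`, and `route` are hypothetical, and a trivial keyword rule stands in for a specialized LLM. It only illustrates routing each task to the agent suited to it, with a model-driven agent handling exact computation.

```python
import operator
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Agent:
    """A named specialist that turns a text payload into a text answer."""
    name: str
    handle: Callable[[str], str]


def sentiment_agent(text: str) -> str:
    # Stand-in for a specialized LLM (assumption): a simple keyword rule.
    positive = {"good", "great", "excellent"}
    negative = {"bad", "poor", "terrible"}
    words = set(text.lower().split())
    if words & positive and not words & negative:
        return "positive"
    if words & negative and not words & positive:
        return "negative"
    return "neutral"


def arithmetic_agent(text: str) -> str:
    # Model-driven agent: exact integer arithmetic instead of generated text.
    ops = {"+": operator.add, "-": operator.sub, "*": operator.mul}
    left, symbol, right = text.split()
    return str(ops[symbol](int(left), int(right)))


REGISTRY: Dict[str, Agent] = {
    "sentiment": Agent("sentiment", sentiment_agent),
    "arithmetic": Agent("arithmetic", arithmetic_agent),
}


def route(task: str, payload: str, registry: Dict[str, Agent] = REGISTRY) -> str:
    """Dispatch the payload to the agent registered for this task."""
    if task not in registry:
        raise ValueError(f"no agent registered for task {task!r}")
    return registry[task].handle(payload)


if __name__ == "__main__":
    print(route("sentiment", "great service"))
    print(route("arithmetic", "6 * 7"))
```

In a real system the registry entries would wrap actual models, and the routing decision itself might be made by another model rather than by an explicit task label.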

The lab is also developing ways to make LLMs more sensitive to real-world context, shaping AI behavior that is not just intelligent but also intuitively aware. In addition, it devotes considerable attention to the social, individual, and psychological dimensions, exploring how generative AI interacts with and affects human behavior, social norms, and mental well-being.

Finally, it takes a truly global perspective by investigating the geo-political implications of AI, like how advancements could shift power balances or impact international relations. By casting such a wide net of inquiry, the lab ensures a holistic approach to AI research, one that promises to yield technology that’s technically advanced, socially responsible, and geopolitically aware.

THE LAB’S FRAMEWORK FOR CONFIDENCE IN AI

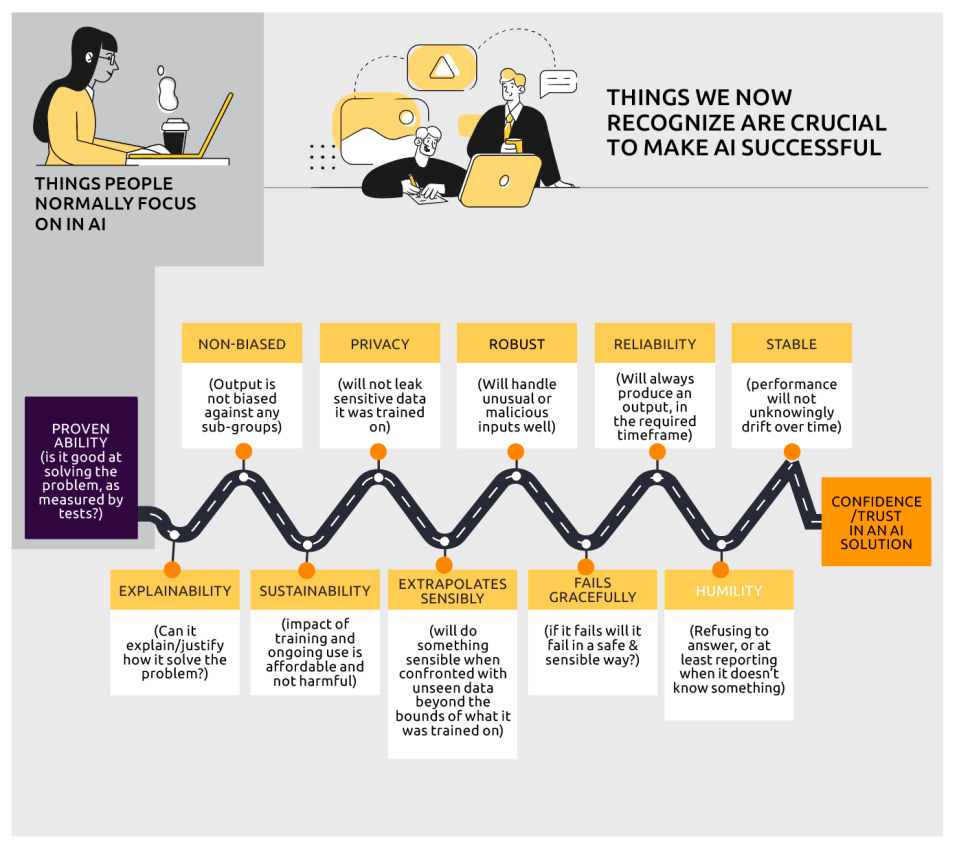

A noteworthy achievement of the lab so far is a comprehensive framework for instilling confidence in AI. According to the lab’s research, contemporary technology has implemented only three of the factors necessary for total confidence in AI applications, particularly in decision-making scenarios. The lab has identified the further factors that need to be considered, resulting in a 12-point blueprint that could serve as an industry standard for the ethical and effective implementation of AI.

Traditional considerations for AI usually highlight factors like “robustness,” ensuring the system performs reliably under a variety of conditions; “reliability,” so the performance is consistently up to par; and “stability,” meaning the system behaves predictably over time. These factors aim to ensure that an AI system works well, efficiently carrying out tasks and solving problems. However, the lab contends that this isn’t enough.

The lab highlights other equally important but often overlooked aspects, such as “humility,” the ability of the AI to recognize its limitations and not overstep its capabilities. Then there is “graceful degrading,” which refers to how the system handles errors or unexpected inputs: does it crash, start to hallucinate, or manage to keep some functionality? And, of course, there is “explainability,” the system’s ability to articulate its actions and decisions in a way that is understandable to humans and faithful to what the system actually did.

For instance, a recommendation algorithm might be robust, reliable, and stable, meeting all the traditional criteria. But what if it starts recommending inappropriate or harmful content? Here, the lab’s factors like humility and explainability would come into play, supplying checks to ensure the algorithm understands its limits and can explain its rationale for the recommendations it makes.
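The recommendation example can be sketched in Python as follows. This is a hedged illustration, not the lab's framework: the function `recommend`, the `Recommendation` type, the blocklist, the fallback item, and the 0.7 confidence threshold are all assumptions made for the sketch. It shows one way a wrapper could abstain when confidence is low (humility), fall back to a safe default when the scorer fails (graceful degrading), and always return a reason (explainability).

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Set, Tuple

# A scorer returns (item, confidence) pairs for a user; hypothetical interface.
Scorer = Callable[[str], List[Tuple[str, float]]]


@dataclass
class Recommendation:
    item: Optional[str]   # None means the system abstained
    explanation: str      # human-readable reason for the outcome


def recommend(
    user_id: str,
    scorer: Scorer,
    blocked: Set[str],
    fallback: str,
    min_confidence: float = 0.7,  # assumed threshold, for illustration only
) -> Recommendation:
    try:
        candidates = scorer(user_id)
    except Exception as exc:
        # Graceful degrading: keep some functionality instead of crashing.
        reason = f"scorer failed ({type(exc).__name__}); serving safe default"
        return Recommendation(fallback, reason)

    # Never surface items flagged as inappropriate or harmful.
    allowed = [(item, conf) for item, conf in candidates if item not in blocked]
    if not allowed:
        return Recommendation(None, "no permitted candidates; abstaining")

    item, conf = max(allowed, key=lambda pair: pair[1])
    if conf < min_confidence:
        # Humility: decline rather than overstep what the model knows.
        reason = f"best confidence {conf:.2f} is below {min_confidence:.2f}; abstaining"
        return Recommendation(None, reason)

    # Explainability: report what was chosen and why.
    return Recommendation(item, f"chose {item!r} with confidence {conf:.2f}")
```

The design choice here is that every path, including failure and abstention, returns the same structured result, so downstream code and human reviewers always see why a recommendation was or was not made.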

The goal is to develop an AI system that’s not just effective, but also aligned with human needs, ethical considerations, and real-world unpredictability. The lab investigates and identifies solutions for integrating these more nuanced factors, aiming to produce AI technology that is both high-performing and socially responsible.

OPERATIONAL RESULTS

Since its launch, the lab has made significant strides. It has identified crucial issues surrounding generative AI, spearheaded the integration of “judgment” in AI systems across the organization, and discovered promising technologies and startups. It has also pioneered methods for using AI to achieve greater efficiency, better results, and new organizational tasks. The lab has served not only as a think-tank but also as an operational wing that can apply its findings in a practical setting.

The lab’s workforce is its cornerstone. Its core team is multinational, cross-group, and cross-sector, with the expertise to cover a wide array of disciplines. This team can expand dynamically based on the requirements of specific projects, ensuring that all needs, however specialized, are met.

REPORTING AND EARLY WARNING

Transparency and prompt communication are embedded in the lab’s operational DNA. It is obliged to give early warning, alerting the organization to the potential hazards or advantages of new AI technologies. Regular reports maintain a steady flow of information, enabling informed decision-making at both the managerial and executive levels.

Another vital role the lab plays is that of an educator. By breaking down complex AI systems into digestible insights, it provides the leadership teams of both Capgemini and its clients with the tools they need to understand and direct the company’s AI strategy. It’s not just about staying updated; it’s about building a wide perspective that aligns with the broader strategic goals.

FUTURE OF AI

The Generative AI Lab at Capgemini has managed to go beyond the conventional boundaries of what an in-house tech lab usually achieves. It has successfully married theory with practice, innovation with implementation, and foresight with action. As the realm of AI continues to evolve unpredictably, the lab’s multi-dimensional approach – grounded in rigorous analysis, practical testing, and educational outreach – stands as a beacon for navigating the complex yet promising future of AI.

With this in-depth framework, the Generative AI Lab isn’t just looking at what makes AI work; it’s exploring what makes AI work well in a human-centered, ethical context. This initiative is a key step forward, one that promises to help shape the AI industry in ways that prioritize both technical excellence and ethical integrity.

By aligning a high-performing AI system with one that understands and respects its human users, the lab is on the frontier of one of the most groundbreaking technological shifts of our time. And as AI continues to weave itself into the fabric of our daily lives, initiatives like this are not just useful but essential, guiding us towards a future where technology serves humanity, and not the other way around. With its robust framework, multidisciplinary team, and actionable insights, the lab is indeed setting the stage for a more reliable, efficient, and ethically responsible AI-driven future.

INNOVATION TAKEAWAYS

NAVIGATIONAL COMPASS FOR AI

Capgemini’s Generative AI Lab serves as an enterprise compass, charting the course for AI application and management. This initiative is at the forefront of exploring multi-agent systems and enhancing LLMs with real-world context sensitivity. It’s about pioneering an AI landscape that’s not only intelligent but intuitively tuned to human nuance, reshaping how AI integrates into the fabric of society.

ETHICS AND UNDERSTANDING IN AI

The lab’s 12-point Confidence in AI framework aims to set new industry standards for ethical AI implementation. By considering factors beyond the traditional – like AI humility, graceful degrading, and explainability – the lab aspires to create AI systems that are not just technically efficient but also socially responsible and aligned with human ethics, ensuring technology that can perform robustly and understand its boundaries.

FROM THINK-TANK TO ACTION

The lab transcends the typical role of an R&D unit by not only dissecting and developing AI advancements but also operationalizing these insights. Its multinational, multidisciplinary team has been crucial in integrating AI judgment across the organization and fostering technologies that enhance operational efficiency, elevating the lab’s role to that of both an innovator and an implementer.

Interesting read? Capgemini’s innovation publication, Data-powered Innovation Review | Wave 7, features 16 such fascinating articles, crafted by leading experts from Capgemini and partners like Aible, the Green Software Foundation, and Fivetran. Discover groundbreaking advancements in data-powered innovation, explore the broader applications of AI beyond language models, and learn how data and AI can contribute to creating a more sustainable planet and society.