Duke University researchers recently created “MadRadar,” a black-box system that can attack automotive radars without any prior information about their configuration.

As more vehicles inch toward autonomous driving, radar has played a key role by providing perception in adverse conditions where cameras struggle. That growing reliance, however, opens the door to malicious attacks using spoofing hardware like MadRadar.

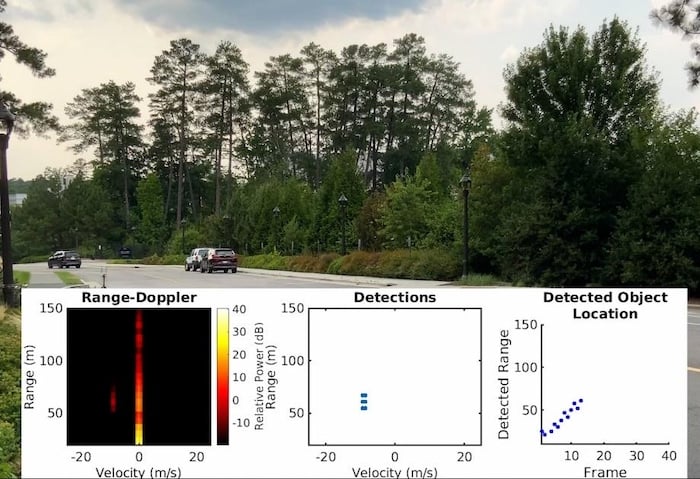

Duke University researchers have developed a system that can fool an automotive radar, removing its ability to detect car locations, as shown above. Image used courtesy of Duke University

The Duke University group, led by Dr. Miroslav Pajic and Dr. Tingjun Chen, hopes to shed light on the radar weaknesses MadRadar exploits, so OEMs can develop more secure autonomous vehicles.

The Basics of Automotive Radar

Automotive radar sensors use electromagnetic (EM) waves at far lower frequencies than visible light (modern systems typically operate in the 76 to 81 GHz band), which lets them see in the dark, in poor visibility, and in other scenarios where cameras may produce unclear images. Radar works as an EM counterpart to a flash camera: the radar sends out an EM signal (the “flash” in the case of a camera) and measures the reflected signals to learn about its surroundings.
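The "measure the reflection" step can be summarized with two textbook radar relations (general radar physics, not anything specific to MadRadar): the round-trip delay τ of an echo gives the target's range R, and the Doppler shift f_D of the echo gives its radial velocity v, where c is the speed of light and λ is the carrier wavelength.

$$
R = \frac{c\,\tau}{2}, \qquad f_D = \frac{2v}{\lambda}
$$

As a worked example with assumed values: a car 30 m away returns an echo after τ = 2(30 m)/(3×10^8 m/s) = 200 ns, and at 77 GHz (λ ≈ 3.9 mm) a closing speed of 20 m/s produces f_D ≈ 2(20 m/s)/(3.9×10^-3 m) ≈ 10.3 kHz.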

Radar serves many functions in modern vehicles, from park assistance to long-range measurement for adaptive cruise control. Image used courtesy of IEEE Signal Processing Magazine

This simplicity of operation, while beneficial for measuring distances and velocities, also opens the door to spoofing attacks, in which an attacker confuses the radar by injecting a fabricated signal that adds or removes targets. In one example, the Duke University researchers describe how a fake oncoming car could cause an autonomous driving system to steer off the road, potentially making it easier for attackers to steal the vehicle.

False Positives, False Negatives, and Translation Attacks

The MadRadar system can perform three types of attacks on a radar system: false positive (FP), false negative (FN), and translation attacks. In each case, MadRadar first learns about the victim radar by listening to its transmitted signals to estimate the radar's bandwidth, chirp time, and frame time.
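To make the "learn the victim's parameters" step concrete, here is a minimal sketch of one textbook way to estimate FMCW chirp parameters from an eavesdropped signal. It is not MadRadar's estimator, which is not described here. It assumes a noiseless, already down-converted complex-baseband signal, and the helper names `make_chirp_train` and `estimate_chirp_params` plus every numeric value are hypothetical. Chirp starts come from the signal envelope, and the chirp slope comes from a straight-line fit to the instantaneous frequency.

```python
import numpy as np


def make_chirp_train(fs, bandwidth, chirp_time, idle_time, n_chirps):
    """Hypothetical complex-baseband FMCW chirp train for testing.

    Each chirp sweeps linearly from -bandwidth/2 to +bandwidth/2 over
    chirp_time, followed by idle_time of silence.
    """
    n_active = int(round(chirp_time * fs))
    n_idle = int(round(idle_time * fs))
    t = np.arange(n_active) / fs
    slope = bandwidth / chirp_time  # chirp slope in Hz/s
    phase = 2 * np.pi * (-bandwidth / 2 * t + 0.5 * slope * t**2)
    one_period = np.concatenate([np.exp(1j * phase), np.zeros(n_idle, dtype=complex)])
    return np.tile(one_period, n_chirps)


def estimate_chirp_params(x, fs, threshold=0.5):
    """Estimate chirp period, chirp time, slope, and bandwidth.

    Assumes at least two chirps and an idle gap after each chirp.
    """
    active = np.abs(x) > threshold
    # Rising edges of the envelope mark the start of each chirp.
    starts = np.flatnonzero(active[1:] & ~active[:-1]) + 1
    if active[0]:
        starts = np.concatenate([[0], starts])
    chirp_period = np.median(np.diff(starts)) / fs

    # Analyze the first chirp: it ends at the first inactive sample.
    first = starts[0]
    end = first + int(np.argmin(active[first:]))
    seg = x[first:end]
    chirp_time = len(seg) / fs

    # Instantaneous frequency from the phase step between consecutive samples.
    phase_step = np.angle(seg[1:] * np.conj(seg[:-1]))
    inst_freq = phase_step * fs / (2 * np.pi)
    t = np.arange(len(inst_freq)) / fs
    slope, _ = np.polyfit(t, inst_freq, 1)  # linear FM gives a straight line
    return {
        "chirp_period_s": chirp_period,
        "chirp_time_s": chirp_time,
        "slope_hz_per_s": slope,
        "bandwidth_hz": slope * chirp_time,
    }


fs = 50e6  # assumed receiver sample rate
x = make_chirp_train(fs, bandwidth=20e6, chirp_time=20e-6, idle_time=5e-6, n_chirps=8)
print(estimate_chirp_params(x, fs))
# expect roughly: 25 us period, 20 us chirp, 1e12 Hz/s slope, 20 MHz bandwidth
```

Frame time could be found the same way from the longer gaps between bursts of chirps. A real attacker would also have to cope with noise and a receiver whose bandwidth covers the victim's sweep, neither of which is modeled here.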

Block diagram of MadRadar. Image used courtesy of ArXiv Preprint

FP attacks emulate the radar echo of a car that is not actually there. They can be performed by precisely controlling the attenuation and phase delay of the signal the victim radar receives, “confusing” it into thinking an object is within its range. Automotive OEMs have long known about these attacks, however, and have tested extensively against them in dedicated security test setups.
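Why attenuation and delay are enough to create a phantom becomes clearer with the FMCW range equation. The minimal sketch below (the helper `spoofed_range` and all waveform values are hypothetical, and it assumes the injected signal is an ideal delayed copy of the victim's chirp in complex baseband) dechirps that copy the way an FMCW receiver would. The delay alone sets the beat frequency, and therefore the range at which the fake target appears; the gain only sets how strong the phantom echo looks.

```python
import numpy as np

C = 3e8  # speed of light, m/s


def spoofed_range(delay_s, gain=0.1, fs=20e6, chirp_time=50e-6, bandwidth=300e6):
    """Range (m) at which an FMCW radar would report an injected echo.

    Hypothetical model: the spoofed signal is an attenuated (gain), delayed
    (delay_s) copy of the victim's chirp. Assumes delay_s << chirp_time.
    """
    n = int(round(chirp_time * fs))
    t = np.arange(n) / fs
    slope = bandwidth / chirp_time
    tx = np.exp(1j * np.pi * slope * t**2)  # victim's transmitted chirp
    rx = gain * np.exp(1j * np.pi * slope * (t - delay_s) ** 2)  # spoofed "echo"
    beat = tx * np.conj(rx)  # dechirp: leaves a tone at slope * delay_s
    spectrum = np.abs(np.fft.fft(beat))
    freqs = np.fft.fftfreq(n, d=1 / fs)
    f_beat = abs(freqs[np.argmax(spectrum)])
    return C * f_beat / (2 * slope)  # FMCW range equation


# To place a phantom car 30 m ahead, delay by the round-trip time 2R/c.
print(spoofed_range(2 * 30 / C))  # approximately 30.0
```

This also shows why the parameter-learning step matters: the attacker can only map a chosen delay to a chosen range if it knows the victim's chirp slope and timing.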

MadRadar’s FN attacks obscure real targets with what appears to be a large rise in background noise (a). As a result, the CA-CFAR algorithm can no longer detect the real target (b). Image used courtesy of ArXiv Preprint

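FN attacks, on the other hand, are a new capability the Duke researchers introduced in their study. They exploit the cell-averaging constant false alarm rate (CA-CFAR) detection that automotive radar systems use to suppress the effects of noise and clutter: CA-CFAR declares a target only when a cell's power exceeds a threshold scaled from the average power of its neighboring cells. By emulating a broad target with no discernible peaks, MadRadar raises that local noise estimate and can fool a victim radar into believing no target is present when there actually is one.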
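A toy 1-D CA-CFAR detector makes the FN mechanism visible. This is a minimal sketch, not the detector from the paper or from any specific radar: the window sizes, false-alarm probability, and range profile are hypothetical, and the threshold factor assumes square-law detection of exponentially distributed noise. A broad, peakless plateau injected around the real target inflates the training-cell average, so the threshold climbs above the target itself.

```python
import numpy as np


def ca_cfar(power, num_train=8, num_guard=2, pfa=1e-3):
    """1-D cell-averaging CFAR over a power profile (e.g., a range profile).

    For each cell under test, average num_train cells on each side (skipping
    num_guard cells next to it) and declare a detection if the cell exceeds
    alpha times that average.
    """
    power = np.asarray(power, dtype=float)
    n_cells = 2 * num_train
    # Threshold factor for square-law detection of exponential noise.
    alpha = n_cells * (pfa ** (-1 / n_cells) - 1)
    detections = np.zeros(len(power), dtype=bool)
    margin = num_train + num_guard
    for i in range(margin, len(power) - margin):
        lead = power[i - margin : i - num_guard]
        lag = power[i + num_guard + 1 : i + margin + 1]
        noise = (lead.sum() + lag.sum()) / n_cells
        detections[i] = power[i] > alpha * noise
    return detections


# Hypothetical range profile: flat noise floor with one real car in cell 32.
profile = np.ones(64)
profile[32] = 100.0
print(np.flatnonzero(ca_cfar(profile)))  # [32]

# FN-style attack: inject a broad, peakless plateau of energy around the car.
attacked = profile.copy()
attacked[20:45] += 60.0
print(np.flatnonzero(ca_cfar(attacked)))  # [] -> the real car is missed
```

The same CFAR normalization that keeps false alarms constant in clutter is what the attack turns against the radar: anything that looks like "more noise" near a target also raises the bar that target must clear.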

Example traffic scenarios for (a) no attack, (b) a false positive attack, (c) a false negative attack, and (d) a translation attack. Image used courtesy of ArXiv Preprint

Finally, translation attacks make a real object appear to move in a way it actually is not. For example, an oncoming car could appear to be shifting into the driver’s lane when, in fact, it is driving normally. From the victim vehicle’s point of view, however, this could warrant evasive action that puts the driver and pedestrians at serious risk.
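How MadRadar implements translation attacks is not detailed here, so the following is only a generic illustration of one ingredient such an attack could use: changing the motion a radar infers for a target. It assumes a 77 GHz carrier, a 50 µs chirp interval, and that the spoofed signal dominates the target's range bin; `slow_time` and `doppler_velocity` are hypothetical helpers. An FMCW radar infers radial velocity from the phase advance of a target's echo from one chirp to the next, so an injected per-chirp phase ramp shifts the measured velocity.

```python
import numpy as np

CARRIER_HZ = 77e9  # assumed carrier frequency
WAVELENGTH = 3e8 / CARRIER_HZ  # about 3.9 mm
CHIRP_PERIOD = 50e-6  # assumed chirp repetition interval, seconds


def slow_time(velocity, n_chirps=64, injected_step=0.0):
    """Value of a target's range bin across successive chirps.

    Radial motion advances the echo phase by 4*pi*v*Tc/lambda per chirp.
    injected_step is a hypothetical extra per-chirp phase an attacker adds.
    """
    k = np.arange(n_chirps)
    step = 4 * np.pi * velocity * CHIRP_PERIOD / WAVELENGTH + injected_step
    return np.exp(1j * step * k)


def doppler_velocity(samples):
    """Estimate radial velocity from the peak of the slow-time FFT."""
    n = len(samples)
    k = np.argmax(np.abs(np.fft.fft(samples)))
    phase_step = 2 * np.pi * np.fft.fftfreq(n)[k]  # signed radians per chirp
    return phase_step * WAVELENGTH / (4 * np.pi * CHIRP_PERIOD)


fake_step = 4 * np.pi * 6.0 * CHIRP_PERIOD / WAVELENGTH  # phase ramp worth 6 m/s
print(doppler_velocity(slow_time(0.0)))  # 0.0
print(doppler_velocity(slow_time(0.0, injected_step=fake_step)))  # about 6 m/s
```

With these assumed values the velocity resolution is about 0.6 m/s and the unambiguous range is roughly ±19.5 m/s; the sign convention for "approaching" versus "receding" is also an assumption.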

Building More Secure Autonomous Vehicles

Duke University researchers hope their work can help OEMs strengthen automotive radar security against MadRadar and similar attacks. For example, they suggest that dynamic frequency hopping can randomize the radar’s operating point, preventing systems such as MadRadar from locking onto the radar’s signal and predicting its response.
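The intuition behind frequency hopping can be sketched in a few lines. This is a hypothetical model, not the researchers' proposal in detail: it assumes the 76 to 81 GHz band is split into 300 MHz sub-bands, that the radar picks a sub-band per chirp from a secret seed, and that a naive attacker simply reuses the last frequency it observed. `channel_starts`, `hopping_schedule`, and `replay_hit_rate` are illustrative names.

```python
import random

BAND_START_HZ = 76e9  # 76 to 81 GHz automotive radar band
BAND_STOP_HZ = 81e9
CHIRP_BW_HZ = 300e6  # assumed bandwidth of each chirp


def channel_starts(start=BAND_START_HZ, stop=BAND_STOP_HZ, bw=CHIRP_BW_HZ):
    """Non-overlapping sub-bands a single chirp could occupy."""
    n = int((stop - start) // bw)
    return [start + i * bw for i in range(n)]


def hopping_schedule(seed, n_chirps, channels):
    """Pseudo-random start frequency per chirp, driven by a seed the radar keeps secret.

    random.Random is NOT cryptographically secure; a real design would need an
    unpredictable source (e.g., a keyed cipher or the secrets module).
    """
    rng = random.Random(seed)
    return [rng.choice(channels) for _ in range(n_chirps)]


def replay_hit_rate(schedule):
    """Fraction of chirps an attacker matches by reusing the last observed frequency."""
    hits = sum(prev == cur for prev, cur in zip(schedule, schedule[1:]))
    return hits / (len(schedule) - 1)


channels = channel_starts()
fixed = [channels[0]] * 1000
hopping = hopping_schedule(seed=2024, n_chirps=1000, channels=channels)
print(len(channels), replay_hit_rate(fixed))  # 16 1.0
print(replay_hit_rate(hopping))  # roughly 1/16 for a uniform random schedule
```

A fixed-frequency radar is matched on every chirp, while the hopping radar is matched only about as often as chance allows. This model covers only an attacker that predicts from past observations; it says nothing about an attacker fast enough to detect and follow each chirp in real time.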