Abstract

Appearance-based gender identification of the horsehair crab [Erimacrus isenbeckii (Brandt, 1848)] is important for preventing indiscriminate fishing of female crabs. Although their gender is easily identified by visual observation of their abdomen because of a difference in the forms of their sex organs, most of the crabs settle with their shell side upward when placed on a floor, making visual gender identification difficult. Our objective is to use deep learning to identify the gender of the horsehair crab on the basis of images of their shell and abdomen sides. Deep learning was applied to a photograph of 60 males and 60 females captured in Funka Bay, Southern Hokkaido, Japan. The deep learning algorithms used the AlexNet, VGG-16, and ResNet-50 convolutional neural networks. The VGG-16 network achieved high accuracy. Heatmaps were enhanced near the forms of the sex organs in the abdomen side (F-1 measure: 98%). The bottom of the shell was enhanced in the heatmap of a male; by contrast, the upper part of the shell was enhanced in the heatmap of a female (F-1 measure: 95%). The image recognition of the shell side based on a deep learning algorithm enabled more precise gender identification than could be achieved by human-eye inspection.

Introduction

The horsehair crab [Erimacrus isenbeckii (Brandt, 1848)] is a high-quality marine product in Japan. The capture of female horsehair crabs is prohibited in Hokkaido, Japan, and their capture for academic research purposes requires permission. Immediate gender identification and selective capture within a limited time and in the limited space on a fishing boat are important. Fishermen in Hokkaido, who have many years of experience in the fisheries industry, can distinguish crab genders by visual inspection. Some academic research regarding the gender identification of crabs has been reported. Toyota et al. investigated morphometric gender identification of the horsehair crab1. Gender identification based on 3D measurement of the shell geometry of the horsehair crab has also been investigated2.

Computer vision can automatically detect the characteristics of medical images3,4 on the basis of intuitive and high-precision characteristics at high speed and is becoming an important method in medical fields. Studies in the field of medical gender identification have used, for example, an eyeground image5 and an image of a bone6,7. In biology, mosquito classification has been studied8,9,10,11. However, the literature contains few studies12,13,14 on the recognition and classification of crabs using machine learning and deep learning methods. Among the limited examples, Wu et al.15 used abdomen images for swimming crabs and mud crabs to identify individual crabs via deep neural networks.

Zhang et al. have suggested a shell detection–recognition method that combines principal component analysis (PCA) with YOLOv5 (You Only Look Once v5), resulting in an improved method to recognize individual Chinese mitten crabs16. Cui et al.17 developed a gender classification method for the Chinese mitten crab using a deep convolutional neural network. Their original algorithm comprised a batch normalization technique and a dropout technique, and their proposed method achieved 98.90% classification accuracy. Notably, gender identification of the horsehair crab using machine learning and deep learning has not been reported.

In the present study, we investigated gender identification and its prediction precision in the shell and abdomen images of horsehair crabs using deep learning. For deep learning, we employed established and conventional network architectures. In addition, visualization of class-discriminative localization maps of the present deep learning models explains which parts of the horsehair crab images are focused on in the process of gender identification.

Methods

Horsehair crab dataset

A total of 120 crabs consisting of 60 males and 60 females were collected in Funka Bay, Pacific Ocean, Southern Hokkaido, Japan, in May 2023. Images of the shell and abdomen geometry of the horsehair crabs were used in the present study. Fishing permission for horsehair crabs used in this study was granted by the Hokkaido Governor. The images were taken using a camera (ILCE-6600, SONY) with a macro lens (SEL30M35, SONY). Each original image had a resolution of 6000 × 4000 pixels with 24-bit RGB channels. The original images were cropped to smaller sizes of 3400 × 3400 pixels to remove extra edges of the images. The cropped images were then compressed to 224 × 224 pixels to match the input sizes of the following deep convolution neural network (DCNN) models. Through this manual image acquisition process, we collected ~ 120 images for each target crab for the shell or abdomen geometry, totaling ~ 240 images.

Sec2

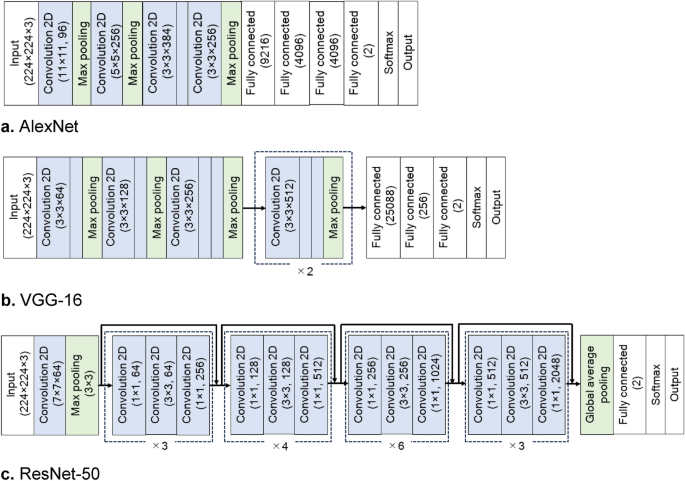

In the present study, we used the following established DCNN models: AlexNet18, VGG-1619, and ResNet-5020. These DCNN architectures are illustrated in Fig. 1. They consist of feature extraction and classification parts. The feature extraction part is formed by a series of convolution layers. The input RGB images are 224 × 224 pixels. Through successive convolution layers, the spatial dimensions of the feature maps were reduced from 224 × 224 to 13 × 13 (AlexNet), 14 × 14 (VGG-16), and 7 × 7 (ResNet-50). At the ends of the feature extraction part, the feature maps were flattened into a one-dimensional array for the classification through downstream fully connected (FC) layers. The present DCNN models were designed to classify binary classes of female and male so that the classification part was modified from its original structure. Outputs of the final FC layer for a target class c, denoted by yc, were fed into the softmax function to obtain the classification probability of each target class.

Deep convolutional neural network architectures used in the present study: (a) AlexNet, (b) VGG-16, and (c) ResNet-50.

Training the DCNNs

For the present dataset of the crab images, we employed fine-tuning to train the aforementioned DCNN models, except AlexNet. Pre-trained weights of AlexNet were not available. The model weights were initialized by the uniform random distribution and trained from scratch. In the cases of VGG-16 and ResNet-50, model weights pre-trained on ImageNet were used for their initial parameters. In the case of VGG-16, the pre-trained weights were partially retrained by the present dataset of the crab images. Only the last block consisted of the three consecutive convolution layers and the single max pooling layer before the FC layers were retrained together with the FC layers. In the case of ResNet-50, the pre-trained weights were totally retrained. As an optimizer, the stochastic gradient descent (SGD) was used with the learning rate set to 0.01. The categorial cross-entropy was employed as a loss function. The K-fold cross-validation was performed (K = 5 in the present study). The whole dataset was randomly partitioned into 80–20%, where 80% of the whole dataset was used for the training. Ten percent of the training dataset was employed for the validation during the training to monitor the training progress (Fig. 2). The remaining 20% of the whole dataset was reserved for testing of the classification performance. Each partitioned dataset contained the same quantities of female and male data. The training epoch was set to 2000, 400, and 1000 in the cases of AlexNet, VGG-16, and ResNet-50, respectively. Depending on the network architectures, the epoch to reach the optimal validation accuracy differed. For the model training and testing, we used the Keras framework on a Python 3.9.16 environment and an NVIDIA GeForce RTX 4080 16 GB GPU platform. Keras 2.9.0, TensorFlow 2.9.1, and NumPy 1.22.3 were employed.

Validation accuracy of the models during the training: (a) AlexNet, (b) VGG-16, and (c) ResNet-50. In all the models, optimal validation accuracy is achieved when fine-tuning is applied for VGG-16 and ResNet-50.

Results and discussion

Classification performance

In the present study, we evaluated both the F-1 measure and the accuracy, which are defined as

where TP, TN, FP, and FN represent true-positive, true-negative, false-positive, and false-negative results in the binary classification, respectively. Table 1 summarizes the classification performance of the present DCNN models. The F-1 measure is the five-time averaged value with the corresponding standard deviation. Notably, all the DCNN models achieved significantly high F-1 measures with a relatively low standard deviation, indicating that they all demonstrated sufficiently accurate and stable classification capability to distinguish crab gender. Although AlexNet has a relatively shallow network architecture, it demonstrated a relatively high classification capability, where the F-1 measure was greater than approximately 90% in both the shell and abdomen cases. VGG-16 and ResNet-50, which have deeper network architectures, achieved slightly higher classification performance. We speculatively attribute this better performance to the better feature extraction of the deeper network architecture and to the fine-tuning achieving more optimal weights for higher classification performance.

The high precision led to the results discussed in the preceding paragraph for VGG-16. To verify the result, an explainable visualization method for gender identification is presented in the following subsection.

Visualization of feature activation

To further comprehend the gender identification by the DCNN models, we employed Grad-CAM21 to visually explain the DCNN classification results. The class-discriminative localization map Lc of width u and height v for class c was computed using the following equation:

where ({alpha }_{k}^{c}) denotes the neuron importance weights and Ak denotes feature maps of a convolution layer. The neuron importance weights were evaluated by global average pooling the gradients as follows:

where (frac{partial {y}^{c}}{partial {A}_{ij}^{k}}) denotes the gradients via backpropagation until the convolution layer. Figure 3 shows the Grad-CAM scheme. The class-discriminative localization maps were originally coarse. They were resized to match the size of the input images by bilinear interpolation and then overlayed on the input image. Figures 4 and 5 show typical visualization explanations obtained by the Grad-CAM scheme for the VGG-16 neural network. The class-discriminative localization maps were generated from the last convolution layer in the VGG-16 architecture.

Schematic of the gender identification and visual explanation in the DCNN model. The class of a given horsehair crab image is predicted by two steps: (1) extracting hierarchical features and (2) classifying these features. In the feature-extraction step, feature maps are generated by filters at each convolution layer. The feature maps are used for the visual explanation by Grad-CAM.

Two major cases of classification in the shell side. Samples are shown for a (a) male and (b) female with their discriminative regions as heatmaps.

Two major cases of classification in the abdomen side. Samples are shown for a (a) male and (b) female with their discriminative regions as heatmaps.

Figure 4 shows the analysis of the image of the shell side. The heatmap is enhanced in the lower area for a male and in the upper area for a female. The highest point of these contours is completely divided at the carapace width (CW). Because the upper half of the female’s shell is highlighted and the lower half of the male’s shell is highlighted, in the case of gender identification of this shell side, using images of the whole shell is inevitable, consistent with Toyota et al.1 morphologic gender identification of the shell.

The abdomen geometry of a horsehair crab is shown in Fig. 5. Although Wu et al.15 identified individual crabs using a part-based deep learning network for texture features of the abdomen, our approach achieved gender identification without such a partition strategy. For this reason, in the case of gender identification, the partition strategy is not required for the present classification scheme because the form of the sexual organs is geometrically clear.

Conclusions

We demonstrated the effectiveness of deep neural networks for image recognition and revealed gender differences in the shell and abdomen geometry of the horsehair crab. We created a dataset that contained ~ 120 images of each shell and abdomen geometry of the horsehair crab. From the images of the abdomen geometry of a crab, the model could distinguish between a male and a female in the form of the sex organs, similar to gender identification by human visual inspection. The analysis of the images of the shell side included a more interesting result: Even though gender classification was impossible by human visual inspection, the present deep learning models enabled male and female classification with high precision. The F-1 measure reached approximately 95%. The discriminative region in the visual explanation was concentrated on the upper side of the shell for females and on the lower side of the shell for males.

Data availability

The datasets used and/or analyzed during the current study may be made available from the corresponding author and the first author (e-mail: [email protected]) on reasonable request.

References

-

Toyota, K., Izumi, K., Ichikawa, T., Ohira, T. & Takeuchi, K. Morphometric approaches reveal sexual differences in the carapace shape of the horsehair crab, Erimacrus isenbeckii (Brandt, 1848). Aquat. Anim. AA2020, AA2020–AA2021. https://doi.org/10.34394/aquaticanimals.AA2020.0_AA2020-1 (2020).

Google Scholar

-

Toyota, K., Arai, Y., Miyagawa, S., Kogo, Y. & Takeuchi, K. Novel validating indices to indicate sexual differences in the horsehair crab Erimacrus isenbeckii (Brandt, 1848). Aquat. Anim. AA2021, 1–8. https://doi.org/10.34394/aquaticanimals.AA2021.0_1_6 (2021).

Google Scholar

-

Ker, J., Wang, L. P., Rao, J. & Lim, T. Deep learning applications in medical image analysis. IEEE Access 6, 9375–9389. https://doi.org/10.1109/Access.2017.2788044 (2018).

Google Scholar

-

Babenko, B. et al. Detection of signs of disease in external photographs of the eyes via deep learning. Nat. Biomed. Eng. 6, 1370. https://doi.org/10.1038/s41551-022-00867-5 (2022).

Google Scholar

-

Korot, E. et al. Predicting sex from retinal fundus photographs using automated deep learning. Sci. Rep. https://doi.org/10.1038/s41598-021-89743-x (2021).

Google Scholar

-

Intasuwan, P., Palee, P., Sinthubua, A. & Mahakkanukrauh, P. Comparison of sex determination using three methods applied to the greater sciatic notch of os coxae in a Thai population: Dry bone morphology, 2-dimensional photograph morphometry, and deep learning artificial neural network. Med. Sci. Law 62, 261–268. https://doi.org/10.1177/00258024221079092 (2022).

Google Scholar

-

Malatong, Y., Intasuwan, P., Palee, P., Sinthubua, A. & Mahakkanukrauh, P. Deep learning and morphometric approach for sex determination of the lumbar vertebrae in a Thai population. Med. Sci. Law 63, 14–21. https://doi.org/10.1177/00258024221089073 (2023).

Google Scholar

-

Minakshi, M., Bharti, P., Bhuiyan, T., Kariev, S. & Chellappan, S. A framework based on deep neural networks to extract anatomy of mosquitoes from images. Sci. Rep. https://doi.org/10.1038/s41598-020-69964-2 (2020).

Google Scholar

-

Kittichai, V. et al. Deep learning approaches for challenging species and gender identification of mosquito vectors. Sci. Rep. 11, 4838. https://doi.org/10.1038/s41598-021-84219-4 (2021).

Google Scholar

-

Park, J., Kim, D. I., Choi, B., Kang, W. & Kwon, H. W. Classification and morphological analysis of vector mosquitoes using deep convolutional neural networks. Sci. Rep. 10, 1012. https://doi.org/10.1038/s41598-020-57875-1 (2020).

Google Scholar

-

Pataki, B. A. et al. Deep learning identification for citizen science surveillance of tiger mosquitoes. Sci. Rep. 11, 4718. https://doi.org/10.1038/s41598-021-83657-4 (2021).

Google Scholar

-

Wang, D. Y., Vinson, R., Holmes, M., Seibel, G. & Tao, Y. Convolutional neural network guided blue crab knuckle detection for autonomous crab meat picking machine. Opt. Eng. https://doi.org/10.1117/1.Oe.57.4.043103 (2018).

Google Scholar

-

Wang, D., Holmes, M., Vinson, R., Seibel, G. & Tao, Y. in 2018 ASABE Annual International Meeting ASABE Paper No. 1800570 1 (ASABE, St. Joseph, 2018).

-

Wang, H. et al. Quality grading of river crabs based on machine vision and GA-BPNN. Sensors https://doi.org/10.3390/s23115317 (2023).

Google Scholar

-

Wu, C. J. et al. A part-based deep learning network for identifying individual crabs using abdomen images. Front. Mar. Sci. https://doi.org/10.3389/fmars.2023.1093542 (2023).

Google Scholar

-

Zhang, J. Z., Wang, S. X., Zhang, S. M., Li, J. K. & Sun, Y. Y. Research on target detection and recognition algorithm of Eriocheir sinensis carapace. Multimed. Tools Appl. https://doi.org/10.1007/s11042-023-15228-w (2023).

Google Scholar

-

Cui, Y. H., Pan, T. H., Chen, S. & Zou, X. B. A gender classification method for Chinese mitten crab using deep convolutional neural network. Multimed. Tools Appl. 79, 7669–7684. https://doi.org/10.1007/s11042-019-08355-w (2020).

Google Scholar

-

Krizhevsky, A., Sutskever, I. & Hinton, G. E. ImageNet classification with deep convolutional neural networks. Commun. ACM 60, 84–90. https://doi.org/10.1145/3065386 (2017).

Google Scholar

-

Simonyan, K. Z., A. in ICLR (San Diego, 2015).

-

Shafiq, M. & Gu, Z. Q. Deep residual learning for image recognition: A survey. Appl Sci https://doi.org/10.3390/app12188972 (2022).

Google Scholar

-

Selvaraju, R. R. et al. Grad-CAM: Visual explanations from deep networks via gradient-based localization. Int. J. Comput. Vis. 128, 336–359. https://doi.org/10.1007/s11263-019-01228-7 (2020).

Google Scholar

Author information

Authors and Affiliations

Contributions

T.O., K.Takeuchi, and S.S. took photographs of crab samples. Y.U. constructed a dataset from the crab’s photographs and performed the entire process of training and visualizing DCNN models. Y.U. and S.S. choose models for the neural network architectures and wrote most part of the manuscript. K.Toyota and T.O. contributed to comparing morphological keys to the visualized feature activation. K.Takeuchi and S.S. designed the study. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

Reprints and Permissions

About this article

Cite this article

Ueki, Y., Toyota, K., Ohira, T. et al. Gender identification of the horsehair crab, Erimacrus isenbeckii (Brandt, 1848), by image recognition with a deep neural network.

Sci Rep 13, 19190 (2023). https://doi.org/10.1038/s41598-023-46606-x

-

Received: 18 July 2023

-

Accepted: 02 November 2023

-

Published: 13 November 2023

-

DOI: https://doi.org/10.1038/s41598-023-46606-x

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.