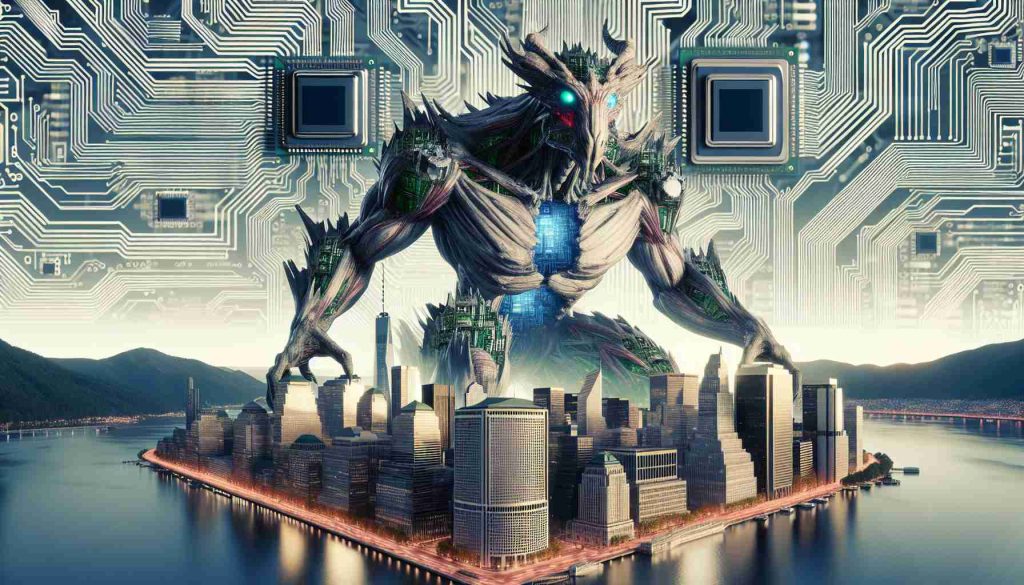

In a world where artificial intelligence is no longer just a sci-fi fantasy, one AI titan stands head and shoulders above the rest. Unraveling the secrets of language like a modern-day Rosetta Stone, OpenAI’s GPT-3 has captured the imaginations of many. Yet behind its eloquence lies a voracious beast with an insatiable appetite for raw computing power. Welcome to the Silicon Jungle of GPUs, the lifeblood of this AI leviathan.

Before venturing deeper, let’s get acquainted with the beasts of burden known as GPUs. These Graphics Processing Units have outgrown their gaming origins to become the workhorses of deep learning. Their ability to run thousands of arithmetic operations in parallel is the key to how quickly a model like GPT-3 can be trained.

Behold GPT-3, the Generative Pre-trained Transformer 3, a linguistic colossus boasting 175 billion parameters. Training such a titan demands not just power, but a legion of GPUs. Whisperings from the silicon grapevine suggest OpenAI marshaled an army – a staggering 3,000 to 5,000 GPUs – for this epic computational crusade.
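To put "175 billion parameters" into perspective, here is a minimal back-of-envelope sketch. It assumes weights stored as 16-bit floats (2 bytes each), a hypothetical GPU with 32 GB of memory, and the widely used rule of thumb that training costs roughly 6 × parameters × tokens floating-point operations, with an assumed 300 billion training tokens. None of these inputs come from this article, and the helper function names are purely illustrative.

```python
import math

# All inputs below are assumptions for illustration, not figures from OpenAI.
PARAMS = 175e9             # N: parameter count quoted for GPT-3
BYTES_PER_PARAM = 2        # 16-bit (fp16/bf16) weight storage (assumption)
GPU_MEMORY_BYTES = 32e9    # one hypothetical 32 GB GPU (assumption)
TOKENS = 300e9             # D: number of training tokens (assumption)


def weight_memory_gb(params: float, bytes_per_param: int) -> float:
    """Memory needed just to store the weights, in GB (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


def min_gpus_for_weights(params: float, bytes_per_param: int, gpu_mem_bytes: float) -> int:
    """Smallest number of GPUs whose combined memory can hold the weights alone.

    Ignores gradients, optimizer state and activations, which add much more.
    """
    return math.ceil(params * bytes_per_param / gpu_mem_bytes)


def training_flops(params: float, tokens: float) -> float:
    """Rough training compute using the 6 * N * D rule of thumb."""
    return 6 * params * tokens


print(f"Weights alone: {weight_memory_gb(PARAMS, BYTES_PER_PARAM):.0f} GB")
print(f"GPUs just to hold the weights: {min_gpus_for_weights(PARAMS, BYTES_PER_PARAM, GPU_MEMORY_BYTES)}")
print(f"Approximate training compute: {training_flops(PARAMS, TOKENS):.2e} FLOPs")
```

Under these assumptions the weights alone occupy about 350 GB, more than ten such GPUs can hold, and training lands around 3 × 10^23 FLOPs. Gradients and optimizer state multiply the memory bill several times over, which is part of why the GPU count climbs so high.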

Imagine GPUs as countless scribes, simultaneously copying out the vast anthology of human knowledge that GPT-3 must absorb. Divided up like an ancient construction project, this grand task turned what would be an impossibly long job for any single processor into a training run of practical length, and dreams into reality.
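How much can the scribes speed things up? A minimal, idealized sketch: it reuses the rough 6 × N × D compute estimate (with an assumed 300 billion tokens), assumes a hypothetical sustained throughput of 30 trillion FLOPs per second per GPU, and treats work as splitting perfectly across GPUs unless a `scaling_efficiency` factor below 1.0 is supplied to stand in for communication overhead. The function name and every number here are illustrative assumptions, not OpenAI's actual figures.

```python
# Idealized wall-clock estimate; every input is an assumption for illustration.
TOTAL_FLOPS = 6 * 175e9 * 300e9      # rough training compute (6 * N * D rule of thumb)
SUSTAINED_FLOPS_PER_GPU = 30e12      # hypothetical 30 TFLOP/s achieved per GPU
SECONDS_PER_DAY = 86_400


def training_days(total_flops: float, flops_per_gpu: float, num_gpus: int,
                  scaling_efficiency: float = 1.0) -> float:
    """Days to finish if the work is divided evenly across num_gpus GPUs.

    scaling_efficiency (0 < e <= 1) crudely models time lost to communication.
    """
    if num_gpus < 1:
        raise ValueError("need at least one GPU")
    if not 0 < scaling_efficiency <= 1:
        raise ValueError("scaling_efficiency must be in (0, 1]")
    effective_rate = flops_per_gpu * num_gpus * scaling_efficiency
    return total_flops / effective_rate / SECONDS_PER_DAY


for n in (1, 1_000, 3_000, 5_000):
    days = training_days(TOTAL_FLOPS, SUSTAINED_FLOPS_PER_GPU, n)
    print(f"{n:>5} GPUs: {days:,.1f} days")
```

With these made-up inputs, a lone GPU would need centuries, while a few thousand bring the job down to weeks. Real clusters fall short of perfect scaling, which is what the efficiency factor is there to explore.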

While the sheer number of GPUs required to train GPT-3 might flabbergast some, those in the know will nod sagely, appreciating the gargantuan scale OpenAI wrestled with. But this raises the question: what sort of sorcery allows these GPUs to perform in dazzling harmony?

The magic lies within the deep learning tasks themselves. Training a transformer consists largely of enormous matrix multiplications, built from millions of independent multiply-add operations that can run at the same time, and this inherent parallelism maps neatly onto the GPU’s thousands of cores. Taming computational chaos into a ballet of binary precision, these GPUs hold the keys to artificial intelligence of unprecedented sophistication.
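To make the "scribes working in harmony" picture concrete, here is a toy sketch of data parallelism, one common way of spreading training across GPUs: each worker computes a gradient on its own slice of the batch, and the slice gradients are then averaged (the job an all-reduce performs on real hardware). This is not a description of how OpenAI partitioned GPT-3; a model that large also has to be split across devices. The one-parameter model, the data and the helper names are all hypothetical.

```python
from typing import List, Tuple

# (x, y) pair for a hypothetical one-parameter model y_hat = w * x
Example = Tuple[float, float]


def mse_grad(w: float, batch: List[Example]) -> float:
    """Gradient of the mean squared error with respect to w for y_hat = w * x."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


def shard(batch: List[Example], num_workers: int) -> List[List[Example]]:
    """Split the batch into equal-sized contiguous shards, one per worker."""
    if len(batch) % num_workers != 0:
        raise ValueError("batch size must be divisible by num_workers")
    size = len(batch) // num_workers
    return [batch[i * size:(i + 1) * size] for i in range(num_workers)]


def data_parallel_grad(w: float, batch: List[Example], num_workers: int) -> float:
    """Each 'worker' computes a gradient on its shard; the results are averaged."""
    shard_grads = [mse_grad(w, s) for s in shard(batch, num_workers)]
    return sum(shard_grads) / num_workers


batch = [(float(x), 3.0 * x) for x in range(1, 9)]  # toy data generated with true w = 3
w = 0.5
print(mse_grad(w, batch), data_parallel_grad(w, batch, num_workers=4))
```

Because the shards are equal in size, the average of the shard gradients equals the full-batch gradient, so the workers can compute independently and only need to exchange results once per step.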

Yet this GPU saga unfolds in an era of relative resource abundance and silicon largesse. Could such a spectacle become a relic as efficiency gains and algorithmic breakthroughs march on?

As OpenAI’s GPT-3 pushes the edge of AI research, the GPU infrastructure is its studio, housing thousands of computational artisans. But as with any art, evolution is inevitable. The technology of tomorrow may pare down the GPU horde, much as a sculptor chips away excess marble to reveal the masterpiece within.

To sum up, GPT-3, with its 175 billion parameters, reportedly relied on a GPU arsenal numbering in the thousands. These computational cohorts are what made training such a powerful model feasible in a reasonable time. Looking ahead, we can envision a horizon where hardware advances and more efficient training techniques reduce the reliance on such extensive GPU firepower.

As we stand at the precipice of AI discovery, one thing is clear – the balance of machine learning brilliance and brute computing strength in shaping the future is as delicate as it is astonishing.

For further illumination on this technological marvel, the guardians of knowledge – AI researchers and OpenAI’s documentation – offer a lighthouse in the sprawling sea of machine learning. Let us take the torch and venture forth into the brave new world of AI, powered by a legion of GPUs.

Marcin Frąckiewicz is a renowned author and blogger, specializing in satellite communication and artificial intelligence. His insightful articles delve into the intricacies of these fields, offering readers a deep understanding of complex technological concepts. His work is known for its clarity and thoroughness.