Abstract

The optical microscope has revolutionized biology since at least the 17th century, progressing from a largely observational tool to a powerful bioanalytical platform. However, realizing its full potential for studying live specimens is hindered by a daunting array of technical challenges. Here, we examine the current state of live imaging to explore the barriers that must be overcome and the possibilities that lie ahead. We venture to envision a future in which we can visualize and study everything, everywhere, all at once, from the intricate inner workings of a single cell to the dynamic interplay across entire organisms, and in which scientists anywhere can access the necessary microscopy technologies.

Introduction

Optical microscopy remains one of the most rapidly developing technologies in scientific research1,2,3. Compared with other techniques in the life sciences, optical microscopy makes it possible to visualize biology in its physiological context. In less than three decades, several important breakthroughs in light microscopy have revolutionized the life sciences. These include, but are not limited to: (i) genetically encoded fluorescent proteins for live-cell imaging4,5,6, (ii) light sheet microscopy7,8,9,10,11,12, (iii) super-resolution microscopy13,14,15,16,17,18,19,20,21,22,23,24, (iv) label-free imaging approaches25,26,27,28,29, (v) machine learning30,31,32,33,34, and (vi) imaging technologies capable of adapting to the biology of the specimen35,36,37,38,39,40,41,42,43,44,45,46. Together, these innovations have allowed biologists to understand the fundamental processes of life across a wide range of spatiotemporal scales and under conditions previously considered incompatible with imaging.

Despite these achievements, we continue to face obstacles in deciphering the interplay among the many processes that together sustain life. This is due largely to the limited ability of current technologies to combine all the imaging parameters required to comprehend the biology in question in its totality. For instance, it is extraordinarily challenging, and often impossible, to simultaneously maximize spatial resolution, imaging speed, and signal-to-noise ratio while minimizing photodamage1,2,3,47,48,49,50,51. Current technologies remain largely inadequate in coping with (i) the unpredictability of biological events, (ii) biomolecules or phenomena that cannot be easily labeled, and (iii) the need to replicate physiological conditions without perturbation. Overcoming these challenges necessitates a synergistic intersection of hardware, software, and wet lab development. In this Perspective, we discuss some of the key barriers that continue to stymie imaging science. Taking these challenges as a starting point, we explore how technologies must evolve to advance the various frontiers of the life sciences. A complete picture of how life functions can be attained only if we leverage all these technologies together52. In essence, we reimagine the future of optical microscopy as one in which we can image anything, anywhere, at any time.

Anytime

From the movement of single molecules on millisecond timescales to the delicate coordination of cell differentiation over days or weeks, the dynamics of life span a staggering billion-fold range of timescales or more. Light microscopy is arguably the only analytical method that can characterize such a wide range of changes in living samples. It is the specimen, however, that ultimately restricts what can be captured in any single experiment. For instance, following the subcellular location of a fluorescent molecule with millisecond precision over several days is, in any practical sense, impossible. There are two important reasons for this. First, any fluorophore can emit only a limited number of photons before it photobleaches, termed its photon budget53. Second, prolonged, continuous exposure to intense light inevitably deteriorates the health of the specimen itself, undermining the validity of the very observation being made.

Hence, life scientists have largely been forced to image a specimen either (i) rapidly, using high-intensity light, for short periods, or (ii) slowly and/or with less signal to permit longer imaging durations. Worse, this decision must usually be made before the experiment starts, reflecting an often tenuous “best guess” as to the timescales involved. Fortunately, much work over the past decades has aimed to reduce such costly compromises. For example, by confining illumination to the focal plane, light sheet microscopy7,8,9,10,11 permits high-speed imaging across large sample volumes while better preserving sample health and photon budget. This remarkable technique has enabled researchers to, for example, monitor whole-brain neuronal activity in zebrafish by imaging ~100,000 neurons every second at single-cell resolution10,54,55. Combined with suitable reporters, such as genetically encoded calcium and voltage sensors56,57, such volumetric, quantitative functional imaging becomes a powerful approach for gaining insights into dynamic biological processes in situ. In tandem, improved chemistry has produced a new generation of bright, genetically encodable dyes capable of withstanding more illumination for longer periods58. Further, high-quality information can now be extracted from very low-signal images through judicious use of machine learning techniques32,59,60,61,62,63,64. Yet despite these dazzling advances, an uncomfortable truth remains: biological systems are replete with rare, transient, and unpredictable events that, despite their profound effects, cannot be faithfully captured by conventional time-lapse microscopy.
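The trade-off between imaging rapidly and imaging for a long time follows directly from the finite photon budget. The sketch below is a deliberately idealized illustration. It assumes a hypothetical budget of 10^6 detected photons, shot-noise-limited detection with no background, and deterministic rather than stochastic bleaching. Spending fewer photons per frame buys more frames, but the per-frame signal-to-noise ratio falls as the square root of the photons collected.

```python
import math


def frames_from_budget(photon_budget: float, photons_per_frame: float) -> int:
    """Frames that can be acquired before an idealized photon budget is spent."""
    if photons_per_frame <= 0:
        raise ValueError("photons_per_frame must be positive")
    return int(photon_budget // photons_per_frame)


def shot_noise_snr(photons_per_frame: float) -> float:
    """Shot-noise-limited SNR: signal N over Poisson noise sqrt(N)."""
    return math.sqrt(photons_per_frame)


# Hypothetical budget of detected photons (no background, no detector noise)
budget = 1e6
for per_frame in (10_000, 1_000, 100):
    n_frames = frames_from_budget(budget, per_frame)
    print(f"{per_frame:>6} photons/frame -> {n_frames:>6} frames, "
          f"SNR ~ {shot_noise_snr(per_frame):.0f}")
```

Under these assumptions, a hundredfold increase in the number of frames costs a tenfold loss in per-frame SNR, which is why the rate must otherwise be fixed in advance.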

It is increasingly apparent that “smarter” tools are needed to truly span the wide range of biologically relevant timescales. Rather than serving as mere passive observers, imaging systems that reconfigure themselves in response to a specimen present a new set of opportunities42,65. Previous developments in adaptive imaging techniques have laid critical groundwork. For example, stimulated emission depletion (STED)66 microscopy has benefited greatly from guided illumination, as shown in ref. 67. In that work, avoiding high-power illumination in specific areas of the malaria-causing parasite Plasmodium falciparum prevented catastrophic sample damage. Moreover, dynamically adjusting light sheet microscope alignment to continuously maximize image quality (and therefore optimize illumination power) was instrumental in capturing long-term developmental events in Drosophila, zebrafish, and even mouse embryos in exquisite and unprecedented detail over days of continuous imaging68,69.

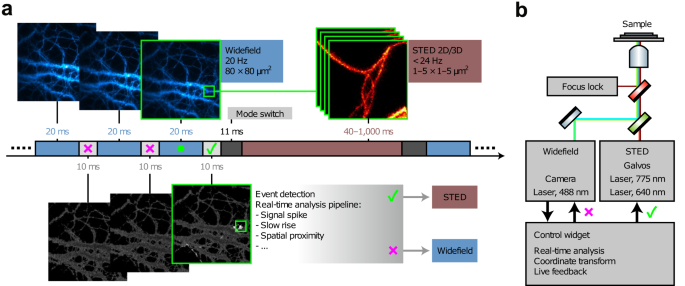

Yet in these cases, the microscopes were completely ignorant of the specific biology they were observing. A more “content-aware” methodology is needed to push beyond current barriers and capture other challenging, yet critical, processes. Mitochondrial division, for instance, is particularly difficult to characterize with fluorescence microscopy. Individual events are typically rapid and sporadic, involve highly mobile organelles within the cytoplasm, and are notoriously photosensitive. The adaptive illumination approaches outlined above would not ease these challenges. The critical missing ingredient is an “event detector”: a way for the microscope to recognize an impending biological process and use it to decide how the instrument should be configured. Recent work by Mahecic et al.70 shows how such a “self-driving” microscope might work. Event-driven microscopy12,42,70,71,72,73,74 is not based on a novel optical design or advanced labeling technologies; rather, it incorporates a model of biological change together with a feedback loop. In the work of Mahecic et al., the accumulation of DRP1 protein at mitochondria, accompanied by characteristic shape changes of the organelle itself, was assumed to indicate a looming fission event. In the absence of these indicators, the microscope adopted a “wait and see” mode, capturing images slowly to preserve photon budget and sample health. During this time, however, each image underwent a sophisticated neural network-based analysis to look for fission indicators. Once these were found, the microscope automatically switched to a high-speed configuration to capture the division process with high fidelity until it was completed, after which the system returned to gentler conditions to await the next event. The advantages of this approach were striking.
Compared with fixed-rate acquisition, the imaging duration was extended tenfold, allowing five times as many events to be captured while simultaneously reducing photobleaching by the same factor. The same concept can even drive more complex reconfigurations of the microscope. Alvelid et al.73 deployed a biological event-driven trigger to switch between relatively gentle widefield epifluorescence and illumination-intensive STED imaging to better track calcium-mediated endocytosis, as shown in Fig. 1. Multimodal microscopy75,76,77,78 is eminently powerful in its own right, but using event detection to switch imaging modes autonomously will undoubtedly have an even larger impact. In short, event-driven microscopy stands to provide more biological information at lower cost by applying already-developed technologies more intelligently.
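The feedback loop at the heart of event-driven microscopy can be sketched as a small state machine. This is a minimal, hypothetical illustration and not the implementation used by Mahecic et al. or Alvelid et al. The neural network detector is replaced by a precomputed sequence of scores. The threshold, the frame intervals, and the number of fast frames are arbitrary placeholders.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class Mode:
    name: str
    interval_s: float  # hypothetical time between frames in this mode


SLOW = Mode("slow", 10.0)  # gentle "wait and see" imaging
FAST = Mode("fast", 0.5)   # high-speed imaging of a detected event


def event_driven_schedule(scores: Sequence[float], threshold: float,
                          fast_frames: int) -> List[Mode]:
    """Return the acquisition mode used for each successive frame.

    `scores` stands in for an event detector (e.g. a neural network)
    evaluated on each frame as it is acquired; detector scores are
    ignored while the system is already in FAST mode.
    """
    if fast_frames < 1:
        raise ValueError("fast_frames must be >= 1")
    schedule: List[Mode] = []
    mode, remaining = SLOW, 0
    for score in scores:
        schedule.append(mode)
        if mode is SLOW and score > threshold:
            mode, remaining = FAST, fast_frames  # event detected: speed up
        elif mode is FAST:
            remaining -= 1
            if remaining == 0:
                mode = SLOW                       # event captured: back off
    return schedule


scores = [0.1, 0.2, 0.9, 0.1, 0.1, 0.1, 0.1]
plan = event_driven_schedule(scores, threshold=0.5, fast_frames=2)
print([m.name for m in plan])
print("elapsed:", sum(m.interval_s for m in plan), "s")
```

Because the slow interval dominates whenever nothing is detected, light dose is concentrated on the frames that capture the event. In a real system, the score would be computed on each newly acquired image rather than supplied in advance.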

a Scheme of an event-triggered STED (etSTED) experiment. Images from widefield calcium imaging of Oregon Green 488 BAPTA-1 in neurons (blue, top left images) are analyzed by a real-time analysis pipeline (light gray, bottom images). Detection of an event (small green box) triggers modality switching to STED imaging at the corresponding location (red, top right image stack). b Schematic diagram of the etSTED microscope setup, combining widefield and STED imaging under a control widget. Images are reproduced with permission from ref. 73.

A main hurdle to its widespread adoption, however, lies in the development of robust event detection algorithms. While biologists possess a well-honed ability to predict the onset of a biological process, teaching computers to perform this task accurately and consistently is a non-trivial endeavor. Learned detectors rely entirely on the quality and specificity of the training data from which they are derived. Consequently, they are sensitive both to the particular biological problem at hand and to the specific microscope used to generate the data. Creating case-specific machine learning models is therefore far more feasible than building “out of the box” or “generalized” event detection algorithms. Doing so, however, requires easy-to-use tools that simplify the process for non-experts in machine learning. Without them, event-driven microscopy will remain a specialist’s tool, beyond the reach of the overwhelming majority of researchers. Many commercial microscopes already incorporate intelligent features, including feedback-driven acquisition. Although they do not yet offer all the advanced capabilities envisioned here, they are designed to be extended through user-accessible application programming interfaces (APIs), a promising avenue for the widespread adoption of event-driven microscopy in the future.

Event-driven microscopy excels at capturing dynamic events in an optimal manner, yet its full potential remains constrained by our ability to label specific molecules or events for observation. Realizing the true power of imaging anytime can only be accomplished if we are able to label anything of interest.

Anything

The basic constituents of life (proteins, nucleic acids, ions, carbohydrates, and lipids) belie an incomprehensible diversity of biological building blocks. Each is wholly indispensable; yet optical microscopy has so far been woefully unbalanced in its ability to characterize the labyrinth of interactions among these molecules in living systems. Take, for example, a complex disease such as cancer. Its insidiousness stems from its ability to modify any molecule necessary to ensure its survival and progression79,80,81. By focusing primarily on the proteomic and, to a lesser extent, genetic realms, we are at a severe disadvantage in even beginning to understand the full extent of the devastating havoc it wreaks in our bodies, let alone conquering it.

To a large extent, this reflects a difficult biochemical reality: some molecules are simply easier to label than others. Fluorescent protein fusions harness the cell’s own machinery to make virtually any gene product visible4,5,6. The more recent CRISPR/Cas9 revolution even allows such fusions to be expressed from a protein’s endogenous genetic locus82,83,84. The same technology can also be used to label specific DNA sequences85,86,87,88. Even mRNA can be visualized with single-copy sensitivity via techniques such as MS2 labeling89,90,91,92.

Other classes of biomolecules, however, have not been afforded such a wealth of tools, for reasons rooted in the chemical character of the molecules in question. Ions, and metal cations in particular, are unquestionably vital components as well. Yet their small size has so far defied direct labeling, requiring the use of indirect biosensors. Ca²⁺ and, to a lesser extent, Zn²⁺ are common targets for which a bevy of small-molecule indicators is available. However, the specificity of these indicators has been questioned, and long-term toxicity remains a concern93,94. Moreover, they cannot be targeted to specific cell populations. Fortunately, genetically encoded fluorescent proteins can now be used to image ionic species95,96,97,98. GCaMP and its variants have become indispensable tools for live-cell Ca²⁺ imaging56,99,100. Similar tools for visualizing Zn²⁺, pH, biomechanical forces, and voltage gradients are also available57,93,101,102,103,104,105. But the palette of genetically encoded ion sensors is still limited. Other vitally important species, such as Na⁺, Mg²⁺, and Fe²⁺, still await robust, reversible, and targetable sensors to render them visible. Doing so will require the full power and ingenuity of the protein engineering field and beyond.

Lipids and carbohydrates, however, are arguably the most inaccessible “dark matter”106 in biology. The plasma membrane alone contains hundreds of distinct lipid types107, many of which can carry various chemical modifications. Glycans108,109,110, for their part, represent the most abundant biomaterial on Earth. It is ironic, then, that targeting a specific lipid or glycan for imaging remains a highly underdeveloped field. To date, two general approaches have been explored. The first uses a fluorescent lipid- or glycan-binding protein to infer the location of the target, provided that a suitably specific and sensitive binding protein exists111,112,113. Yet this offers only an indirect measure of content and function. The second uses bioorthogonal, or “click”, chemistry113,114,115,116 to synthesize a fluorescent lipid or carbohydrate within the cell (Fig. 2a). This method, while perhaps more elegant, requires significant chemical expertise. Despite the availability of several commercial tools, click chemistry has not been as widely adopted as other labeling techniques.

a Target lipids that are transphosphatidylated by phospholipase D (PLD) can be visualized using click chemistry. The bottom images show labeled sites of PLD activity in HeLa cells. Scale bar = 10 µm (bottom left image), 50 µm (bottom right image). b Unmixed spectral image of an artificial mixture of 120 differently labeled E. coli. Scale bar = 25 µm. c Representative stimulated Raman scattering images of five melanoma cell lines (showing decreased differentiation from left to right) corresponding to the lipid peak (2845 cm⁻¹; top row in red), protein peak (2940 cm⁻¹; middle row in blue), and the ratio of lipid/protein (bottom row). Scale bar = 20 µm. The images in (a–c) are reproduced with permission from refs. 113,124,136, respectively.

To be sure, developing more robust, broadly applicable, and, most importantly, accessible labeling strategies for the full range of biomolecules is critical to the future of live-cell imaging. But a more fundamental question looms. Being able to image anything naturally leads one to wonder: could we image everything? And should we? Much of biology relies on a reductionist approach whereby only a handful of molecular players are investigated at a time; this will undoubtedly remain a bedrock of bioscience. Yet bringing a systems-level approach to live imaging offers tantalizing possibilities. It is also a challenge of monumental proportions that resists a single “catch-all” solution. The dream of simultaneously imaging the behavior of every molecular player in a signaling cascade may sound far-fetched, but our current capabilities, if integrated in novel ways, may make this goal achievable. Spectrally resolved imaging can increase the number of simultaneously detectable fluorophores 4- to 5-fold, provided a robust unmixing algorithm can be employed117,118,119. Further, we can look to readouts other than fluorescence intensity and color. Fluorescence lifetime120,121,122 and photobleaching rate123 provide orthogonal contrast that can further increase the number of distinguishable biomolecules in an image.
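Linear spectral unmixing models each pixel’s measured spectrum as a weighted sum of reference emission spectra and solves for the weights. The sketch below assumes noise-free data, Gaussian-shaped placeholder spectra, and NumPy’s ordinary least-squares solver. Practical pipelines often add a non-negativity constraint and must cope with noise and autofluorescence. The formulation also shows why at least as many detection channels as fluorophores are needed.

```python
import numpy as np


def unmix(pixels: np.ndarray, reference_spectra: np.ndarray) -> np.ndarray:
    """Estimate fluorophore abundances by ordinary least squares.

    pixels:            (n_pixels, n_channels) measured spectra
    reference_spectra: (n_fluorophores, n_channels) reference emission spectra
    returns:           (n_pixels, n_fluorophores) abundances
    """
    # Mixing model: pixels = abundances @ reference_spectra.
    # Transposed, each pixel spectrum equals reference_spectra.T @ abundances.
    abundances, *_ = np.linalg.lstsq(reference_spectra.T, pixels.T, rcond=None)
    return abundances.T


# Hypothetical Gaussian-shaped spectra for three overlapping labels,
# sampled in eight detection channels
channels = np.linspace(0.0, 1.0, 8)
refs = np.stack([np.exp(-((channels - c) / 0.2) ** 2) for c in (0.3, 0.5, 0.7)])

true = np.array([[1.0, 0.0, 2.0],   # pixel 1: labels A and C
                 [0.5, 1.5, 0.0]])  # pixel 2: labels A and B
measured = true @ refs              # noise-free synthetic measurements
print(np.round(unmix(measured, refs), 3))
```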

However, systems-level live microscopy requires more than just better microscopes. Detecting ca. 100 (or more) distinct molecules demands even more ingenuity, as the supply of suitable fluorophores (and our ability to introduce them into a sample) is quickly exhausted. “Barcode” labeling, in which molecules are labeled with a unique ratio of multiple fluorophores, exploits combinatorics to create hundreds or even thousands of unique fluorescent signatures; combined with suitable analysis, these can begin to enable systems-level studies124 (Fig. 2b). Combining barcode imaging with chemigenetic tools such as HaloTag, SNAP-tag, and CLIP-tag125,126 may make it possible to accomplish this with genetic specificity in living systems.
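The combinatorial advantage of barcode labeling can be seen with simple counting. Assume, ideally, that every intensity level of every fluorophore can be resolved without crosstalk. Then n fluorophores at q distinguishable levels (counting "absent" as level 0) yield q^n - 1 non-empty codes. Codes built from exactly k of n fluorophores number C(n, k). The fluorophore counts below are arbitrary examples.

```python
from math import comb


def n_level_barcodes(n_fluorophores: int, levels: int) -> int:
    """Codes when each fluorophore takes one of `levels` intensity levels
    (level 0 = absent); the all-absent code is excluded."""
    return levels ** n_fluorophores - 1


def n_k_of_n_barcodes(n_fluorophores: int, k: int) -> int:
    """Codes built from exactly k of n fluorophores, each present or absent."""
    return comb(n_fluorophores, k)


for n in (4, 6, 8):
    print(f"{n} fluorophores: {n_k_of_n_barcodes(n, 2)} pairwise codes, "
          f"{n_level_barcodes(n, 3)} codes with 3 levels each")
```

The exponential growth is what makes barcoding attractive. In practice, the usable number of codes is limited by how reliably the unmixing step separates neighboring intensity ratios.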

The use of fluorescent labels, however, comes with important considerations, as they may perturb, often in pernicious ways, native biological processes127,128,129. Data interpretation can be skewed by artifacts arising from fluorophore-fluorophore interactions, their susceptibility to the local microenvironment, and phototoxicity, among many other effects51,127,130,131. Consequently, it becomes essential to design experiments meticulously, including the use of proper controls, and to evaluate the imaging data with a critical eye. Effective checks include assessing whether the labeled samples maintain normal morphology and behavior, and whether consistent results are obtained by using different tags. In certain scenarios, the intrinsic optical characteristics of biological molecules can be leveraged for label-free imaging26,29. Quantitative phase imaging132,133, stimulated Raman microscopy134,135,136 (Fig. 2c), as well as second harmonic generation and other orthogonal imaging techniques25,26,27,28 can all be brought in to supplement and complement multiplexed fluorescence microscopy.

In short, the technologies needed to image anything, and perhaps even “everything”, may be within reach sooner than we think. However, crossing the finish line requires that chemical tool developers, optical engineers, and biologists work in even greater synchrony toward common goals. Moreover, the size and complexity of such multidimensional data will surely overwhelm our current capacity to handle and analyze them. The dimensionality reduction and analysis techniques already developed for bulk genomic and proteomic studies must be adapted to preserve the spatiotemporal resolution that only imaging can provide.

The combined capabilities to image at anytime and study anything will undoubtedly accelerate groundbreaking advancements in biological research. However, they will remain limited in their usefulness unless we overcome another significant obstacle—the ability to image anywhere.

Anywhere

While expanded and multiplexed molecular labeling technologies and “smart” microscopes will be critical to the future of imaging science, there remains the considerable challenge of applying these technologies anywhere within a complex biological system. Many critical processes of life take place only within a living organism, under physiological conditions that cannot be replicated by single-cell models or even by tissue-mimetic systems such as organoids137,138,139. To image such processes faithfully, illumination light must be delivered to, and signals of interest detected from, deep within an organism. Achieving sufficient penetration depth often requires invasive procedures, which can threaten the wellbeing of the specimen. These compounding difficulties can quickly become insurmountable with current technologies, preventing biologists from visualizing processes in their most relevant physiological contexts.

Following biological processes at will means that an imaging system must contend with the optical properties of the specimen while maximizing imaging depth and field of view (FOV). What motivates us to image anywhere within a biological system is the desire to explore its vast complexity in context. Yet this very complexity distorts our view and can mislead our interpretation. When imaging through heterogeneous tissue, light is inevitably scattered, absorbed, or aberrated140. In recent years, correcting optical aberrations has become reasonably achievable through adaptive optics (AO). By measuring the distortion of the optical wavefront propagating into and out of a specimen, a deformable mirror or other active optic can apply the inverse distortion and restore image quality. The technology has been successfully demonstrated38,39,40,41 (Fig. 3a), yet remains largely inaccessible to the broader biological community. Before AO can be implemented universally, more user-friendly, “one-click” interfaces must be developed. Contrary to common assumption, AO can correct only aberrations; it cannot correct image degradation due to scattered or absorbed light. Unless a sample is truly transparent, increasing amounts of excitation and emission light will be scattered or absorbed with increasing imaging depth. This places a fundamental limit on where we can observe dynamic processes within a complex sample. Working with optically transparent samples, such as chemically cleared specimens141,142, Danio rerio, or Caenorhabditis elegans, can mitigate this issue, even for specimens as large as a human embryo143. Ultimately, however, the holy grail is to visualize live specimens with sufficient resolution at any depth. Even commonly used intravital imaging techniques, such as multiphoton microscopy144,145, are limited to depths of a few hundred microns.
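The adaptive optics principle described above, measuring the wavefront error and applying its inverse, can be illustrated numerically. This sketch makes several assumptions:

- the aberration is a hypothetical combination of defocus and astigmatism;
- the wavefront measurement has a 10% gain error plus random sensor noise;
- the deformable mirror is ideal.

Image quality is summarized with the Maréchal approximation to the Strehl ratio. That approximation is accurate only for small residual errors, so the "before" value is a rough indication. Scattering is deliberately not modeled, consistent with the point that AO corrects aberrations only.

```python
import numpy as np

# Unit-disk pupil sampled on an n x n grid
n = 64
y, x = np.mgrid[-1:1:n * 1j, -1:1:n * 1j]
r2 = x**2 + y**2
pupil = r2 <= 1.0


def rms(phase: np.ndarray) -> float:
    """Piston-removed RMS wavefront error (radians) inside the pupil."""
    p = phase[pupil]
    return float(np.sqrt(np.mean((p - p.mean()) ** 2)))


def strehl(phase: np.ndarray) -> float:
    """Marechal approximation exp(-sigma^2); accurate only for small errors."""
    return float(np.exp(-rms(phase) ** 2))


# Hypothetical specimen-induced aberration (radians): defocus + astigmatism
aberration = 1.5 * (2 * r2 - 1) + 1.0 * (x**2 - y**2)

# Imperfect wavefront measurement: 10% gain error plus random sensor noise
rng = np.random.default_rng(0)
measured = 0.9 * aberration + rng.normal(0.0, 0.05, aberration.shape)

# An ideal deformable mirror adds the inverse of the measured wavefront
corrected = aberration - measured

print(f"RMS error before: {rms(aberration):.2f} rad, after: {rms(corrected):.2f} rad")
print(f"Strehl before: {strehl(aberration):.2f}, after: {strehl(corrected):.2f}")
```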
Imaging in the second near-infrared (NIR-II) window (1000–1700 nm) shows promise for deeper tissue penetration (millimeter range) owing to reduced light absorption and scattering and lower autofluorescence. However, its widespread adoption hinges on multiple technical advances, including better fluorophores and suitable detectors146,147,148. Additionally, signals degraded by optical aberrations in deep-tissue imaging can now be partially recovered computationally. Machine learning-based approaches31,32 can accomplish this if appropriate training data are provided, although the validation and reproducibility of such models remain a challenge. Even so, no single solution analogous to AO exists for reducing the deleterious effects of light scattering on an image; such a development would fundamentally reshape how live microscopy is performed.
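The depth limit imposed by scattering can be illustrated with the Beer–Lambert law for ballistic (unscattered) photons. Their fraction decays as exp(-z/l_s) with depth z and scattering mean free path l_s. The mean free paths below are hypothetical placeholders. They show only how a longer mean free path, as expected at longer wavelengths such as those of the NIR-II window, stretches the usable depth. Absorption and any useful contribution from scattered light are ignored.

```python
import math


def ballistic_fraction(depth_um: float, mean_free_path_um: float) -> float:
    """Fraction of photons reaching depth_um unscattered: exp(-z / l_s)."""
    return math.exp(-depth_um / mean_free_path_um)


def depth_for_fraction(fraction: float, mean_free_path_um: float) -> float:
    """Depth at which the ballistic fraction has fallen to `fraction`."""
    return -mean_free_path_um * math.log(fraction)


# Hypothetical scattering mean free paths (um), for illustration only
for label, l_s in (("shorter wavelength", 100.0), ("longer wavelength", 300.0)):
    z = depth_for_fraction(0.01, l_s)
    print(f"{label}: 1% of light is still ballistic at ~{z:.0f} um")
```

Because the decay is exponential, the reachable depth for a given signal fraction scales linearly with l_s, which is why reducing scattering is so valuable for deep imaging.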

a Comparison of different corrections, including the use of adaptive optics, on images of a live human stem cell-derived organoid expressing dynamin and clathrin obtained using a lattice light sheet microscope. b The top image shows a schematic of a MiniScope equipped with a GRIN lens mounted on a mouse’s head. The bottom image shows a representative fluorescent image of medium spiny neurons labeled with GCaMP6s. The traces indicate calcium transients from ROIs 1–9. Scale bar = 100 µm. c Projected image of the entire volume of a female Drosophila obtained using a Mesolens. Scale bar = 1 mm. d Benzyl alcohol/benzyl benzoate (BABB)-cleared Xenopus tropicalis tadpole stained for Atp1a1 (Alexa Fluor 594, orange) and nuclei (DAPI, grayscale). The sample was first imaged on a mesoSPIM light sheet microscope, and then using a Schmidt objective. The large Schmidt FOV allows imaging of both the entire head (~800 μm across) and individual developing photoreceptors in the eye. Scale bar = 500 µm, 100 µm, 50 µm, and 10 µm, respectively, for images going left to right. e Photoacoustic images of a 3-D reconstructed maximum amplitude projection and corresponding color-encoded depth-resolved image of a volunteer’s right hand. The blood vessel network can be clearly visualized. Scale bar = 3 cm. The images in (a–e) are reproduced with permission from refs. 38,154,157,158,178, respectively.

Further compounding the challenges posed by the sample, the working distance of the objective lens places an unassailable limit on imaging depth. Working distance generally decreases as numerical aperture (NA) increases, forcing a compromise between depth and resolution. One way to sidestep this limit is to eliminate it altogether. Rather than trying in vain to collect photons beyond the working distance, a gradient-index (GRIN) lens149 can be implanted into a specimen to relay photons from deeper within the sample back to the focal plane of the objective. Likewise, a GRIN lens can be affixed to an optical fiber to create an endomicroscope150,151,152,153,154 (Fig. 3b). This allows researchers to image effectively any location they can reach with a small, flexible probe, which is especially powerful for accessing narrow canals. However, imaging at any location is hardly equivalent to imaging anywhere. The limited FOV of a GRIN lens immediately confines the biologist to a preselected, restricted region, blinding the observer to the broader, interrelated biological landscape. One additional, and rarely appreciated, complication is that such highly invasive procedures could have unintended, detrimental, and unpredictable biological consequences for the unfortunate specimen. A localized inflammatory response triggered by insertion of the GRIN lens could be misinterpreted as immune involvement in the very biological event being observed155. Worse yet, such reactions may trigger other, unrelated secondary events that misguide data interpretation.

Drawing conclusions from data lacking context is inherently prone to gross misinterpretation. In microscopy, context is in many ways closely linked to FOV. Unfortunately, FOV and resolution are fundamentally at odds, forcing microscopists to compromise between breadth and detail. FOV limitations can be readily circumvented by image tiling, a commonly available feature, but this approach reduces imaging speed and introduces stitching artifacts. A true solution requires a complete reimagining of objective lens design, as exemplified by the Mesolens156. Combining a large (6 mm) FOV with a comparatively high NA (0.5) and a long working distance (3 mm), the Mesolens can capture an entire adult Drosophila with subcellular resolution157 (Fig. 3c); related large-FOV designs, such as the two-photon random access mesoscope (2p-RAM), are commercially available. Another recent solution is the Schmidt objective158 (Fig. 3d), which, inspired by the Schmidt telescope, replaces lenses with a spherical mirror and a refractive correction plate. This radical redesign endows the objective with a highly desirable combination of properties: high NA (0.69–1.08), large FOV (1.1–1.7 mm), and long working distance (11 mm), along with compatibility with a wide range of sample immersion media. However, FOV is not a strictly two-dimensional concept. Collecting a canonical “z stack” is akin to 2D tiling for an increased FOV: multiplicatively slower, inversely related to resolution, and increasingly prone to photodamage and motion artifacts. A single-shot approach to collecting an entire 3D volume alleviates these burdens. This has been realized at small length scales with multifocal microscopes159,160 and at large length scales with light field microscopes161,162,163. Recently, light field microscopy was paired with a mesoscopic objective lens to achieve lateral and axial FOVs of 4 mm and 0.2 mm, respectively.
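The tension between FOV and resolution can be quantified by counting the resolvable elements across the field. This sketch assumes the Abbe lateral limit d = λ/(2NA), Nyquist sampling at d/2, and a hypothetical emission wavelength of 0.5 µm. It applies these to the Mesolens FOV and NA quoted above. The result is an idealized pixel count, not a specification of any real instrument.

```python
def abbe_lateral_resolution_um(wavelength_um: float, na: float) -> float:
    """Abbe lateral resolution limit d = lambda / (2 NA)."""
    return wavelength_um / (2.0 * na)


def nyquist_pixels_across(fov_um: float, wavelength_um: float, na: float) -> int:
    """Pixels needed across the FOV to sample at half the resolution limit."""
    d = abbe_lateral_resolution_um(wavelength_um, na)
    return round(fov_um / (d / 2.0))


wavelength = 0.5  # um, hypothetical emission wavelength
# FOV (6 mm) and NA (0.5) as quoted in the text for the Mesolens
px = nyquist_pixels_across(6000.0, wavelength, 0.5)
print(f"{px} pixels across, {px**2 / 1e6:.0f} megapixels per plane")
```

Because the count scales with (FOV/d)², doubling the FOV or halving d quadruples the number of pixels per plane. Extending the field into 3D multiplies it again by the number of planes.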
Thanks to this single-snapshot 3D capability, neural activity in the mouse cortex could be imaged at an astounding 18 volumes per second over this large FOV, a markedly impressive breakthrough in rapid 3D imaging164. An immediate challenge, however, is the sheer scale of large-FOV, volumetric, live microscopy data. The logistical headaches of data storage, transfer, and analysis are considerable165,166,167,168,169. This burden can be somewhat ameliorated by lossless compression methods170, which reduce data size, and by adaptive microscopy techniques, which avoid collecting non-informative data. However, the challenges of big data extend beyond storage. Data processing and analysis require access to powerful computational resources, appropriate software, and technical expertise to derive meaningful insights from the data32,165,169,171,172. The adoption of automated analysis workflows is imperative, because manual analysis at such scales is impractically laborious and susceptible to human bias and error; it is equally crucial to properly train the machine learning models used for this purpose. Given these substantial challenges, biologists no longer have the luxury of treating data handling and processing as an afterthought, and must ensure that all essential resources are in place before commencing their experiments.
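Simple arithmetic shows how quickly volumetric data accumulate, and a round trip through a general-purpose codec shows what "lossless" guarantees. The frame size, plane count, and volume rate below are hypothetical. Python’s standard `zlib` module stands in for the specialized compression methods cited above. Its ratio on a synthetic noisy frame says little about performance on real microscopy data.

```python
import zlib

import numpy as np


def data_rate_gb_per_s(nx: int, ny: int, nz: int, volumes_per_s: float,
                       bytes_per_voxel: int = 2) -> float:
    """Raw (uncompressed) data rate in GB/s for volumetric time-lapse imaging."""
    return nx * ny * nz * bytes_per_voxel * volumes_per_s / 1e9


# Hypothetical acquisition: 2048 x 2048 pixels, 100 planes, 16-bit, 1 volume/s
rate = data_rate_gb_per_s(2048, 2048, 100, volumes_per_s=1.0)
print(f"{rate:.2f} GB/s, about {rate * 3600 / 1000:.1f} TB per hour")

# Lossless round trip on a synthetic, dim 16-bit frame (Poisson background)
rng = np.random.default_rng(1)
frame = rng.poisson(5, size=(512, 512)).astype(np.uint16)
raw = frame.tobytes()
packed = zlib.compress(raw, level=6)
restored = np.frombuffer(zlib.decompress(packed), dtype=np.uint16).reshape(frame.shape)
assert np.array_equal(frame, restored)  # lossless means bit-identical
print(f"compression ratio on this synthetic frame: {len(raw) / len(packed):.1f}x")
```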

Equally worrisome are the challenges of applying large FOV volumetric imaging deep within a living organism. At these substantial depths, the spatial context of the surrounding tissue cannot be ignored, yet our current solutions for large FOV imaging still necessitate the invasive procedures previously described. Imaging the surrounding, native environment is effectively pointless if this very tissue must be destroyed simply for access. We can draw inspiration from clinical imaging to achieve this.

One clinically accepted, ethical way to peek at an unborn fetus is ultrasound imaging173. It is the method of choice precisely because ultrasound is not encumbered by the photodamage and invasiveness that limit conventional optical imaging. Furthermore, it routinely achieves centimeter-scale imaging depths. Conversely, ultrasound lacks the molecular specificity and resolution that light microscopy routinely provides. The two modalities, however, can complement each other synergistically if properly integrated. Molecules that absorb light at specific wavelengths undergo transient thermoelastic expansion, and the resulting ultrasound waves can be detected; this forms the basis of photoacoustic imaging174,175,176,177. Many molecules generate signature photoacoustic signals and can thus act as intrinsic contrast agents, enabling label-free imaging. A popular example is hemoglobin, whose oxygenated and deoxygenated states produce distinct, wavelength-specific photoacoustic signals; it is widely used for imaging tissue vascularization and quantifying tissue oxygen consumption177,178,179,180 (Fig. 3e). When molecules of interest do not provide sufficient photoacoustic contrast, specificity can be introduced into the sample through exogenous contrast agents such as gold nanoparticles and fluorescent dyes181. Gas vesicles182 offer another means of providing specificity and multiplexing for photoacoustic imaging, analogous to fluorescent proteins. Initially identified in aquatic microbes as regulators of cellular buoyancy, these gas-filled protein nanostructures produce strong ultrasound contrast and can be engineered to collapse at different acoustic pressures. They have even been used as genetically encoded reporters183.
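Two-wavelength photoacoustic oximetry, as used for the hemoglobin measurements mentioned above, is a small linear inverse problem. Signals at two wavelengths are modeled as mixtures of oxy- and deoxyhemoglobin absorption. Solving for the two concentrations gives the oxygen saturation sO2. The absorption coefficients below are placeholders, not tabulated hemoglobin values. The sketch also assumes that the signal is proportional to absorption, with equal light fluence at both wavelengths, an assumption that rarely holds in deep tissue.

```python
import numpy as np

# Placeholder absorption coefficients, columns = [HbO2, Hb]
# (illustrative values only; use tabulated hemoglobin spectra in practice)
E = np.array([[1.0, 3.0],   # wavelength 1: deoxyhemoglobin absorbs more
              [2.5, 1.5]])  # wavelength 2: oxyhemoglobin absorbs more


def oxygen_saturation(pa_signal: np.ndarray) -> float:
    """Estimate sO2 = C_HbO2 / (C_HbO2 + C_Hb) from signals at two wavelengths,
    assuming signal is proportional to absorption with equal fluence."""
    c_hbo2, c_hb = np.linalg.solve(E, pa_signal)
    return float(c_hbo2 / (c_hbo2 + c_hb))


# Synthetic voxel with 70% oxygen saturation
c_true = np.array([0.7, 0.3])
signal = E @ c_true
print(f"sO2 = {oxygen_saturation(signal):.2f}")
```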
Various other techniques176,184,185, such as magnetic resonance imaging186,187,188, computed tomography189,190, positron emission tomography191,192, optical coherence tomography (OCT)193,194, bioluminescence imaging195,196,197 and hyperspectral imaging117,118,119, have also enabled significant progress in imaging biological processes in their native tissue environment. The natural next step is to consider the entire environment surrounding the model organism itself.

Beyond the optical challenges of imaging deep into a complex specimen lie the difficulties of imaging samples that are themselves moving. Specimens often grow or move out of the FOV during a prolonged imaging experiment. Recent years have seen integrated software pipelines capable of monitoring and adjusting the sample position, which are particularly useful for developing embryos69. However, the future of imaging must include approaches to mitigate the movement of even more complex specimens. Intravital imaging198,199 is exceptionally powerful for imaging within living vertebrates such as mice or rats. By opening a window to the brain, kidneys, or other organs, one can visualize dynamic processes live within the animal200. However, the animal is often restrained and/or heavily sedated, which may alter the biological processes being studied. Systems capable of performing intravital imaging on animals free to walk, eat, and perform other normal functions are therefore critical201,202,203,204,205,206; building them, however, requires an overhaul of hardware, software, and wet lab tools. More fundamentally, this necessitates a shift in thinking from bringing the sample to the microscope to adapting the microscope to the sample.
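
In its simplest form, the sample-tracking feedback described above measures how far the specimen has drifted from the center of the field of view and converts that offset into a stage correction. The Python sketch below is a hypothetical illustration, not the pipeline used in ref. 69: it assumes a single bright specimen on a dark background, uses the intensity-weighted centroid as the specimen position, and introduces its own illustrative parameters (`pixel_size_um`, `deadband_px`).

```python
def intensity_centroid(image, background=0.0):
    """Intensity-weighted centroid (row, col) of a 2D image given as nested lists.

    Pixels at or below `background` (e.g. camera offset) are ignored so that a
    uniform offset does not pull the estimate toward the frame center.
    """
    total = row_sum = col_sum = 0.0
    for r, row in enumerate(image):
        for c, value in enumerate(row):
            weight = value - background
            if weight > 0:
                total += weight
                row_sum += weight * r
                col_sum += weight * c
    if total == 0:
        raise ValueError("specimen not found in frame")
    return row_sum / total, col_sum / total


def centering_offset_um(image, pixel_size_um, background=0.0, deadband_px=1.0):
    """Offset (dy, dx) of the specimen from the FOV center, in micrometers.

    A stage controller would move by this offset (or its negative, depending on
    the assumed stage/camera orientation) to re-center the specimen. Offsets
    smaller than `deadband_px` in both axes return (0.0, 0.0) so that noise
    does not trigger constant small stage moves.
    """
    n_rows, n_cols = len(image), len(image[0])
    cy, cx = intensity_centroid(image, background)
    dy = cy - (n_rows - 1) / 2
    dx = cx - (n_cols - 1) / 2
    if abs(dy) < deadband_px and abs(dx) < deadband_px:
        return 0.0, 0.0
    return dy * pixel_size_um, dx * pixel_size_um
```

Centroid tracking is chosen here only for its simplicity; it breaks down when several objects or strong background structure share the frame, where registration-based approaches such as phase correlation are more common.
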

Such a profound change in perspective makes the next logical step evident: extending the notion of imaging anywhere to the environment in which microscopy is performed. Quantitative, research-grade microscopy is almost exclusively performed in laboratory spaces, requiring the specimen to be brought from the field to the microscope. This inherently, and often severely, restricts the problems that microscopy can tackle. This is especially true in infectious disease research. In many pressing cases where microscopy could make an immediate impact on dire health crises, limited windows of sample viability and biosafety concerns often prevent specimens from being transported to and examined in a laboratory. It is therefore imperative that the future of imaging embrace the concept of moving the microscope to the biology, so that the microscope is no longer seen as a static instrument but as a flexible tool adaptable to the diverse conditions of the field. For instance, the LoaScope207, which has been used to rapidly detect Loa loa microfilariae in peripheral blood, has successfully guided the treatment strategy for thousands of infected patients in Central Africa208. Many microscopy developers strive for the highest spatial resolution, deepest penetration, or fastest imaging speed; while this is laudable, human health can often be better served by adapting current technologies to function readily and robustly in challenging point-of-care environments and other settings that do not lend themselves easily to microscopy. Some field microscopes, such as the Em1 portable microscope, are commercially available, although higher costs and reduced configurability may limit their applicability for some users. Open-source alternatives, such as the Octopi microscope209, offer significant advantages in this regard, as they can be more readily adapted to particular needs and are often more budget-friendly.

Outlook

Ironically, the ability to image multiple biological processes in action within large volumes of biological samples will raise more questions than it answers, as data “overload” grows more severe with each advance in imaging technology. It will inevitably exceed the human capacity to process and comprehend the information. It may even overwhelm artificial intelligence, whose capacity is limited by how the system is trained. New biological theories that could guide such effective and comprehensive training have yet to be fully developed, so the confusion will likely worsen before it improves.
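
To make the scale of this data “overload” concrete, the raw acquisition rate is simply the product of pixels per frame, bytes per pixel, frames per second, and number of channels. The sketch below works this through for a hypothetical configuration (one 2048 × 2048 camera, 16-bit pixels, 100 frames per second, two channels); these numbers and the helper names are illustrative assumptions, not specifications of any particular microscope.

```python
def raw_data_rate_bytes_per_s(width_px, height_px, bit_depth, frames_per_s, channels=1):
    """Uncompressed acquisition rate in bytes per second."""
    bytes_per_pixel = -(-bit_depth // 8)  # round up to whole bytes (e.g. 12-bit -> 2)
    return width_px * height_px * bytes_per_pixel * frames_per_s * channels


def terabytes_per_hour(rate_bytes_per_s):
    """Convert a byte rate to decimal terabytes (1e12 bytes) per hour."""
    return rate_bytes_per_s * 3600 / 1e12


# Hypothetical example: 2048 x 2048 pixels, 16-bit, 100 frames/s, 2 channels
rate = raw_data_rate_bytes_per_s(2048, 2048, 16, 100, channels=2)
print(f"{rate / 1e9:.2f} GB/s, {terabytes_per_hour(rate):.2f} TB/h")
```

This prints `1.68 GB/s, 6.04 TB/h`, so a single day of continuous acquisition at these assumed settings would exceed 140 TB of uncompressed data.
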

As much as we have advocated the importance of testable hypotheses in guiding microscopy-based experimental design53, in this case a hypothesis may be a liability that limits one’s ability to see beyond what it dictates. This rigidity may blind the observer to new discoveries hidden in the complex interplay of biological processes, the raison d’être of multi-dimensional imaging. Conversely, the complexity of modern microscopy datasets, while offering a vast landscape for exploration, can quickly devolve into a labyrinth of spurious relationships and biased postulations unless these are challenged by subsequent experiments. More importantly, such large-scale exploration may not be accomplished effectively by a single group. This argues for community-driven data mining, in which the data can be viewed from multiple perspectives by a wide range of experts. Such efforts are well under way for volumetric electron microscopy but remain in their infancy for optical imaging.

The future of our ability to visualize the processes of life in living specimens is both exciting and challenging. As we push the boundaries of live imaging, it is clear that current microscopy technologies cannot provide a complete picture on their own. Solutions to these obstacles will necessarily come from the collective, interdisciplinary efforts52 of microscope builders, software specialists, and probe developers to create new transformative technologies (Fig. 4). However, often forgotten or ignored in the development process is the end user: the bioscientists who rely on these tools to navigate the challenges inherent to biological research. Failing to keep their needs at the forefront risks alienating the target audience, yielding a poor return on the expenditure and effort invested in developing the technologies. To truly revolutionize live cell imaging, the chasm between tool creation and widespread adoption must be bridged. Involving end users in the development process, and incorporating their input and feedback, is indispensable.

A culmination of various tool developments, integrated with easy and equitable access to these technologies, will enable imaging anytime, anything and anywhere, thereby powering biological breakthroughs.

In this paper, we consider the ability to perform imaging experiments “anywhere” to also include wider adoption of microscopy technology, especially in regions where access to technology and infrastructure is a challenge. The workflow of microscopy technology development cannot be considered complete upon publication of the technology; it must be extended to include the strategies needed to bring newly developed tools into the hands of the scientists who need them. This is an under-appreciated challenge of technology development, often overlooked even by the funding organizations that support development of the very technology in question. Turning a blind eye to dissemination is a missed opportunity for the scientific community to reap the full transformative power of the technology, and an even bigger loss for funders seeking to maximize the impact-per-dollar of their investment. This clear demarcation of responsibility, in which technology developers do not see themselves as champions of their own inventions, is often tacitly endorsed by funders. In fact, many funders do not include dissemination strategies among the success metrics of technology development. More importantly, ignoring dissemination is antithetical to the very spirit of technology development, for it diverts the developer’s attention and effort away from taking the product past the finish line, where the technology becomes widely usable. This has precipitated a situation in which academic research is saddled with potentially transformative technologies that rarely progress beyond the proof-of-concept stage. The rare instances of successful and widespread commercialization, such as the lattice light sheet74, multiview light sheet69, swept confocally-aligned planar excitation210, and chip-based microscopes211, occurred precisely because of the developers’ active efforts to disseminate these technologies.
Therefore, all stakeholders, including researchers, funders, and technology developers, must foster an ecosystem of innovation that supports the entire lifecycle of technology development, from ideation to dissemination. However, commercialization is not the sole demonstration of successful dissemination. While partnering with industry to distribute new technologies is a positive step, commercialization can take several years, and the resulting products may not be affordable to a broad range of researchers. It is therefore imperative to develop, in parallel, other avenues of technology dissemination, such as open-source tool development212, training and education programs213,214, and open-access centers165. Affordable, open-source research-grade microscopes represent a rapidly advancing area, with innovations like the openFrame215 and Flamingo216 microscopes effectively lowering entry barriers and offering modular designs for convenient upgrades217. Similarly, open science initiatives for sharing research reagents, such as Addgene plasmids218 and the Janelia Fluor dye distribution program219, must be embraced, as they are necessary for the advancement of scientific knowledge and collaboration.

References

-

Cuny, A. P., Schlottmann, F. P., Ewald, J. C., Pelet, S. & Schmoller, K. M. Live cell microscopy: from image to insight. Biophys. Rev. 3, 021302 (2022).

-

Hickey, S. M. et al. Fluorescence microscopy—an outline of hardware, biological handling, and fluorophore considerations. Cells 11, 35 (2022).

-

Huang, Q. et al. The frontier of live tissue imaging across space and time. Cell Stem Cell 28, 603–622 (2021).

-

Nienhaus, K. & Nienhaus, G. U. Genetically encodable fluorescent protein markers in advanced optical imaging. Methods Appl. Fluoresc. 10, 042002 (2022).

-

Specht, E. A., Braselmann, E. & Palmer, A. E. A critical and comparative review of fluorescent tools for live-cell imaging. Annu. Rev. Physiol. 79, 93–117 (2017).

-

Rodriguez, E. A. et al. The growing and glowing toolbox of fluorescent and photoactive proteins. Trends Biochem. Sci. 42, 111–129 (2017).

-

Reynaud, E. G., Peychl, J., Huisken, J. & Tomancak, P. Guide to light-sheet microscopy for adventurous biologists. Nat. Methods 12, 30–34 (2015).

-

Girkin, J. M. & Carvalho, M. T. The light-sheet microscopy revolution. J. Opt. 20, 053002 (2018).

-

Wan, Y., McDole, K. & Keller, P. J. Light-sheet microscopy and its potential for understanding developmental processes. Annu. Rev. Cell Dev. Biol. 35, 655–681 (2019).

-

Stelzer, E. H. K. et al. Light sheet fluorescence microscopy. Nat. Rev. Methods Prim. 1, 1–25 (2021).

-

Hobson, C. M. et al. Practical considerations for quantitative light sheet fluorescence microscopy. Nat. Methods 19, 1538–1549 (2022).

-

Daetwyler, S. & Fiolka, R. P. Light-sheets and smart microscopy, an exciting future is dawning. Commun. Biol. 6, 1–11 (2023).

-

Mishin, A. S. & Lukyanov, K. A. Live-cell super-resolution fluorescence microscopy. Biochem. Mosc. 84, 19–31 (2019).

-

Schermelleh, L. et al. Super-resolution microscopy demystified. Nat. Cell Biol. 21, 72–84 (2019).

-

Diaspro, A. & Bianchini, P. Optical nanoscopy. Riv. Nuovo Cim. 43, 385–455 (2020).

-

Lelek, M. et al. Single-molecule localization microscopy. Nat. Rev. Methods Prim. 1, 1–27 (2021).

-

Hao, X. et al. Review of 4Pi fluorescence nanoscopy. Engineering 11, 146–153 (2022).

-

Vangindertael, J. et al. An introduction to optical super-resolution microscopy for the adventurous biologist. Methods Appl. Fluoresc. 6, 022003 (2018).

-

Jacquemet, G., Carisey, A. F., Hamidi, H., Henriques, R. & Leterrier, C. The cell biologist’s guide to super-resolution microscopy. J. Cell Sci. 133, 240713 (2020).

-

Baumgart, F., Arnold, A. M., Rossboth, B. K., Brameshuber, M. & Schütz, G. J. What we talk about when we talk about nanoclusters. Methods Appl. Fluoresc. 7, 013001 (2018).

-

Baddeley, D. & Bewersdorf, J. Biological insight from super-resolution microscopy: what we can learn from localization-based images. Annu. Rev. Biochem. 87, 965–989 (2018).

-

Hugelier, S., Colosi, P. L. & Lakadamyali, M. Quantitative single-molecule localization microscopy. Annu. Rev. Biophys. 52, 139–160 (2023).

-

Xiang, L., Chen, K. & Xu, K. Single molecules are your quanta: a bottom-up approach toward multidimensional super-resolution microscopy. ACS Nano 15, 12483–12496 (2021).

-

Yan, R., Wang, B. & Xu, K. Functional super-resolution microscopy of the cell. Curr. Opin. Chem. Biol. 51, 92–97 (2019).

-

Wang, S., Larina, I. V. & Larin, K. V. Label-free optical imaging in developmental biology [Invited]. Biomed. Opt. Express 11, 2017 (2020).

-

Parodi, V. et al. Nonlinear optical microscopy: from fundamentals to applications in live bioimaging. Front. Bioeng. Biotechnol. 8, 585363 (2020).

-

Borile, G., Sandrin, D., Filippi, A., Anderson, K. I. & Romanato, F. Label-free multiphoton microscopy: much more than fancy images. Int. J. Mol. Sci. 22, 2657 (2021).

-

Hilzenrat, G., Gill, E. T. & McArthur, S. L. Imaging approaches for monitoring three-dimensional cell and tissue culture systems. J. Biophotonics 15, e202100380 (2022).

-

Ghosh, B. & Agarwal, K. Viewing life without labels under optical microscopes. Commun. Biol. 6, 1–12 (2023).

-

Kaderuppan, S. S., Wong, E. W. L., Sharma, A. & Woo, W. L. Smart nanoscopy: a review of computational approaches to achieve super-resolved optical microscopy. IEEE Access. 8, 214801–214831 (2020).

-

Greener, J. G., Kandathil, S. M., Moffat, L. & Jones, D. T. A guide to machine learning for biologists. Nat. Rev. Mol. Cell Biol. 23, 40–55 (2022).

-

von Chamier, L. et al. Democratising deep learning for microscopy with ZeroCostDL4Mic. Nat. Commun. 12, 2276 (2021).

-

Moen, E. et al. Deep learning for cellular image analysis. Nat. Methods 16, 1233–1246 (2019).

-

What’s next for bioimage analysis? Nat. Methods 20, 945–946 (2023).

-

Yoon, S. et al. Deep optical imaging within complex scattering media. Nat. Rev. Phys. 2, 141–158 (2020).

-

Sahu, P. & Mazumder, N. Advances in adaptive optics–based two-photon fluorescence microscopy for brain imaging. Lasers Med. Sci. 35, 317–328 (2020).

-

Ji, N. Adaptive optical fluorescence microscopy. Nat. Methods 14, 374–380 (2017).

-

Liu, T.-L. et al. Observing the cell in its native state: Imaging subcellular dynamics in multicellular organisms. Science 360, eaaq1392 (2018).

-

Hampson, K. M. et al. Adaptive optics for high-resolution imaging. Nat. Rev. Methods Prim. 1, 1–26 (2021).

-

Zhang, Q. et al. Adaptive optics for optical microscopy [Invited]. Biomed. Opt. Express 14, 1732–1756 (2023).

-

Booth, M. J. Adaptive optical microscopy: the ongoing quest for a perfect image. Light Sci. Appl. 3, e165 (2014).

-

Madhusoodanan, J. Smart microscopes spot fleeting biology. Nature 614, 378–380 (2023).

-

Scherf, N. & Huisken, J. The smart and gentle microscope. Nat. Biotechnol. 33, 815–818 (2015).

-

Strack, R. Smarter microscopes. Nat. Methods 17, 23 (2020).

-

Pinkard, H. & Waller, L. Microscopes are coming for your job. Nat. Methods 19, 1175–1176 (2022).

-

Carpenter, A. E., Cimini, B. A. & Eliceiri, K. W. Smart microscopes of the future. Nat. Methods 20, 962–964 (2023).

-

Wu, Y. & Shroff, H. Multiscale fluorescence imaging of living samples. Histochem. Cell Biol. 158, 301–323 (2022).

-

Schneckenburger, H. & Richter, V. Challenges in 3D live cell imaging. Photonics 8, 275 (2021).

-

Bon, P. & Cognet, L. On some current challenges in high-resolution optical bioimaging. ACS Photonics 9, 2538–2546 (2022).

-

Tosheva, K. L., Yuan, Y., Pereira, P. M., Culley, S. & Henriques, R. Between life and death: strategies to reduce phototoxicity in super-resolution microscopy. J. Phys. Appl. Phys. 53, 163001 (2020).

-

Icha, J., Weber, M., Waters, J. C. & Norden, C. Phototoxicity in live fluorescence microscopy, and how to avoid it. BioEssays 39, 1700003 (2017).

-

Weber, M. & Huisken, J. Multidisciplinarity is critical to unlock the full potential of modern light microscopy. Front. Cell Dev. Biol. 9, 739015 (2021).

-

Wait, E. C., Reiche, M. A. & Chew, T.-L. Hypothesis-driven quantitative fluorescence microscopy—the importance of reverse-thinking in experimental design. J. Cell Sci. 133, jcs250027 (2020).

-

Ahrens, M. B., Orger, M. B., Robson, D. N., Li, J. M. & Keller, P. J. Whole-brain functional imaging at cellular resolution using light-sheet microscopy. Nat. Methods 10, 413–420 (2013).

-

Keller, P. J. & Ahrens, M. B. Visualizing whole-brain activity and development at the single-cell level using light-sheet microscopy. Neuron 85, 462–483 (2015).

-

Zhang, Y. & Looger, L. L. Fast and sensitive GCaMP calcium indicators for neuronal imaging. J. Physiol. (2023) https://doi.org/10.1113/JP283832.

-

Bando, Y., Grimm, C., Cornejo, V. H. & Yuste, R. Genetic voltage indicators. BMC Biol. 17, 71 (2019).

-

Strack, R. Organic dyes for live imaging. Nat. Methods 18, 30 (2021).

-

Weigert, M. et al. Content-aware image restoration: pushing the limits of fluorescence microscopy. Nat. Methods 15, 1090–1097 (2018).

-

Laine, R. F., Jacquemet, G. & Krull, A. Imaging in focus: an introduction to denoising bioimages in the era of deep learning. Int. J. Biochem. Cell Biol. 140, 106077 (2021).

-

Chen, J. et al. Three-dimensional residual channel attention networks denoise and sharpen fluorescence microscopy image volumes. Nat. Methods 18, 678–687 (2021).

-

Krull, A., Buchholz, T.-O. & Jug, F. Noise2Void – Learning denoising from single noisy images. In Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 2129–2137 (IEEE, 2019). https://doi.org/10.1109/CVPR.2019.00223.

-

Krull, A., Vičar, T., Prakash, M., Lalit, M. & Jug, F. Probabilistic noise2Void: unsupervised content-aware denoising. Front. Comput. Sci. 2, 00005 (2020).

-

Belthangady, C. & Royer, L. A. Applications, promises, and pitfalls of deep learning for fluorescence image reconstruction. Nat. Methods 16, 1215–1225 (2019).

-

Tischer, C., Hilsenstein, V., Hanson, K. & Pepperkok, R. Adaptive fluorescence microscopy by online feedback image analysis. In Methods in Cell Biology (eds. Waters, J. C. & Wittman, T.) vol. 123 489–503 (Academic Press, 2014).

-

Vicidomini, G., Bianchini, P. & Diaspro, A. STED super-resolved microscopy. Nat. Methods 15, 173–182 (2018).

-

Schloetel, J.-G., Heine, J., Cowman, A. F. & Pasternak, M. Guided STED nanoscopy enables super-resolution imaging of blood stage malaria parasites. Sci. Rep. 9, 4674 (2019).

-

Royer, L. A. et al. Adaptive light-sheet microscopy for long-term, high-resolution imaging in living organisms. Nat. Biotechnol. 34, 1267–1278 (2016).

-

McDole, K. et al. In toto imaging and reconstruction of post-implantation mouse development at the single-cell level. Cell 175, 859–876.e33 (2018).

-

Mahecic, D. et al. Event-driven acquisition for content-enriched microscopy. Nat. Methods 19, 1262–1267 (2022).

-

Almada, P. et al. Automating multimodal microscopy with NanoJ-Fluidics. Nat. Commun. 10, 1223 (2019).

-

André, O., Kumra Ahnlide, J., Norlin, N., Swaminathan, V. & Nordenfelt, P. Data-driven microscopy allows for automated context-specific acquisition of high-fidelity image data. Cell Rep. Methods 3, 100419 (2023).

-

Alvelid, J., Damenti, M., Sgattoni, C. & Testa, I. Event-triggered STED imaging. Nat. Methods 19, 1268–1275 (2022).

-

Shi, Y. et al. Smart lattice light sheet microscopy for imaging rare and complex cellular events. 2023.03.07.531517 Preprint at https://doi.org/10.1101/2023.03.07.531517 (2023).

-

Hobson, C. M. & Aaron, J. S. Combining multiple fluorescence imaging techniques in biology: when one microscope is not enough. Mol. Biol. Cell 33, tp1 (2022).

-

Sankaran, J. et al. Simultaneous spatiotemporal super-resolution and multi-parametric fluorescence microscopy. Nat. Commun. 12, 1748 (2021).

-

Ando, T. et al. The 2018 correlative microscopy techniques roadmap. J. Phys. Appl. Phys. 51, 443001 (2018).

-

Hauser, M. et al. Correlative super-resolution microscopy: new dimensions and new opportunities. Chem. Rev. 117, 7428–7456 (2017).

-

Hanahan, D. Hallmarks of cancer: new dimensions. Cancer Discov. 12, 31–46 (2022).

-

Hanselmann, R. G. & Welter, C. Origin of cancer: cell work is the key to understanding cancer initiation and progression. Front. Cell Dev. Biol. 10, 787995 (2022).

-

Wishart, D. Metabolomics and the multi-omics view of cancer. Metabolites 12, 154 (2022).

-

Roberts, B. et al. Systematic gene tagging using CRISPR/Cas9 in human stem cells to illuminate cell organization. Mol. Biol. Cell 28, 2854–2874 (2017).

-

Zhong, H. et al. High-fidelity, efficient, and reversible labeling of endogenous proteins using CRISPR-based designer exon insertion. eLife 10, e64911 (2021).

-

Sharma, A. et al. CRISPR/Cas9-mediated fluorescent tagging of endogenous proteins in human pluripotent stem cells. Curr. Protoc. Hum. Genet. 96, 21.11.1–21.11.20 (2018).

-

Deng, W., Shi, X., Tjian, R., Lionnet, T. & Singer, R. H. CASFISH: CRISPR/Cas9-mediated in situ labeling of genomic loci in fixed cells. Proc. Natl Acad. Sci. 112, 11870–11875 (2015).

-

Chen, B., Zou, W., Xu, H., Liang, Y. & Huang, B. Efficient labeling and imaging of protein-coding genes in living cells using CRISPR-Tag. Nat. Commun. 9, 5065 (2018).

-

Ma, H. et al. Multicolor CRISPR labeling of chromosomal loci in human cells. Proc. Natl Acad. Sci. 112, 3002–3007 (2015).

-

Ye, H., Rong, Z. & Lin, Y. Live cell imaging of genomic loci using dCas9-SunTag system and a bright fluorescent protein. Protein Cell 8, 853–855 (2017).

-

George, L., Indig, F. E., Abdelmohsen, K. & Gorospe, M. Intracellular RNA-tracking methods. Open Biol. 8, 180104 (2018).

-

Hu, Y. et al. Enhanced single RNA imaging reveals dynamic gene expression in live animals. eLife 12, e82178 (2023).

-

Li, W., Maekiniemi, A., Sato, H., Osman, C. & Singer, R. H. An improved imaging system that corrects MS2-induced RNA destabilization. Nat. Methods 19, 1558–1562 (2022).

-

Pichon, X., Robert, M.-C., Bertrand, E., Singer, R. H. & Tutucci, E. New generations of MS2 variants and MCP fusions to detect single mRNAs in living eukaryotic cells. In RNA Tagging: Methods and Protocols (ed. Heinlein, M.) vol. 2166 121–144 (Springer US, 2020).

-

Carter, K. P., Young, A. M. & Palmer, A. E. Fluorescent sensors for measuring metal ions in living systems. Chem. Rev. 114, 4564–4601 (2014).

-

Lazarou, T. S. & Buccella, D. Advances in imaging of understudied ions in signaling: a focus on magnesium. Curr. Opin. Chem. Biol. 57, 27–33 (2020).

-

Hao, Z., Zhu, R. & Chen, P. R. Genetically encoded fluorescent sensors for measuring transition and heavy metals in biological systems. Curr. Opin. Chem. Biol. 43, 87–96 (2018).

-

Torres-Ocampo, A. P. & Palmer, A. E. Genetically encoded fluorescent sensors for metals in biology. Curr. Opin. Chem. Biol. 74, 102284 (2023).

-

Xiong, M. et al. DNAzyme-mediated genetically encoded sensors for ratiometric imaging of metal ions in living cells. Angew. Chem. Int. Ed. 59, 1891–1896 (2020).

-

Bischof, H. et al. Live-cell imaging of physiologically relevant metal ions using genetically encoded FRET-based probes. Cells 8, 492 (2019).

-

Zhang, Y. et al. Fast and sensitive GCaMP calcium indicators for imaging neural populations. Nature 615, 884–891 (2023).

-

Farrants, H. et al. A modular chemigenetic calcium indicator enables in vivo functional imaging with near-infrared light. 2023.07.18.549527 Preprint at https://doi.org/10.1101/2023.07.18.549527 (2023).

-

Abdelfattah, A. S. et al. Sensitivity optimization of a rhodopsin-based fluorescent voltage indicator. Neuron 111, 1547–1563 (2023).

-

Di Costanzo, L. & Panunzi, B. Visual pH sensors: from a chemical perspective to new bioengineered materials. Molecules 26, 2952 (2021).

-

Germond, A., Fujita, H., Ichimura, T. & Watanabe, T. M. Design and development of genetically encoded fluorescent sensors to monitor intracellular chemical and physical parameters. Biophys. Rev. 8, 121–138 (2016).

-

Hande, P. E., Shelke, Y. G., Datta, A. & Gharpure, S. J. Recent advances in small molecule-based intracellular pH probes. ChemBioChem 23, e202100448 (2022).

-

Hobson, C. M., Aaron, J. S., Heddleston, J. M. & Chew, T.-L. Visualizing the invisible: advanced optical microscopy as a tool to measure biomechanical forces. Front. Cell Dev. Biol. 9, 706126 (2021).

-

Varki, A. Account for the ‘dark matter’ of biology. Nature 497, 565 (2013).

-

Harayama, T. & Riezman, H. Understanding the diversity of membrane lipid composition. Nat. Rev. Mol. Cell Biol. 19, 281–296 (2018).

-

Varki, A. Biological roles of glycans. Glycobiology 27, 3–49 (2017).

-

Möckl, L. et al. Quantitative super-resolution microscopy of the mammalian glycocalyx. Dev. Cell 50, 57–72.e6 (2019).

-

Zol-Hanlon, M. I. & Schumann, B. Open questions in chemical glycobiology. Commun. Chem. 3, 1–5 (2020).

-

Hammond, G. R. V., Ricci, M. M. C., Weckerly, C. C. & Wills, R. C. An update on genetically encoded lipid biosensors. Mol. Biol. Cell 33, tp2 (2022).

-

Warkentin, R. & Kwan, D. H. Resources and methods for engineering “designer” glycan-binding proteins. Molecules 26, 380 (2021).

-

Bumpus, T. W. & Baskin, J. M. Greasing the wheels of lipid biology with chemical tools. Trends Biochem. Sci. 43, 970–983 (2018).

-

Rigolot, V., Biot, C. & Lion, C. To view your biomolecule, click inside the cell. Angew. Chem. Int. Ed. 60, 23084–23105 (2021).

-

Cioce, A. et al. Cell-specific bioorthogonal tagging of glycoproteins. Nat. Commun. 13, 6237 (2022).

-

Suazo, K. F., Park, K.-Y. & Distefano, M. D. A not-so-ancient grease history: click chemistry and protein lipid modifications. Chem. Rev. 121, 7178–7248 (2021).

-

Rehman, A. U. & Qureshi, S. A. A review of the medical hyperspectral imaging systems and unmixing algorithms’ in biological tissues. Photodiag Photodyn. Ther. 33, 102165 (2021).

-

Hedde, P. N., Cinco, R., Malacrida, L., Kamaid, A. & Gratton, E. Phasor-based hyperspectral snapshot microscopy allows fast imaging of live, three-dimensional tissues for biomedical applications. Commun. Biol. 4, 1–11 (2021).

-

Li, Q. et al. Review of spectral imaging technology in biomedical engineering: achievements and challenges. J. Biomed. Opt. 18, 100901 (2013).

-

Datta, R., Heaster, T. M., Sharick, J. T., Gillette, A. A. & Skala, M. C. Fluorescence lifetime imaging microscopy: fundamentals and advances in instrumentation, analysis, and applications. J. Biomed. Opt. 25, 071203 (2020).

-

Bitton, A., Sambrano, J., Valentino, S. & Houston, J. P. A review of new high-throughput methods designed for fluorescence lifetime sensing from cells and tissues. Front. Phys. 9, 648553 (2021).

-

Chen, K., Li, W. & Xu, K. Super-multiplexing excitation spectral microscopy with multiple fluorescence bands. Biomed. Opt. Express 13, 6048–6060 (2022).

-

Orth, A. et al. Super-multiplexed fluorescence microscopy via photostability contrast. Biomed. Opt. Express 9, 2943–2954 (2018).

-

Valm, A. M., Oldenbourg, R. & Borisy, G. G. Multiplexed spectral Imaging of 120 different fluorescent labels. PLOS One 11, e0158495 (2016).

-

Hoelzel, C. A. & Zhang, X. Visualizing and manipulating biological processes by using HaloTag and SNAP-Tag technologies. ChemBioChem 21, 1935–1946 (2020).

-

Wilhelm, J. et al. Kinetic and structural characterization of the self-labeling protein Tags HaloTag7, SNAP-tag, and CLIP-tag. Biochemistry 60, 2560–2575 (2021).

-

Reiche, M. A. et al. When light meets biology—how the specimen affects quantitative microscopy. J. Cell Sci. 135, jcs259656 (2022).

-

Jensen, E. C. Use of fluorescent probes: their effect on cell biology and limitations. Anat. Rec. 295, 2031–2036 (2012).

-

Yin, L. et al. How does fluorescent labeling affect the binding kinetics of proteins with intact cells? Biosens. Bioelectron. 66, 412–416 (2015).

-

Costantini, L. M. & Snapp, E. L. Fluorescent proteins in cellular organelles: serious pitfalls and some solutions. DNA Cell Biol. 32, 622–627 (2013).

-

Costantini, L. M. et al. A palette of fluorescent proteins optimized for diverse cellular environments. Nat. Commun. 6, 7670 (2015).

-

Nguyen, T. L. et al. Quantitative phase imaging: recent advances and expanding potential in biomedicine. ACS Nano 16, 11516–11544 (2022).

-

Park, Y., Depeursinge, C. & Popescu, G. Quantitative phase imaging in biomedicine. Nat. Photonics 12, 578–589 (2018).

-

Manifold, B. & Fu, D. Quantitative stimulated Raman scattering microscopy: promises and pitfalls. Annu. Rev. Anal. Chem. 15, 269–289 (2022).

-

Li, Y. et al. Review of stimulated Raman scattering microscopy techniques and applications in the biosciences. Adv. Biol. 5, 2000184 (2021).

-

Du, J. et al. Raman-guided subcellular pharmaco-metabolomics for metastatic melanoma cells. Nat. Commun. 11, 4830 (2020).

-

Andrews, M. G. & Kriegstein, A. R. Challenges of organoid research. Annu. Rev. Neurosci. 45, 23–39 (2022).

-

Hofer, M. & Lutolf, M. P. Engineering organoids. Nat. Rev. Mater. 6, 402–420 (2021).

-

Huang, Y. et al. Research progress, challenges, and breakthroughs of organoids as disease models. Front. Cell Dev. Biol. 9, 740574 (2021).

Gigan, S. Optical microscopy aims deep. Nat. Photonics 11, 14–16 (2017).

Richardson, D. S. et al. Tissue clearing. Nat. Rev. Methods Prim. 1, 1–24 (2021).

Chen, F., Tillberg, P. W. & Boyden, E. S. Expansion microscopy. Science 347, 543–548 (2015).

Belle, M. et al. Tridimensional visualization and analysis of early human development. Cell 169, 161–173.e12 (2017).

Lecoq, J. A., Boehringer, R. & Grewe, B. F. Deep brain imaging on the move. Nat. Methods 1–2 (2023) https://doi.org/10.1038/s41592-023-01808-z.

Helmchen, F. & Denk, W. Deep tissue two-photon microscopy. Nat. Methods 2, 932–940 (2005).

Li, C. & Wang, Q. Challenges and opportunities for intravital near-infrared fluorescence imaging technology in the second transparency window. ACS Nano 12, 9654–9659 (2018).

Li, C., Chen, G., Zhang, Y., Wu, F. & Wang, Q. Advanced fluorescence imaging technology in the near-infrared-II window for biomedical applications. J. Am. Chem. Soc. 142, 14789–14804 (2020).

Liang, W., He, S. & Wu, S. Fluorescence imaging in second near-infrared window: developments, challenges, and opportunities. Adv. NanoBiomed. Res. 2, 2200087 (2022).

Barretto, R. P. J., Messerschmidt, B. & Schnitzer, M. J. In vivo fluorescence imaging with high-resolution microlenses. Nat. Methods 6, 511–512 (2009).

Qin, Z. et al. Adaptive optics two-photon endomicroscopy enables deep-brain imaging at synaptic resolution over large volumes. Sci. Adv. 6, eabc6521 (2020).

Beacher, N. J., Washington, K. A., Zhang, Y., Li, Y. & Lin, D.-T. GRIN lens applications for studying neurobiology of substance use disorder. Addict. Neurosci. 4, 100049 (2022).

Pochechuev, M. S. et al. Multisite cell- and neural-dynamics-resolving deep brain imaging in freely moving mice with implanted reconnectable fiber bundles. J. Biophotonics 13, e202000081 (2020).

Laing, B. T., Siemian, J. N., Sarsfield, S. & Aponte, Y. Fluorescence microendoscopy for in vivo deep-brain imaging of neuronal circuits. J. Neurosci. Methods 348, 109015 (2021).

Barbera, G. et al. Spatially compact neural clusters in the dorsal striatum encode locomotion relevant information. Neuron 92, 202–213 (2016).

Pernici, C. D., Kemp, B. S. & Murray, T. A. Time course images of cellular injury and recovery in murine brain with high-resolution GRIN lens system. Sci. Rep. 9, 7946 (2019).

McConnell, G. et al. A novel optical microscope for imaging large embryos and tissue volumes with sub-cellular resolution throughout. eLife 5, e18659 (2016).

McConnell, G. & Amos, W. B. Application of the Mesolens for subcellular resolution imaging of intact larval and whole adult Drosophila. J. Microsc. 270, 252–258 (2018).

Voigt, F. F. et al. Reflective multi-immersion microscope objectives inspired by the Schmidt telescope. Nat. Biotechnol. 1–7 (2023) https://doi.org/10.1038/s41587-023-01717-8.

Prabhat, P., Ram, S., Ward, E. S. & Ober, R. J. Simultaneous imaging of different focal planes in fluorescence microscopy for the study of cellular dynamics in three dimensions. IEEE Trans. NanoBiosci. 3, 237–242 (2004).

Abrahamsson, S. et al. Fast multicolor 3D imaging using aberration-corrected multifocus microscopy. Nat. Methods 10, 60–63 (2013).

Levoy, M., Ng, R., Adams, A., Footer, M. & Horowitz, M. Light field microscopy. in ACM SIGGRAPH 2006 Papers 924–934 (Association for Computing Machinery, 2006). https://doi.org/10.1145/1179352.1141976.

Kim, K. Single-shot light-field microscopy: an emerging tool for 3D biomedical imaging. BioChip J. 16, 397–408 (2022).

Li, H. et al. Fast, volumetric live-cell imaging using high-resolution light-field microscopy. Biomed. Opt. Express 10, 29–49 (2019).

Nöbauer, T., Zhang, Y., Kim, H. & Vaziri, A. Mesoscale volumetric light-field (MesoLF) imaging of neuroactivity across cortical areas at 18 Hz. Nat. Methods 1–10 (2023) https://doi.org/10.1038/s41592-023-01789-z.

Cartwright, H. N., Hobson, C. M., Chew, T., Reiche, M. A. & Aaron, J. S. The challenges and opportunities of open-access microscopy facilities. J. Microsc. 00, 1–11 (2023).

Andreev, A. & Koo, D. E. S. Practical guide to storage of large amounts of microscopy data. Microsc. Today 28, 42–45 (2020).

Andreev, A., Morrell, T., Briney, K., Gesing, S. & Manor, U. Biologists need modern data infrastructure on campus. Preprint at https://doi.org/10.48550/arXiv.2108.07631 (2021).

Poger, D., Yen, L. & Braet, F. Big data in contemporary electron microscopy: challenges and opportunities in data transfer, compute and management. Histochem. Cell Biol. 160, 169–192 (2023).

Wallace, C. T., St. Croix, C. M. & Watkins, S. C. Data management and archiving in a large microscopy-and-imaging, multi-user facility: problems and solutions. Mol. Reprod. Dev. 82, 630–634 (2015).

Amat, F. et al. Efficient processing and analysis of large-scale light-sheet microscopy data. Nat. Protoc. 10, 1679–1696 (2015).

Chew, T.-L., George, R., Soell, A. & Betzig, E. Opening a path to commercialization. Opt. Photonics N. 28, 42–49 (2017).

Rahmoon, M. A., Simegn, G. L., William, W. & Reiche, M. A. Unveiling the vision: exploring the potential of image analysis in Africa. Nat. Methods 20, 979–981 (2023).

Moran, C. M. & Thomson, A. J. W. Preclinical ultrasound imaging—a review of techniques and imaging applications. Front. Phys. 8, 00124 (2020).

Wang, L. V. & Yao, J. A practical guide to photoacoustic tomography in the life sciences. Nat. Methods 13, 627–638 (2016).

Das, D., Sharma, A., Rajendran, P. & Pramanik, M. Another decade of photoacoustic imaging. Phys. Med. Biol. 66, 05TR01 (2021).

Lin, L. & Wang, L. V. The emerging role of photoacoustic imaging in clinical oncology. Nat. Rev. Clin. Oncol. 19, 365–384 (2022).

Wang, L. V. & Hu, S. Photoacoustic tomography: in vivo imaging from organelles to organs. Science 335, 1458–1462 (2012).

Wray, P., Lin, L., Hu, P. & Wang, L. V. Photoacoustic computed tomography of human extremities. J. Biomed. Opt. 24, 026003 (2019).

Han, S. et al. Contrast agents for photoacoustic imaging: a review focusing on the wavelength range. Biosensors 12, 594 (2022).

Upputuri, P. K. & Pramanik, M. Recent advances in photoacoustic contrast agents for in vivo imaging. WIREs Nanomed. Nanobiotechnol. 12, e1618 (2020).

Luke, G. P., Yeager, D. & Emelianov, S. Y. Biomedical applications of photoacoustic imaging with exogenous contrast agents. Ann. Biomed. Eng. 40, 422–437 (2012).

Maresca, D. et al. Biomolecular ultrasound and sonogenetics. Annu. Rev. Chem. Biomol. Eng. 9, 229–252 (2018).

Farhadi, A., Ho, G. H., Sawyer, D. P., Bourdeau, R. W. & Shapiro, M. G. Ultrasound imaging of gene expression in mammalian cells. Science 365, 1469–1475 (2019).

Kherlopian, A. R. et al. A review of imaging techniques for systems biology. BMC Syst. Biol. 2, 74 (2008).

Ntziachristos, V. Going deeper than microscopy: the optical imaging frontier in biology. Nat. Methods 7, 603–614 (2010).

Kose, K. Physical and technical aspects of human magnetic resonance imaging: present status and 50 years historical review. Adv. Phys. X 6, 1885310 (2021).

Pike, G. B. Quantitative functional MRI: concepts, issues and future challenges. NeuroImage 62, 1234–1240 (2012).

Soares, J. M. et al. A Hitchhiker’s guide to functional magnetic resonance imaging. Front. Neurosci. 10, 00515 (2016).

Rawson, S. D., Maksimcuka, J., Withers, P. J. & Cartmell, S. H. X-ray computed tomography in life sciences. BMC Biol. 18, 21 (2020).

du Plessis, A. & Broeckhoven, C. Looking deep into nature: a review of micro-computed tomography in biomimicry. Acta Biomater. 85, 27–40 (2019).

Shukla, A. K. & Kumar, U. Positron emission tomography: an overview. J. Med. Phys. 31, 13 (2006).

Hooker, J. M. & Carson, R. E. Human positron emission tomography neuroimaging. Annu. Rev. Biomed. Eng. 21, 551–581 (2019).

Bouma, B. E. et al. Optical coherence tomography. Nat. Rev. Methods Prim. 2, 1–20 (2022).

Gora, M. J., Suter, M. J., Tearney, G. J. & Li, X. Endoscopic optical coherence tomography: technologies and clinical applications [Invited]. Biomed. Opt. Express 8, 2405–2444 (2017).

Zambito, G., Chawda, C. & Mezzanotte, L. Emerging tools for bioluminescence imaging. Curr. Opin. Chem. Biol. 63, 86–94 (2021).

Mezzanotte, L. et al. In vivo molecular bioluminescence imaging: new tools and applications. Trends Biotechnol. 35, 640–652 (2017).

Liu, S., Su, Y., Lin, M. Z. & Ronald, J. A. Brightening up biology: advances in luciferase systems for in vivo imaging. ACS Chem. Biol. 16, 2707–2718 (2021).

Ozturk, M. S. et al. Intravital mesoscopic fluorescence molecular tomography allows non-invasive in vivo monitoring and quantification of breast cancer growth dynamics. Commun. Biol. 4, 1–11 (2021).

Scheele, C. L. G. J. et al. Multiphoton intravital microscopy of rodents. Nat. Rev. Methods Prim. 2, 1–26 (2022).

Alieva, M., Ritsma, L., Giedt, R. J., Weissleder, R. & van Rheenen, J. Imaging windows for long-term intravital imaging. IntraVital 3, e29917 (2014).

Skocek, O. et al. High-speed volumetric imaging of neuronal activity in freely moving rodents. Nat. Methods 15, 429–432 (2018).

Senarathna, J. et al. A miniature multi-contrast microscope for functional imaging in freely behaving animals. Nat. Commun. 10, 99 (2019).

Klioutchnikov, A. et al. A three-photon head-mounted microscope for imaging all layers of visual cortex in freely moving mice. Nat. Methods 20, 610–616 (2023).

Guo, H., Chen, Q., Qin, W., Qi, W. & Xi, L. Detachable head-mounted photoacoustic microscope in freely moving mice. Opt. Lett. 46, 6055–6058 (2021).

Zong, W. et al. Large-scale two-photon calcium imaging in freely moving mice. Cell 185, 1240–1256.e30 (2022).

Zong, W. et al. Miniature two-photon microscopy for enlarged field-of-view, multi-plane and long-term brain imaging. Nat. Methods 18, 46–49 (2021).

D’Ambrosio, M. V. et al. Point-of-care quantification of blood-borne filarial parasites with a mobile phone microscope. Sci. Transl. Med. 7, 286re4 (2015).

Kamgno, J. et al. A test-and-not-treat strategy for onchocerciasis in Loa loa–endemic areas. N. Engl. J. Med. 377, 2044–2052 (2017).

Li, H., Soto-Montoya, H., Voisin, M., Valenzuela, L. F. & Prakash, M. Octopi: open configurable high-throughput imaging platform for infectious disease diagnosis in the field. Preprint at https://doi.org/10.1101/684423 (2019).

Bouchard, M. B. et al. Swept confocally-aligned planar excitation (SCAPE) microscopy for high-speed volumetric imaging of behaving organisms. Nat. Photonics 9, 113–119 (2015).

Tinguely, J.-C., Helle, Ø. I. & Ahluwalia, B. S. Silicon nitride waveguide platform for fluorescence microscopy of living cells. Opt. Express 25, 27678–27690 (2017).

Hohlbein, J. et al. Open microscopy in the life sciences: quo vadis? Nat. Methods 19, 1020–1025 (2022).

Reiche, M. A. et al. Imaging Africa: a strategic approach to optical microscopy training in Africa. Nat. Methods 18, 847–855 (2021).
