Abstract

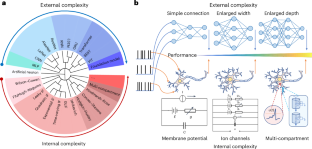

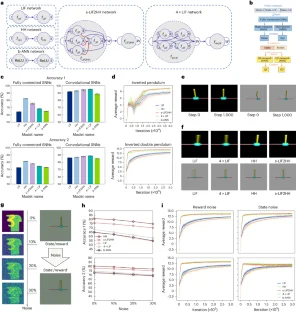

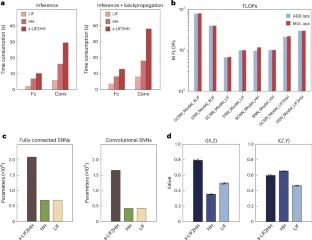

Artificial intelligence (AI) researchers currently believe that the main route to more general models is the big AI model, in which existing neural networks become deeper, larger and wider. We term this the big model with external complexity approach. In this work we argue that there is another approach, called small model with internal complexity, which incorporates rich properties into neurons as a path to constructing larger and more efficient AI models. We find that a network of simpler neurons has to be scaled up externally to reproduce the same dynamical properties. To illustrate this, we build a Hodgkin–Huxley (HH) network with rich internal complexity, where each neuron is an HH model, and prove that the dynamical properties and performance of the HH network can be equivalent to those of a bigger leaky integrate-and-fire (LIF) network, where each neuron is a LIF neuron with simple internal complexity.

Data availability

The MultiMNIST dataset can be found at https://drive.google.com/open?id=1VnmCmBAVh8f_BKJg1KYx-E137gBLXbGG or in the GitHub public repository at https://github.com/Xi-L/ParetoMTL/tree/master/multiMNIST/data. The data used in the deep reinforcement learning experiment are generated from the ‘InvertedDoublePendulum-v4’ and ‘InvertedPendulum-v4’ simulation environments in the gym library (https://gym.openai.com). Source data for Figs. 3–5 can be accessed via the following Zenodo repository: https://doi.org/10.5281/zenodo.12531887 (ref. 55). Source data are provided with this paper.

Code availability

All of the source code for reproducing the results in this paper is available at https://github.com/helx-20/complexity (ref. 55). We use Python v.3.8.12 (https://www.python.org/), NumPy v.1.21.2 (https://github.com/numpy/numpy), SciPy v.1.7.3 (https://www.scipy.org/), Matplotlib v.3.5.1 (https://github.com/matplotlib/matplotlib), Pandas v.1.4.1 (https://github.com/pandas-dev/pandas), Pillow v.8.4.0 (https://pypi.org/project/Pillow), MATLAB R2021a software and the SAC algorithm (https://github.com/haarnoja/sac).
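When reproducing the results, it may help to compare a local environment against the package versions listed above. The following is a minimal sketch using only the standard library; the names `PINNED` and `check_versions` are hypothetical helpers introduced here for illustration and are not part of the authors' repository.

```python
from importlib import metadata

# Versions copied from the Code availability statement; the distribution
# names are the usual PyPI names (an assumption about how they were installed).
PINNED = {
    "numpy": "1.21.2",
    "scipy": "1.7.3",
    "matplotlib": "3.5.1",
    "pandas": "1.4.1",
    "Pillow": "8.4.0",
}


def check_versions(pinned=PINNED):
    """Return {package: (expected, installed)}; installed is None if absent."""
    report = {}
    for name, expected in pinned.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed = None
        report[name] = (expected, installed)
    return report


if __name__ == "__main__":
    for name, (expected, installed) in check_versions().items():
        status = "ok" if installed == expected else "differs"
        print(f"{name}: expected {expected}, found {installed} ({status})")
```

A version mismatch does not necessarily prevent the code from running; the check only flags where a local setup departs from the stated environment.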

References

1. Ouyang, L. et al. Training language models to follow instructions with human feedback. in Advances in Neural Information Processing Systems Vol. 35 27730–27744 (NeurIPS, 2022).
2. Raffel, C. et al. Exploring the limits of transfer learning with a unified text-to-text transformer. J. Mach. Learn. Res. 21, 5485–5551 (2020).
3. Bommasani, R. et al. On the opportunities and risks of foundation models. Preprint at https://arxiv.org/abs/2108.07258 (2021).
4. Rosenblatt, F. The perceptron: a probabilistic model for information storage and organization in the brain. Psychol. Rev. 65, 386 (1958).
5. LeCun, Y. et al. Backpropagation applied to handwritten zip code recognition. Neural Comput. 1, 541–551 (1989).
6. Krizhevsky, A., Sutskever, I. & Hinton, G. E. Imagenet classification with deep convolutional neural networks. Commun. ACM 60, 84–90 (2017).
7. He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. in Proc. IEEE Conference on Computer Vision and Pattern Recognition 770–778 (IEEE, 2016).
8. Hopfield, J. J. Neural networks and physical systems with emergent collective computational abilities. Proc. Natl Acad. Sci. USA 79, 2554–2558 (1982).
9. Hochreiter, S. & Schmidhuber, J. Long short-term memory. Neural Comput. 9, 1735–1780 (1997).
10. Cho, K. et al. Learning phrase representations using RNN encoder–decoder for statistical machine translation. Preprint at https://arxiv.org/abs/1406.1078 (2014).
11. Vaswani, A. et al. Attention is all you need. in 31st Conference on Neural Information Processing Systems (NIPS, 2017).
12. Devlin, J., Chang, M.-W., Lee, K. & Toutanova, K. BERT: pre-training of deep bidirectional transformers for language understanding. in Proc. 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers) 4171–4186 (Association for Computational Linguistics, 2019).
13. Dosovitskiy, A. et al. An image is worth 16 × 16 words: transformers for image recognition at scale. in International Conference on Learning Representations (2020).
14. Liu, Z. et al. Swin transformer: hierarchical vision transformer using shifted windows. in Proc. IEEE/CVF International Conference on Computer Vision 10012–10022 (2021).
15. Li, Y. et al. Competition-level code generation with alphacode. Science 378, 1092–1097 (2022).
16. Ramesh, A., Dhariwal, P., Nichol, A., Chu, C. & Chen, M. Hierarchical text-conditional image generation with clip latents. Preprint at https://arxiv.org/abs/2204.06125 (2022).
17. Dauparas, J. et al. Robust deep learning-based protein sequence design using proteinMPNN. Science 378, 49–56 (2022).
18. Dayan, P. & Abbott, L. F. Theoretical Neuroscience: Computational and Mathematical Modeling of Neural Systems (MIT Press, 2005).
19. Markram, H. The blue brain project. Nat. Rev. Neurosci. 7, 153–160 (2006).
20. Izhikevich, E. M. Simple model of spiking neurons. IEEE Trans. Neural Netw. 14, 1569–1572 (2003).
21. Eliasmith, C. et al. A large-scale model of the functioning brain. Science 338, 1202–1205 (2012).
22. Wilson, H. R. & Cowan, J. D. Excitatory and inhibitory interactions in localized populations of model neurons. Biophys. J. 12, 1–24 (1972).
23. FitzHugh, R. Mathematical models of threshold phenomena in the nerve membrane. Bull. Math. Biophys. 17, 257–278 (1955).
24. Nagumo, J., Arimoto, S. & Yoshizawa, S. An active pulse transmission line simulating nerve axon. Proc. IRE 50, 2061–2070 (1962).
25. Lapicque, L. Recherches quantitatives sur l’excitation electrique des nerfs traitee comme une polarization. J. Physiol. Pathol. Générale 9, 620–635 (1907).
26. Ermentrout, G. B. & Kopell, N. Parabolic bursting in an excitable system coupled with a slow oscillation. SIAM J. Appl. Math. 46, 233–253 (1986).
27. Fourcaud-Trocmé, N., Hansel, D., Van Vreeswijk, C. & Brunel, N. How spike generation mechanisms determine the neuronal response to fluctuating inputs. J. Neurosci. 23, 11628–11640 (2003).
28. Teeter, C. et al. Generalized leaky integrate-and-fire models classify multiple neuron types. Nat. Commun. 9, 709 (2018).
29. Hodgkin, A. L. & Huxley, A. F. A quantitative description of membrane current and its application to conduction and excitation in nerve. J. Physiol. 117, 500 (1952).
30. Connor, J. & Stevens, C. Prediction of repetitive firing behaviour from voltage clamp data on an isolated neurone soma. J. Physiol. 213, 31–53 (1971).
31. Hindmarsh, J. L. & Rose, R. A model of neuronal bursting using three coupled first order differential equations. Proc. R. Soc. Lond. B 221, 87–102 (1984).
32. de Menezes, M. A. & Barabási, A.-L. Separating internal and external dynamics of complex systems. Phys. Rev. Lett. 93, 068701 (2004).
33. Ko, K.-I. On the computational complexity of ordinary differential equations. Information Control 58, 157–194 (1983).
34. Waibel, A., Hanazawa, T., Hinton, G., Shikano, K. & Lang, K. J. Phoneme recognition using time-delay neural networks. IEEE Trans. Signal Process. 37, 328–339 (1989).
35. Roy, K., Jaiswal, A. & Panda, P. Towards spike-based machine intelligence with neuromorphic computing. Nature 575, 607–617 (2019).
36. Pei, J. et al. Towards artificial general intelligence with hybrid Tianjic chip architecture. Nature 572, 106–111 (2019).
37. Davies, M. et al. Loihi: a neuromorphic manycore processor with on-chip learning. IEEE Micro 38, 82–99 (2018).
38. Zhou, P., Choi, D.-U., Lu, W. D., Kang, S.-M. & Eshraghian, J. K. Gradient-based neuromorphic learning on dynamical RRAM arrays. IEEE J. Emerging and Selected Topics in Circuits and Systems 12, 888–897 (2022).
39. Wu, Y., Deng, L., Li, G., Zhu, J. & Shi, L. Spatio-temporal backpropagation for training high-performance spiking neural networks. Front. Neurosci. 12, 331 (2018).
40. Haarnoja, T., Zhou, A., Abbeel, P. & Levine, S. Soft actor-critic: off-policy maximum entropy deep reinforcement learning with a stochastic actor. in International Conference on Machine Learning 1861–1870 (PMLR, 2018).
41. Tishby, N., Pereira, F. C. & Bialek, W. The information bottleneck method. Preprint at https://arxiv.org/abs/physics/0004057 (2000).
42. Johnson, M. H. Functional brain development in humans. Nat. Rev. Neurosci. 2, 475–483 (2001).
43. Rakic, P. Evolution of the neocortex: a perspective from developmental biology. Nat. Rev. Neurosci. 10, 724–735 (2009).
44. Kandel, E. R. et al. Principles of Neural Science Vol. 4 (McGraw-Hill, 2000).
45. Stelzer, F., Röhm, A., Vicente, R., Fischer, I. & Yanchuk, S. Deep neural networks using a single neuron: folded-in-time architecture using feedback-modulated delay loops. Nat. Commun. 12, 5164 (2021).
46. Adeli, H. & Park, H. S. Optimization of space structures by neural dynamics. Neural Netw. 8, 769–781 (1995).
47. Dubreuil, A., Valente, A., Beiran, M., Mastrogiuseppe, F. & Ostojic, S. The role of population structure in computations through neural dynamics. Nat. Neurosci. 25, 783–794 (2022).
48. Tian, Y. et al. Theoretical foundations of studying criticality in the brain. Netw. Neurosci. 6, 1148–1185 (2022).
49. Gidon, A. et al. Dendritic action potentials and computation in human layer 2/3 cortical neurons. Science 367, 83–87 (2020).
50. Koch, C., Bernander, Ö. & Douglas, R. J. Do neurons have a voltage or a current threshold for action potential initiation? J. Comput. Neurosci. 2, 63–82 (1995).
51. Tavanaei, A., Ghodrati, M., Kheradpisheh, S. R., Masquelier, T. & Maida, A. Deep learning in spiking neural networks. Neural Netw. 111, 47–63 (2019).
52. Lin, X., Zhen, H.-L., Li, Z., Zhang, Q.-F. & Kwong, S. Pareto multi-task learning. in 33rd Conference on Neural Information Processing Systems (NeurIPS, 2019).
53. Molchanov, P., Tyree, S., Karras, T., Aila, T. & Kautz, J. Pruning convolutional neural networks for resource efficient inference. in International Conference on Learning Representations (2022).
54. Alemi, A. A., Fischer, I., Dillon, J. V. & Murphy, K. Deep variational information bottleneck. in International Conference on Learning Representations (2022).
55. Linxuan, H. Network model with internal complexity bridges artificial intelligence and neuroscience. Zenodo https://doi.org/10.5281/zenodo.12531887 (2024).

Acknowledgements

This work was partially supported by the National Science Foundation for Distinguished Young Scholars (grant no. 62325603), the National Natural Science Foundation of China (grant nos. 62236009, U22A20103, 62441606, 62332002, 62027804, 62425101, 62088102), the Beijing Natural Science Foundation for Distinguished Young Scholars (grant no. JQ21015), the Hong Kong Polytechnic University under Project P0050631 and the CAAI-MindSpore Open Fund, developed on OpenI Community.

Author information

Contributions

G.L. proposed the initial idea and supervised the whole project. L.H. led the experiments, whereas Y.X. led the theoretical derivation. Y.X. took part in writing the code concerning the computational efficiency measurement and mutual information analysis. L.H., Y.X., W.H. and Y.L. took part in modifying the neuron models. W.H. and Y.L. took part in the design of the simulation and deep learning experiments, the computational efficiency measurement and the mutual information analysis; they also wrote the code concerning the network models and deep learning experiments. Yang Tian contributed to the design of the mutual information analysis. Y.W. contributed to writing the code concerning neuron models and HH network training methods. W.W. and Z.Z. contributed to the design of the deep learning experiments. J.H., Yonghong Tian and B.X. provided guidance for this work. G.L. led the writing of this paper, with all authors assisting in writing and reviewing the paper.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Computational Science thanks Jason K. Eshraghian, Nicolas Fourcaud-Trocmé and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. Peer reviewer reports are available. Primary Handling Editor: Ananya Rastogi, in collaboration with the Nature Computational Science team.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary Information

Proof of Theorem 1, supporting experiments of network equivalence and Supplementary Figs. 1–9 and Tables 1–10.

Reporting Summary

Peer Review File

Supplementary Data 1

Data for Supplementary Fig. 1.

Supplementary Data 3

Data for Supplementary Fig. 3.

Supplementary Data 8

Data for Supplementary Fig. 8.

Supplementary Data 9

Data for Supplementary Fig. 9.

Source data

Source Data Fig. 3

Source data for Fig. 3.

Source Data Fig. 4

Source data for Fig. 4.

Source Data Fig. 5

Source data for Fig. 5.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

He, L., Xu, Y., He, W. et al. Network model with internal complexity bridges artificial intelligence and neuroscience. Nat Comput Sci 4, 584–599 (2024). https://doi.org/10.1038/s43588-024-00674-9

- Received: 14 July 2023
- Accepted: 12 July 2024
- Published: 16 August 2024
- Issue Date: August 2024
- DOI: https://doi.org/10.1038/s43588-024-00674-9