Nvidia is adding more of its AI computing resources to the Microsoft Azure cloud as both companies look to help enterprises accelerate and expand their own AI development. The news comes as OpenAI, in which Microsoft invested earlier this year, is seeking more money from the software giant to ramp up its generative AI efforts; as Microsoft itself is investing billions of dollars in future AI applications; and as Nvidia continues to push its full-stack AI hardware and software to enterprises and their cloud providers.

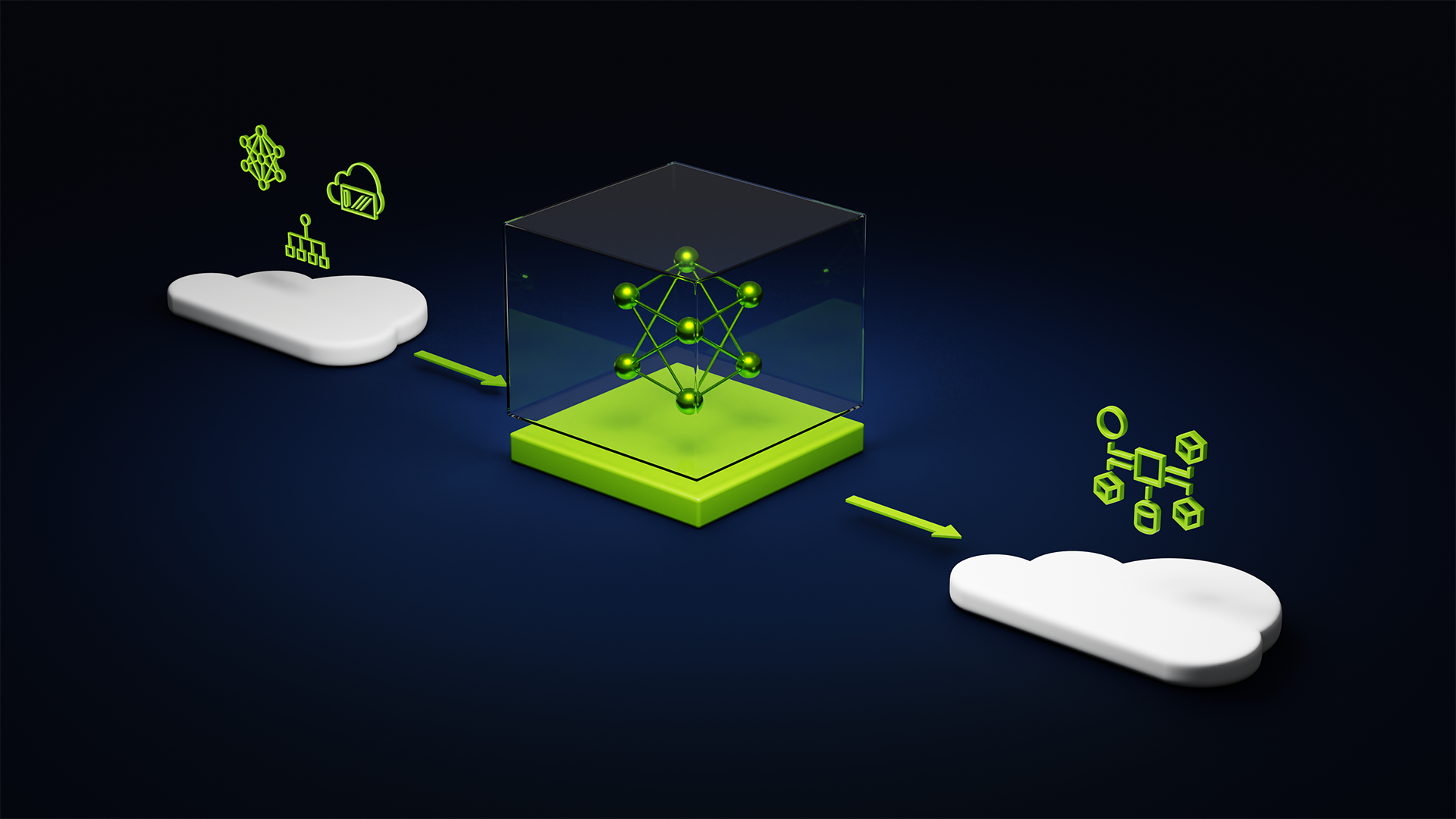

During the Microsoft Ignite event this week, Nvidia unveiled an AI foundry service available through Microsoft Azure. The service will pull together three elements (a collection of Nvidia AI Foundation Models, the Nvidia NeMo framework and tools, and the company's DGX Cloud AI supercomputing services) into an end-to-end offering that will let enterprises and other firms build and fine-tune their own customized generative AI models more quickly. They can then use Nvidia's AI Enterprise software to deploy those custom models to power generative AI applications, including intelligent search, summarization and content generation. To support all of this, Nvidia's DGX Cloud is also now available through the Azure Marketplace.

Manuvir Das, vice president of enterprise computing at Nvidia, said the end-to-end offering through Azure builds on Nvidia's work throughout this year to help enterprises use their own data to create customized language models for their generative AI applications.

“In these new applications, these models are really the largest piece of software that is being used in the application,” he said. “Once these models are produced, Nvidia enables this approach for enterprise customers where they can take the model and the runtime, put it in their briefcase and take it with them tomorrow. It belongs to them and they can deploy it wherever they choose: on servers on-prem, in a ‘colo’, their favorite cloud or into different ecosystems that they’re familiar with.”

The resources Nvidia is bringing to Azure make it easier for Microsoft’s cloud environment to become that destination of choice.

Das said, “For the first time, this entire end-to-end process with all the pieces that [are] needed (all of the hardware, infrastructure, as well as all of the software pieces that Nvidia has built for this end-to-end workflow), they are all available end-to-end on Microsoft Azure, which means that any Microsoft customer can come and do this entire enterprise generative AI workflow. They can procure the required components of technology from the Azure marketplace.”

Microsoft Chairman and CEO Satya Nadella added, “Our partnership with Nvidia spans every layer of the Copilot stack — from silicon to software — as we innovate together for this new age of AI. With Nvidia’s generative AI foundry service on Microsoft Azure, we’re providing new capabilities for enterprises and startups to build and deploy AI applications on our cloud.”

The first users of the AI foundry service through Azure include SAP SE, Amdocs and Getty Images. Das called SAP “the first large commercial customer for this end-to-end workflow,” adding that SAP had used it to power the Joule generative AI assistant it announced in September.

“Joule draws on SAP’s unique position at the nexus of business and technology, and builds on our relevant, reliable and responsible approach to Business AI,” said Christian Klein, CEO and member of the Executive Board of SAP SE. “In partnership with Nvidia, Joule can help customers unlock the potential of generative AI for their business by automating time-consuming tasks and quickly analyzing data to deliver more intelligent, personalized experiences.”

In addition to the AI foundry service, Nvidia announced TensorRT-LLM v0.6 for Windows, an update that brings faster inference, new foundation models and more tools to developers doing generative AI development on local RTX workstations. Das said the move reflects the fact that generative AI development is happening not just in data centers and clouds, but also on local client machines.

“If you think about democratizing enterprise generative AI, development generally has been done in the public cloud or in data centers, but we have a lot of client devices that have GPUs as well that people are using across companies for a variety of different purposes already,” Das said. “It should be possible to leverage the power of these GPUs that are local to the end user to do generative AI as well, so Nvidia has been working with Microsoft on enabling generative AI natively on Windows devices that have an Nvidia RTX GPU. The reason you want to do that is because there’s various use cases that are built based on local applications that are hosted on Windows client devices that should be infused with generative AI.”

Hosting some of those applications locally lets developers work with them offline, and it helps users who face connectivity or latency issues and need to run an application locally.